Navigation of a Self-Driving Vehicle Using One Fiducial Marker

Apr 28, 2021

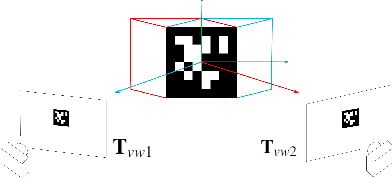

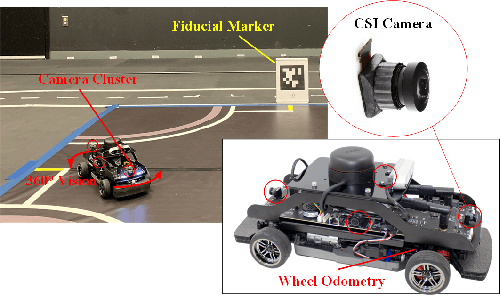

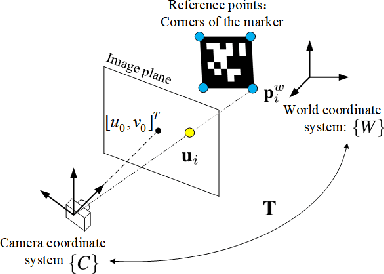

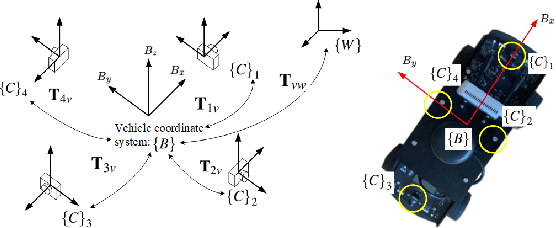

Navigation using only one marker, which contains four artificial features, is challenging because camera pose estimation from only four coplanar points suffers from rotational ambiguity in real-world applications. This paper presents a vision-based navigation framework for a self-driving vehicle equipped with multiple cameras and a wheel odometer. A multi-camera cluster with 360-degree vision is presented, so that the framework requires only one planar marker. A Kalman-filter-based method is introduced to fuse the multi-camera measurements with wheel odometry. Furthermore, an algorithm is proposed that resolves the rotational ambiguity by using the Kalman filter's prediction as additional information. Finally, lateral and longitudinal controllers are provided. Experiments are conducted to illustrate the effectiveness of the theory.
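The idea of using a filter prediction to pick between ambiguous pose solutions can be illustrated with a minimal sketch. It is not the paper's algorithm: the planar `Pose2D` state, the unicycle odometry model in `predict`, the function `select_candidate`, and the weights `pos_sigma` and `yaw_sigma` are all hypothetical names and values chosen for illustration. The sketch assumes the pose solver has already returned the ambiguous candidate vehicle poses, and it keeps the candidate closest to the odometry-based prediction.

```python
import math
from typing import NamedTuple, Sequence


class Pose2D(NamedTuple):
    """Planar vehicle pose (hypothetical state layout for this sketch)."""
    x: float    # metres
    y: float    # metres
    yaw: float  # radians


def wrap_angle(a: float) -> float:
    """Wrap an angle to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def predict(pose: Pose2D, v: float, omega: float, dt: float) -> Pose2D:
    """Prediction step from wheel odometry, assuming a simple unicycle model.

    v is the forward speed (m/s) and omega the yaw rate (rad/s).
    """
    return Pose2D(
        pose.x + v * math.cos(pose.yaw) * dt,
        pose.y + v * math.sin(pose.yaw) * dt,
        wrap_angle(pose.yaw + omega * dt),
    )


def select_candidate(
    predicted: Pose2D,
    candidates: Sequence[Pose2D],
    pos_sigma: float = 0.2,   # assumed position uncertainty, metres
    yaw_sigma: float = 0.1,   # assumed heading uncertainty, radians
) -> Pose2D:
    """Return the candidate pose with the smallest normalised distance
    to the predicted pose. The heading error is wrapped so that yaw
    values near +pi and -pi are treated as close to each other."""
    def cost(c: Pose2D) -> float:
        dx = (c.x - predicted.x) / pos_sigma
        dy = (c.y - predicted.y) / pos_sigma
        dyaw = wrap_angle(c.yaw - predicted.yaw) / yaw_sigma
        return dx * dx + dy * dy + dyaw * dyaw

    return min(candidates, key=cost)


# Example: the vehicle drives straight ahead at 1 m/s for 0.1 s.
previous = Pose2D(0.0, 0.0, 0.0)
predicted = predict(previous, v=1.0, omega=0.0, dt=0.1)

# Two made-up candidate poses, standing in for the two ambiguous
# solutions of a four-coplanar-point pose estimate.
candidates = [Pose2D(0.12, 0.01, 0.03), Pose2D(0.35, -0.40, -0.60)]
chosen = select_candidate(predicted, candidates)
print(chosen)
```

In a full filter the fixed weights would typically be replaced by the predicted state covariance, so that the selection becomes a Mahalanobis-distance test, and the chosen candidate would then be passed to the update step as the measurement.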
