Navigation in a simplified Urban Flow through Deep Reinforcement Learning

Paper and Code

Sep 26, 2024

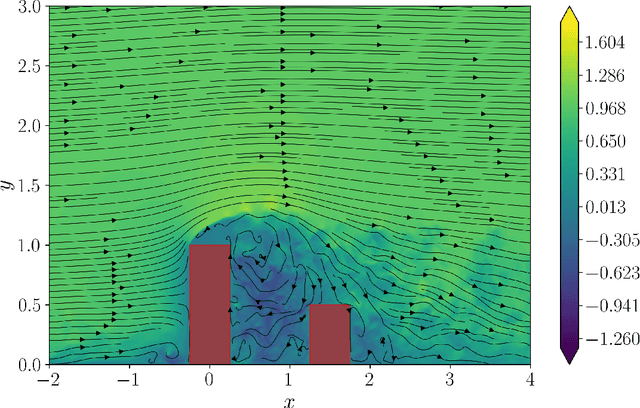

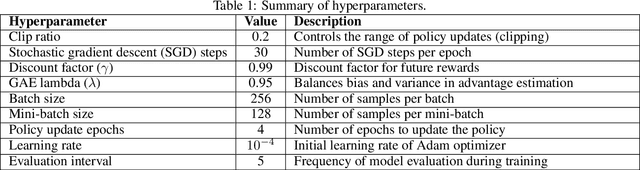

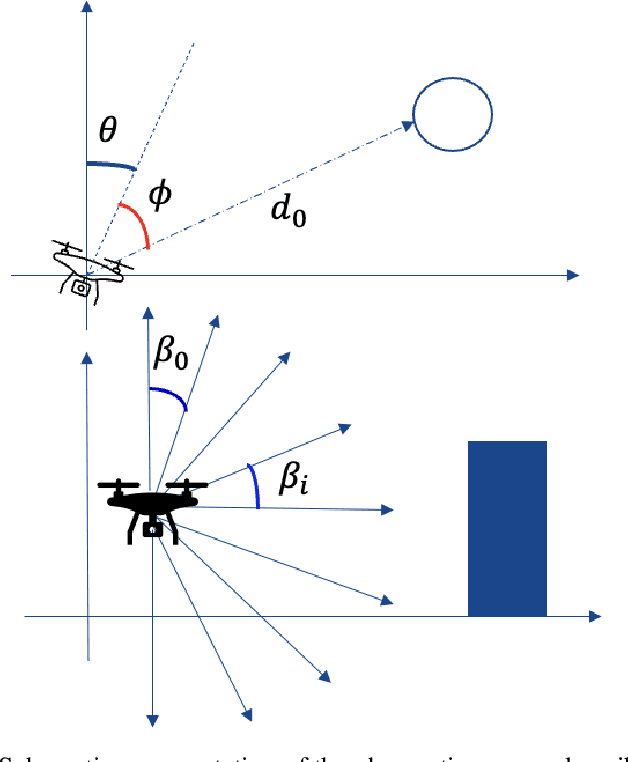

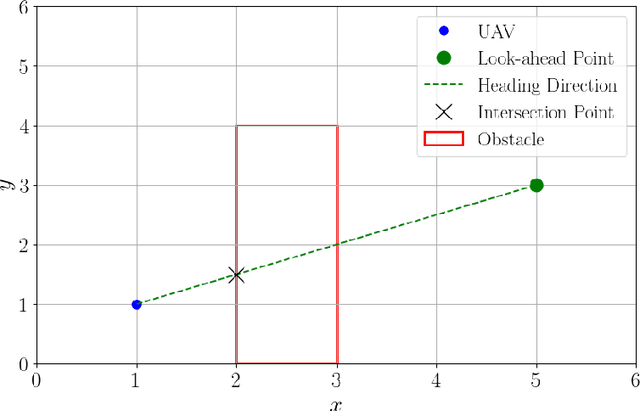

The increasing number of unmanned aerial vehicles (UAVs) in urban environments requires a strategy to minimize their environmental impact, both in terms of energy efficiency and noise reduction. In order to reduce these concerns, novel strategies for developing prediction models and optimization of flight planning, for instance through deep reinforcement learning (DRL), are needed. Our goal is to develop DRL algorithms capable of enabling the autonomous navigation of UAVs in urban environments, taking into account the presence of buildings and other UAVs, optimizing the trajectories in order to reduce both energetic consumption and noise. This is achieved using fluid-flow simulations which represent the environment in which UAVs navigate and training the UAV as an agent interacting with an urban environment. In this work, we consider a domain domain represented by a two-dimensional flow field with obstacles, ideally representing buildings, extracted from a three-dimensional high-fidelity numerical simulation. The presented methodology, using PPO+LSTM cells, was validated by reproducing a simple but fundamental problem in navigation, namely the Zermelo's problem, which deals with a vessel navigating in a turbulent flow, travelling from a starting point to a target location, optimizing the trajectory. The current method shows a significant improvement with respect to both a simple PPO and a TD3 algorithm, with a success rate (SR) of the PPO+LSTM trained policy of 98.7%, and a crash rate (CR) of 0.1%, outperforming both PPO (SR = 75.6%, CR=18.6%) and TD3 (SR=77.4% and CR=14.5%). This is the first step towards DRL strategies which will guide UAVs in a three-dimensional flow field using real-time signals, making the navigation efficient in terms of flight time and avoiding damages to the vehicle.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge