Multivariate outlier explanations using Shapley values and Mahalanobis distances

Paper and Code

Oct 18, 2022

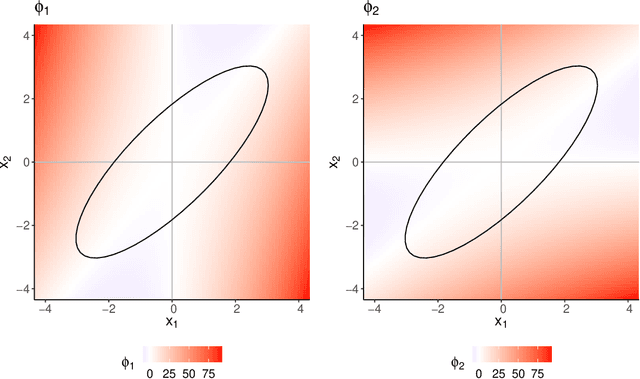

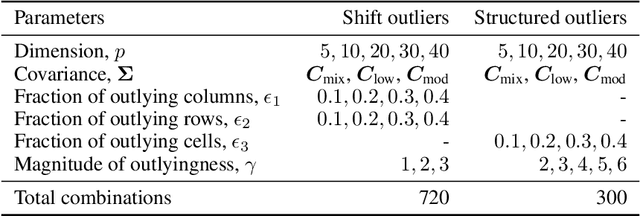

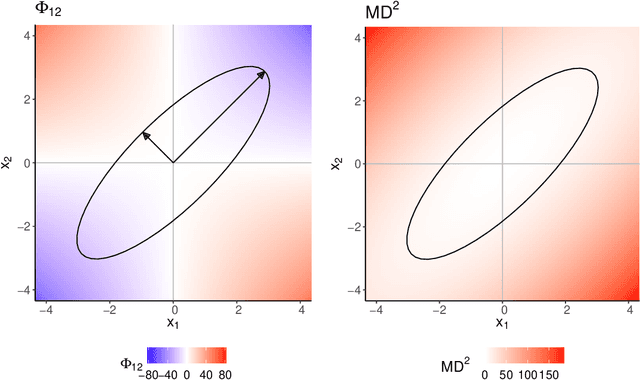

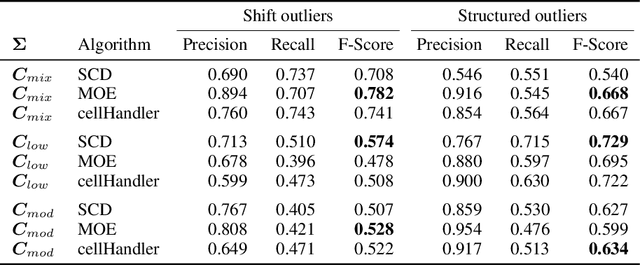

For the purpose of explaining multivariate outlyingness, it is shown that the squared Mahalanobis distance of an observation can be decomposed into outlyingness contributions originating from single variables. The decomposition is obtained using the Shapley value, a well-known concept from game theory that became popular in the context of Explainable AI. In addition to outlier explanation, this concept also relates to the recent formulation of cellwise outlyingness, where Shapley values can be employed to obtain variable contributions for outlying observations with respect to their "expected" position given the multivariate data structure. In combination with squared Mahalanobis distances, Shapley values can be calculated at a low numerical cost, making them even more attractive for outlier interpretation. Simulations and real-world data examples demonstrate the usefulness of these concepts.
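The decomposition described in the abstract can be illustrated with a minimal sketch. It assumes a value function in which the coordinates outside a coalition are replaced by their mean, so that a coalition's worth is the squared Mahalanobis distance of the partially centered observation. Under that assumption, the Shapley value of variable j reduces to (x_j - mu_j) times the j-th entry of Sigma^{-1}(x - mu), which is why the cost is low. The function names, the example `mu`, `cov`, and `x`, and the brute-force check are illustrative choices and are not taken from the paper's code.

```python
import itertools
import math

import numpy as np


def squared_mahalanobis(x, mu, cov_inv):
    """Squared Mahalanobis distance of x from mu, given the inverse covariance."""
    d = x - mu
    return float(d @ cov_inv @ d)


def shapley_closed_form(x, mu, cov_inv):
    """Per-variable contributions: elementwise product of (x - mu) and cov_inv @ (x - mu)."""
    d = x - mu
    return d * (cov_inv @ d)


def shapley_brute_force(x, mu, cov_inv):
    """Exact Shapley values by enumerating all coalitions (exponential in p).

    Assumed value function: coordinates outside the coalition are set to their mean.
    """
    p = len(x)

    def value(coalition):
        z = mu.copy()
        idx = np.array(coalition, dtype=int)
        z[idx] = x[idx]
        return squared_mahalanobis(z, mu, cov_inv)

    phi = np.zeros(p)
    for j in range(p):
        others = [k for k in range(p) if k != j]
        for r in range(p):
            # Standard Shapley weight for coalitions of size r.
            w = math.factorial(r) * math.factorial(p - r - 1) / math.factorial(p)
            for coalition in itertools.combinations(others, r):
                phi[j] += w * (value(coalition + (j,)) - value(coalition))
    return phi


# Hypothetical example data (not from the paper).
mu = np.array([0.0, 0.0, 0.0])
cov = np.array([[1.0, 0.8, 0.2],
                [0.8, 1.0, 0.3],
                [0.2, 0.3, 1.0]])
x = np.array([3.0, -1.0, 0.5])

cov_inv = np.linalg.inv(cov)
md2 = squared_mahalanobis(x, mu, cov_inv)
phi = shapley_closed_form(x, mu, cov_inv)
phi_check = shapley_brute_force(x, mu, cov_inv)

print("squared Mahalanobis distance:", md2)
print("closed-form contributions:   ", phi)
print("brute-force contributions:   ", phi_check)
```

In this sketch the contributions sum to the squared Mahalanobis distance, and individual contributions can be negative when a coordinate's deviation is in line with what the correlation structure would suggest given the other coordinates. The closed form needs only one matrix-vector product per observation, whereas the enumeration grows exponentially with the number of variables.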
