Multiple View Generation and Classification of Mid-wave Infrared Images using Deep Learning

Paper and Code

Aug 18, 2020

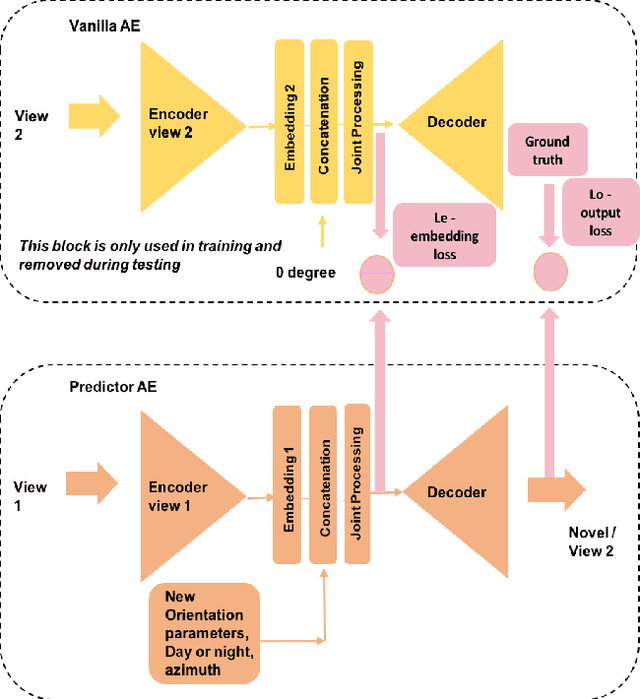

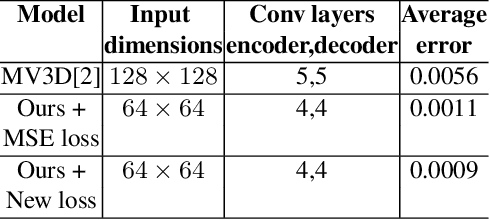

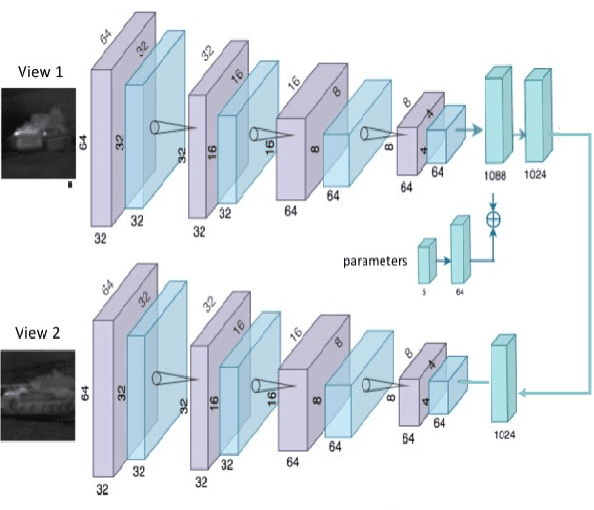

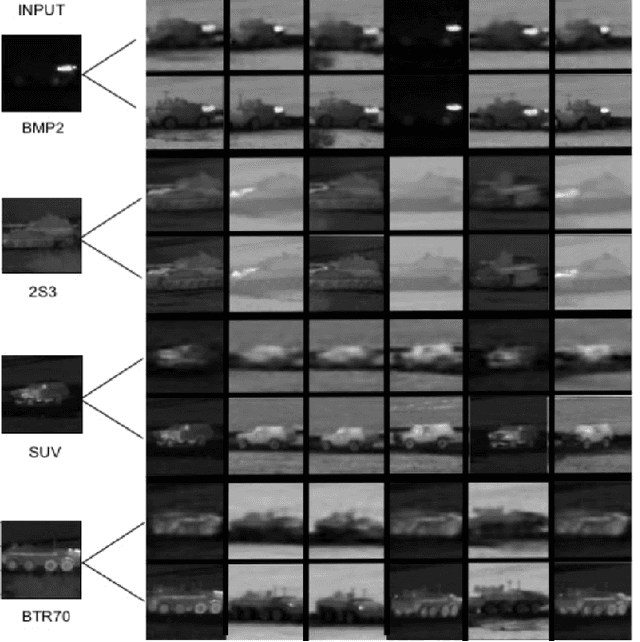

We propose a novel study of generating unseen arbitrary viewpoints for infrared imagery in the non-linear feature subspace. Current methods use synthetic images and often produce blurry and distorted outputs. Our approach, by contrast, captures the semantic information in natural images and encodes it so that the predicted unseen views possess good 3D representations. We further explore the non-linear feature subspace and conclude that our network operates not in the Euclidean subspace but in the Riemannian subspace. Rather than learning a geometric transformation that predicts the position of each pixel in the new image, the network learns the manifold. To this end, we use t-SNE visualisations to conduct a detailed analysis of our network, and we perform classification of the generated images as a low-shot learning task.
