Multi-Timescale Modeling of Human Behavior

Paper and Code

Nov 16, 2022

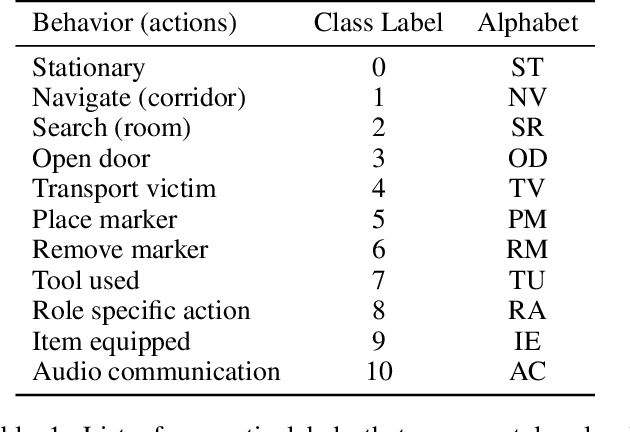

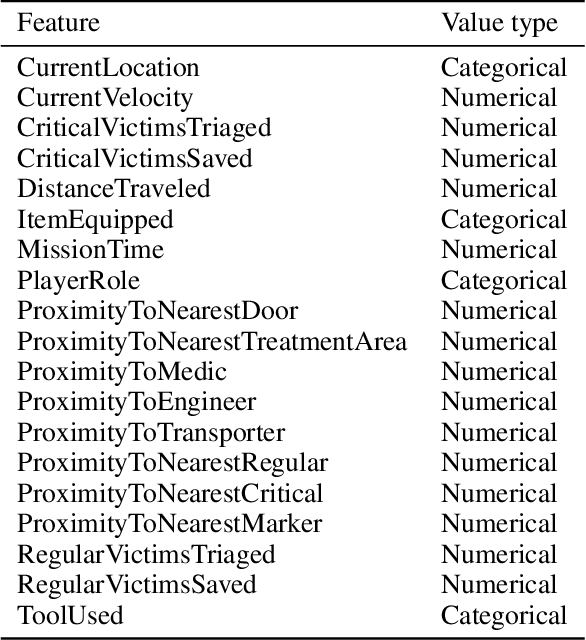

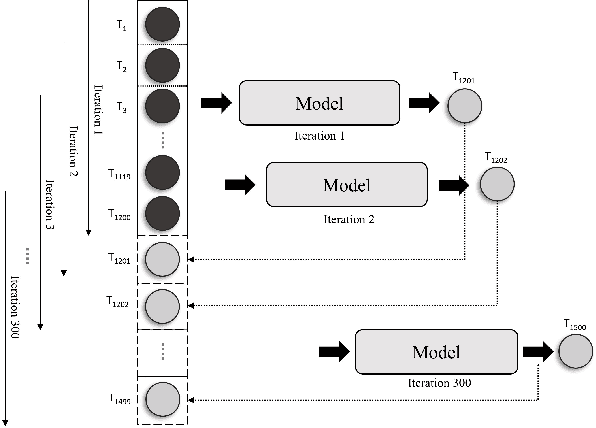

In recent years, the role of artificially intelligent (AI) agents has evolved from basic tools to socially intelligent agents that work alongside humans towards common goals. In such scenarios, it is highly desirable for an AI agent to be able to predict the future behavior of its human teammates by observing their past actions. Goal-oriented human behavior is complex, hierarchical, and unfolds across multiple timescales. Despite this observation, relatively little attention has been paid to using multi-timescale features to model such behavior. In this paper, we propose an LSTM network architecture that processes behavioral information at multiple timescales to predict future behavior. We demonstrate that modeling behavior at multiple timescales substantially improves prediction of future behavior compared to methods that do not. We evaluate our architecture on data collected in an urban search and rescue scenario simulated in a virtual Minecraft-based testbed, and compare its performance to that of several baselines as well as other methods that do not process inputs at multiple timescales.
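The abstract does not say how the multi-timescale inputs are constructed, so the following is only an illustrative sketch under stated assumptions, not the authors' method. It assumes behavior is logged as a sequence of per-step feature vectors and forms coarser timescales by averaging non-overlapping windows; each resulting view could then, for example, be fed to its own LSTM branch. The function names `pool_timescale` and `multi_timescale_views` and the window sizes are hypothetical.

```python
from typing import Dict, List, Sequence


def pool_timescale(seq: Sequence[Sequence[float]], window: int) -> List[List[float]]:
    """Average consecutive, non-overlapping windows of per-step feature vectors."""
    if window < 1:
        raise ValueError("window must be >= 1")
    pooled = []
    for start in range(0, len(seq), window):
        chunk = seq[start:start + window]  # the last chunk may be shorter
        dim = len(chunk[0])
        pooled.append([sum(step[d] for step in chunk) / len(chunk) for d in range(dim)])
    return pooled


def multi_timescale_views(
    seq: Sequence[Sequence[float]], windows: Sequence[int] = (1, 2, 4)
) -> Dict[int, List[List[float]]]:
    """Return one pooled copy of the sequence per window size (assumed timescales)."""
    return {w: pool_timescale(seq, w) for w in windows}


# Toy sequence: four time steps of a two-dimensional one-hot action encoding.
actions = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 0.0]]
views = multi_timescale_views(actions)
for window, view in views.items():
    print(window, view)
```

With a window of 1 the view is the raw sequence; larger windows summarize longer stretches of behavior, which is one simple way to expose slower-changing structure to a sequence model.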
