Multi-stage domain adversarial style reconstruction for cytopathological image stain normalization


Sep 11, 2019

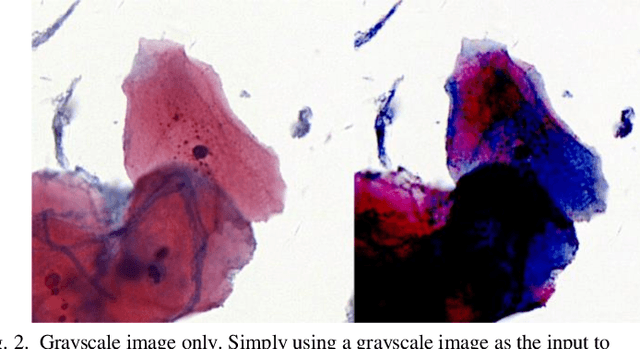

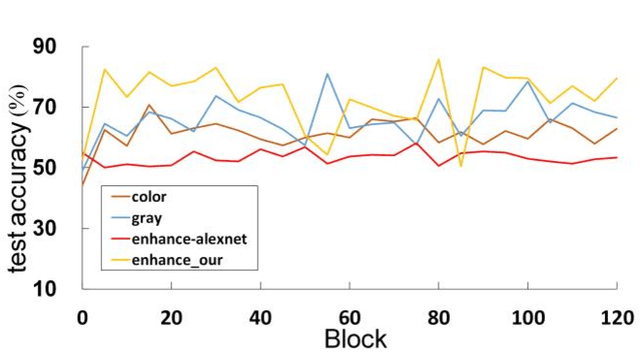

Variation in stain style across cytopathological images degrades the generalization ability of automated image analysis algorithms. This article proposes a new framework that normalizes the stain style of cytopathological images through a stain removal module and a multi-stage domain adversarial style reconstruction module. We convert color images into grayscale images with a color-encoding mask. Using the mask, reconstructed images retain their basic color without red and blue mixing, which is important for cytopathological image interpretation. The style reconstruction module consists of per-pixel regression with intra-domain adversarial learning, inter-domain adversarial learning, and optional task-based refining. Per-pixel regression with intra-domain adversarial learning establishes the generative network that maps the decolorized input to the reconstructed output. Inter-domain adversarial learning further reduces the difference in stain style. The generative network can then be optimized further by combining it with the task network. Experimental results show that the proposed techniques help to optimize the generative network. The average accuracy increases from 75.41% to 84.79% after intra-domain adversarial learning, and to 87.00% after inter-domain adversarial learning. Under the guidance of the task network, the average accuracy reaches 89.58%. The proposed method achieves unsupervised stain normalization of cytopathological images while preserving the cell structure, texture, and cell color properties of the image. This method overcomes the problem of generalizing task models across different stain styles of cytopathological images.
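The stain removal step described in the abstract (grayscale conversion plus a color-encoding mask) could be sketched roughly as follows. This is only an illustration under stated assumptions, not the authors' implementation: the abstract does not define the mask, so the function name `decolorize_with_mask`, the BT.601 luma weights, and the rule "mask is 1 where red exceeds blue" are all hypothetical choices made here to show how a mask can keep red and blue regions distinguishable after decolorization.

```python
import numpy as np

def decolorize_with_mask(rgb):
    """Hypothetical stain-removal sketch.

    rgb: uint8 array of shape (H, W, 3).
    Returns (gray, mask): gray is float32 in [0, 1]; mask is uint8 with
    1 where the red channel exceeds the blue channel, else 0.
    """
    scaled = rgb.astype(np.float32) / 255.0
    r, g, b = scaled[..., 0], scaled[..., 1], scaled[..., 2]
    # Assumption: standard ITU-R BT.601 luma weights for the grayscale image.
    gray = 0.299 * r + 0.587 * g + 0.114 * b
    # Assumption: a binary mask recording the dominant hue (red vs. blue),
    # so a reconstruction network could avoid mixing the two.
    mask = (r > b).astype(np.uint8)
    return gray, mask

# Tiny example: one pure-red pixel and one pure-blue pixel.
example = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)
gray, mask = decolorize_with_mask(example)
```

In a pipeline of the kind the abstract describes, the pair `(gray, mask)` would be the decolorized input to the generative network; how the paper actually encodes color in the mask may differ from this sketch.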
