Multi-modal Misinformation Detection: Approaches, Challenges and Opportunities

Apr 01, 2022

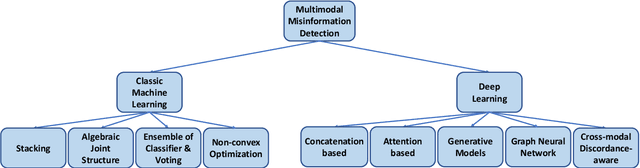

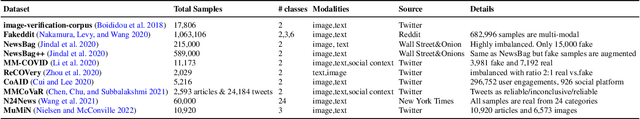

As social media platforms evolve from text-based forums into multi-modal environments, the nature of misinformation on social media is changing accordingly. Because visual modalities such as images and videos are more appealing to users, while textual content is often skimmed carelessly, misinformation spreaders have recently begun to target the contextual correlations between modalities, e.g., text and image. Consequently, considerable research effort has gone into developing automatic techniques for detecting possible cross-modal discordance in web-based media. In this work, we analyze and categorize existing approaches and identify the challenges and shortcomings they face, in order to unearth new opportunities for furthering research in multi-modal misinformation detection.
