Multi-Fingered Active Grasp Learning


Jun 06, 2020

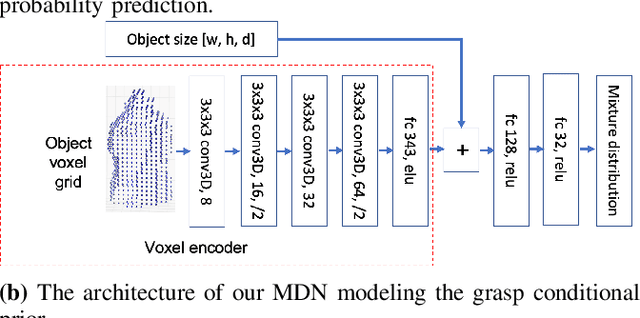

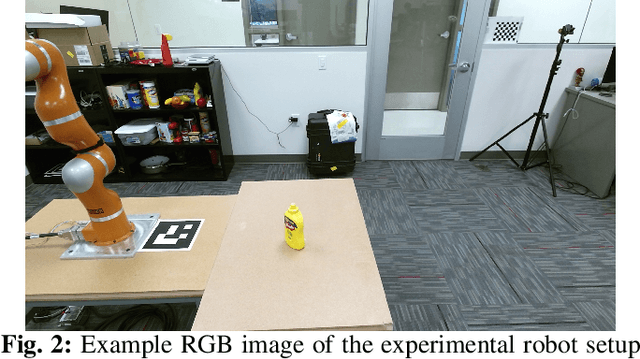

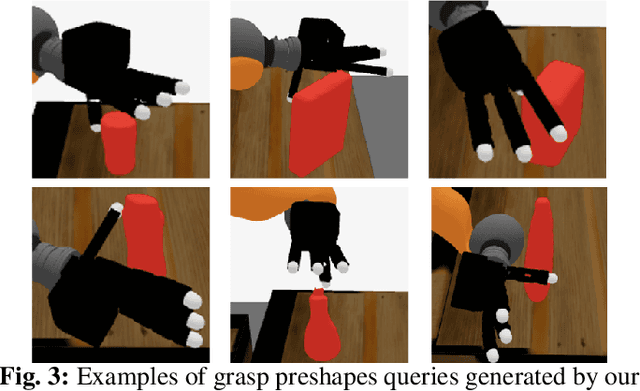

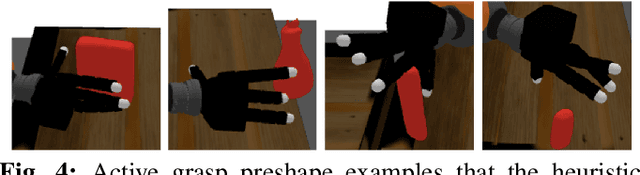

Learning-based approaches to grasp planning are preferred over analytical methods because they generalize better to new, partially observed objects. However, data collection remains one of the biggest bottlenecks for grasp learning, particularly for multi-fingered hands. The relatively high-dimensional configuration space of these hands, combined with the diversity of everyday objects, requires a large number of samples to train robust and confident grasp success classifiers. In this paper, we present the first active learning approach to grasping that searches over the grasp configuration space and classifier confidence in a unified manner. We base our approach on recent success in planning multi-fingered grasps as probabilistic inference with a learned neural network likelihood function. We embed this planner within a multi-armed bandit formulation of sample selection. Our real-robot grasping experiment shows that our active grasp planner, trained with fewer samples, achieves grasp success rates comparable to a passive supervised planner trained on grasping data generated by an analytical (geometric) planner. We also compute the differential entropy of the generated grasps. It shows that our active learner produces more diverse grasps than passive supervised learning, even though the passive learner is trained on a larger amount of heuristically generated data.
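The abstract frames sample selection as a multi-armed bandit problem. The sketch below illustrates only that general idea; it is not the paper's formulation, which the abstract does not spell out. It uses the standard UCB1 rule to choose among hypothetical sample-selection strategies ("arms"). A real active grasp learner might, for example, have one arm that queries grasps with high predicted success and another that queries grasps where the classifier is least confident. The simulated Bernoulli rewards, the three-arm setup, and the names `ucb1_select`, `run_bandit`, and `make_bernoulli` are all illustrative assumptions.

```python
import math
import random


def ucb1_select(counts, values, t, c=2.0):
    """Return the arm maximizing mean reward + UCB1 exploration bonus.

    counts[a] is how often arm a was played; values[a] is its running mean
    reward; t is the current (1-based) round.
    """
    # Play every arm once before the confidence bound is defined.
    for arm, n in enumerate(counts):
        if n == 0:
            return arm
    scores = [values[a] + math.sqrt(c * math.log(t) / counts[a])
              for a in range(len(counts))]
    return max(range(len(scores)), key=scores.__getitem__)


def make_bernoulli(p):
    """Hypothetical arm: reward 1.0 with probability p, else 0.0.

    Stands in for whatever per-query reward an active learner would use.
    """
    def reward(rng):
        return 1.0 if rng.random() < p else 0.0
    return reward


def run_bandit(reward_fns, rounds, seed=0):
    """Run UCB1 for a fixed number of rounds; return play counts and mean rewards."""
    rng = random.Random(seed)
    k = len(reward_fns)
    counts = [0] * k
    values = [0.0] * k  # running mean reward per arm
    for t in range(1, rounds + 1):
        arm = ucb1_select(counts, values, t)
        r = reward_fns[arm](rng)
        counts[arm] += 1
        # Incremental update of the running mean.
        values[arm] += (r - values[arm]) / counts[arm]
    return counts, values


if __name__ == "__main__":
    # Three hypothetical strategies with different (unknown to the learner) payoffs.
    arms = [make_bernoulli(p) for p in (0.2, 0.5, 0.8)]
    counts, values = run_bandit(arms, rounds=2000, seed=0)
    print("plays per arm:", counts)
    print("estimated mean reward:", [round(v, 3) for v in values])
```

The exploration bonus shrinks as an arm is played more. As a result, the learner mostly selects the best-paying strategy while still occasionally revisiting the others, which is the trade-off a bandit-based sample selector exploits.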