Multi-Augmentation for Efficient Visual Representation Learning for Self-supervised Pre-training

May 24, 2022

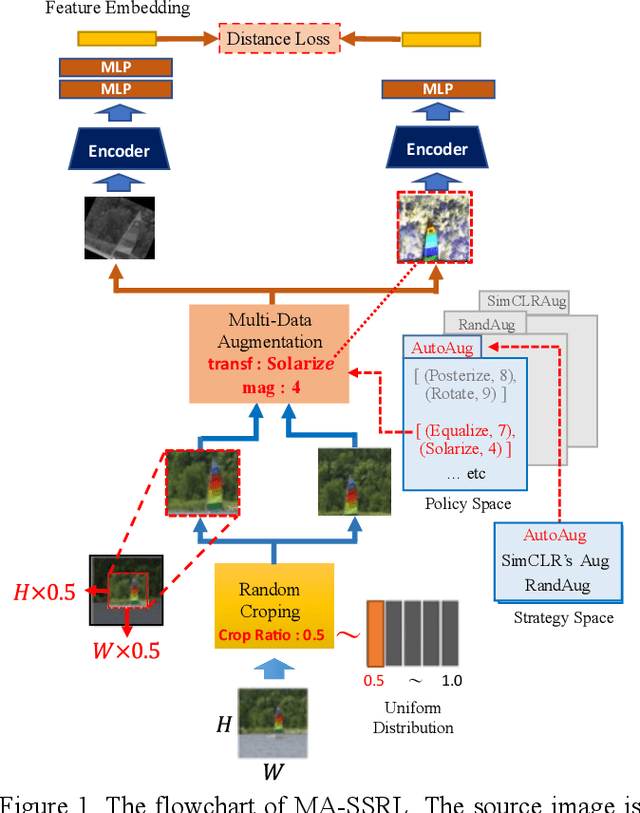

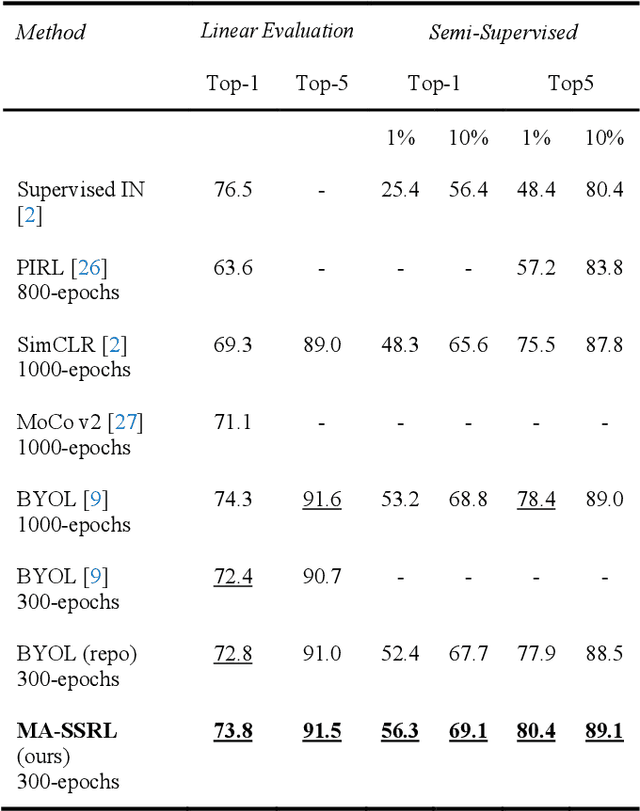

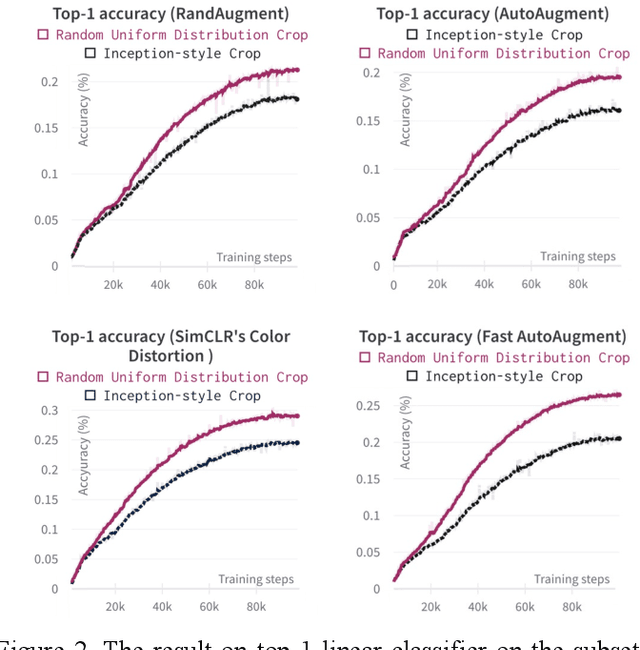

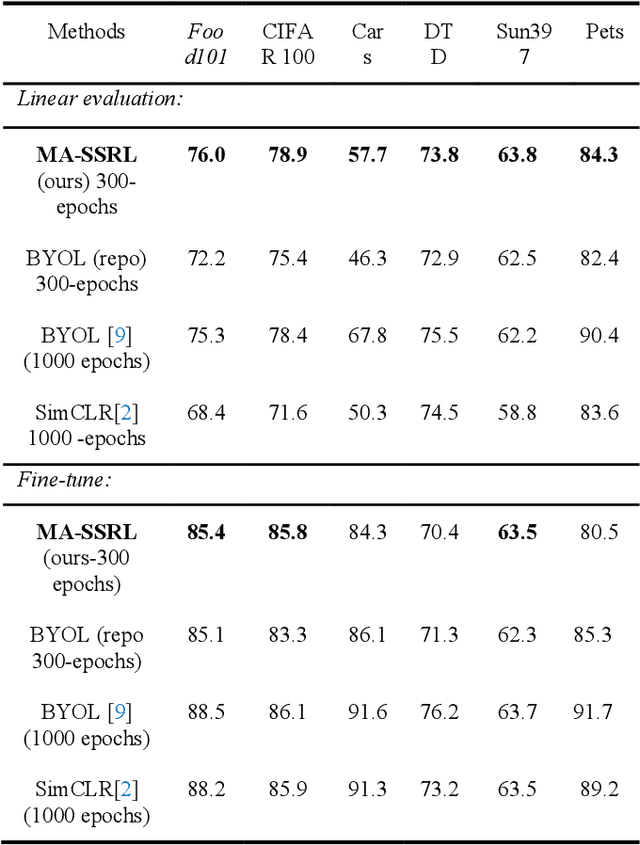

In recent years, self-supervised learning has been studied as a way to cope with the limited availability of labeled data. Among the major components of self-supervised learning, the data augmentation pipeline is a key factor in the resulting performance. However, most researchers design the augmentation pipeline manually, and a limited collection of transformations may leave the learned feature representation lacking in robustness. In this work, we propose Multi-Augmentations for Self-Supervised Representation Learning (MA-SSRL), which searches over a variety of augmentation policies to build the entire pipeline and thereby improve the robustness of the learned feature representation. MA-SSRL learns invariant feature representations and provides an efficient, effective, and adaptable data augmentation pipeline for self-supervised pre-training on datasets from different distributions and domains. MA-SSRL outperforms previous state-of-the-art methods on transfer and semi-supervised benchmarks while requiring fewer training epochs.
