Multi-Attention-Network for Semantic Segmentation of High-Resolution Remote Sensing Images

Paper and Code

Sep 03, 2020

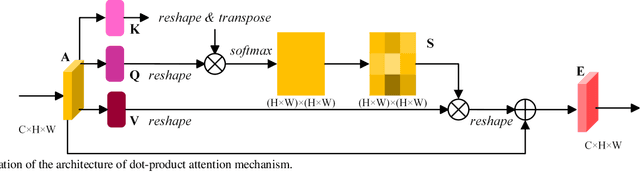

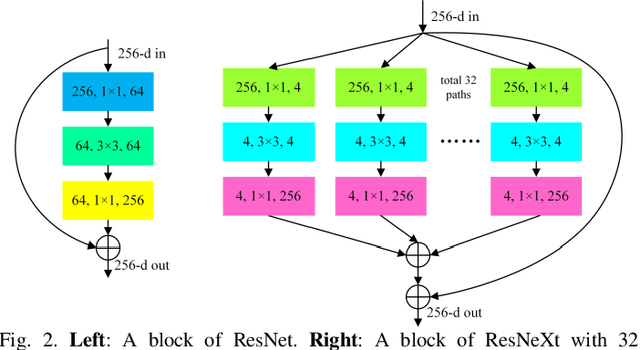

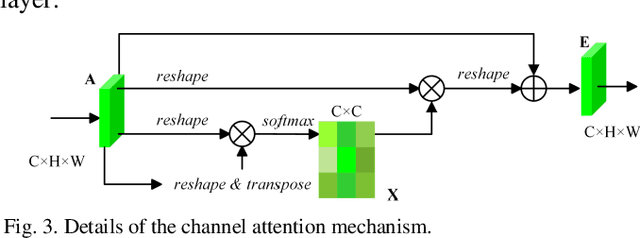

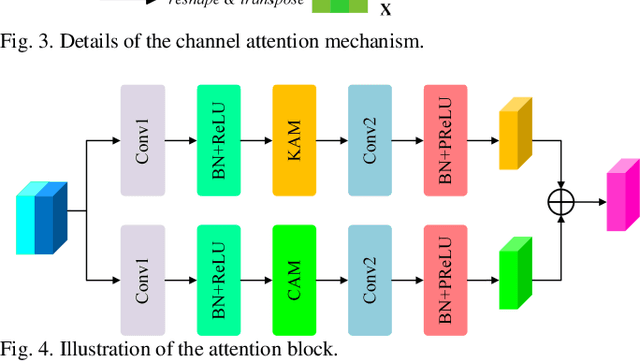

Semantic segmentation of remote sensing images plays an important role in land resource management, yield estimation, and economic assessment. Although convolutional neural networks have markedly improved the semantic segmentation of remote sensing images, standard models still have several limitations. First, in encoder-decoder architectures such as U-Net, the use of multi-scale features leads to overuse of information, because similar low-level features are exploited multiple times at multiple scales. Second, long-range dependencies of feature maps are not sufficiently explored, which leaves the feature representations associated with each semantic class suboptimal. Third, although the dot-product attention mechanism has been widely adopted in semantic segmentation to model long-range dependencies, its high time and space complexity impedes its use in application scenarios with large inputs. In this paper, we propose a Multi-Attention-Network (MANet) to remedy these drawbacks; it extracts contextual dependencies through multiple efficient attention mechanisms. A novel attention mechanism with linear complexity, named kernel attention, is proposed to alleviate the high computational demand of attention. Based on kernel attention and channel attention, we integrate local feature maps extracted by ResNeXt-101 with their corresponding global dependencies, and adaptively emphasize interdependent channel maps. Experiments conducted on two remote sensing image datasets captured by different satellites demonstrate that MANet outperforms DeepLab V3+, PSPNet, FastFCN, and other baseline algorithms.
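To illustrate why an attention mechanism can scale linearly with input size, here is a minimal NumPy sketch of generic kernelized attention. It is not taken from the paper: the abstract does not state the kernel or the normalization, so the softplus feature map and the function names `kernel_attention` and `quadratic_reference` are assumptions made for illustration. The idea is that when the similarity between a query and a key factorizes as a product of feature maps, the keys and values can be summarized once in a small matrix, so the n-by-n attention map is never built.

```python
import numpy as np


def softplus(x):
    # Numerically stable softplus, log(1 + exp(x)); strictly positive, so
    # the resulting similarities are valid (unnormalized) attention weights.
    # ASSUMPTION: the choice of softplus as feature map is illustrative only.
    return np.logaddexp(0.0, x)


def kernel_attention(q, k, v):
    """Kernelized attention, linear in the number of positions n.

    q, k: arrays of shape (n, d); v: array of shape (n, d_v).
    Hypothetical helper for illustration, not the paper's implementation.
    """
    phi_q = softplus(q)
    phi_k = softplus(k)
    kv = phi_k.T @ v             # (d, d_v): summary of keys and values
    k_sum = phi_k.sum(axis=0)    # (d,): used for row normalization
    numer = phi_q @ kv           # (n, d_v)
    denom = phi_q @ k_sum        # (n,)
    return numer / denom[:, None]


def quadratic_reference(q, k, v):
    # Same computation, but forming the full (n, n) similarity matrix,
    # which costs O(n^2) time and memory.
    sim = softplus(q) @ softplus(k).T
    weights = sim / sim.sum(axis=1, keepdims=True)
    return weights @ v


rng = np.random.default_rng(0)
n, d, d_v = 1024, 16, 8          # e.g. n = 32 * 32 flattened pixel positions
q = rng.normal(size=(n, d))
k = rng.normal(size=(n, d))
v = rng.normal(size=(n, d_v))

out = kernel_attention(q, k, v)
ref = quadratic_reference(q, k, v)
```

Both functions compute the same result up to floating-point error; only the order of the matrix products differs. The linear version costs roughly O(n · d · d_v) operations instead of O(n² · d), which is what makes attention over large feature maps affordable under these assumptions.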
