Moving Object Segmentation in Jittery Videos by Stabilizing Trajectories Modeled in Kendall's Shape Space


Aug 14, 2018

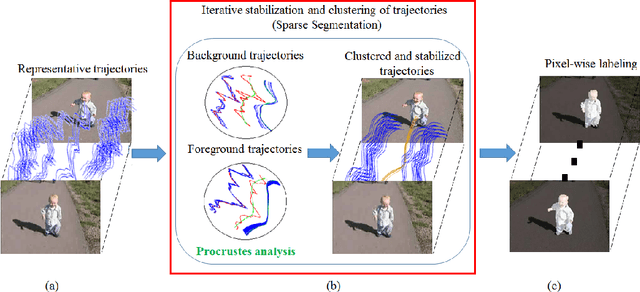

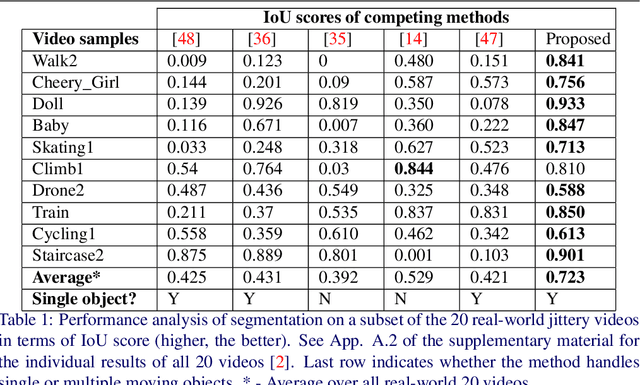

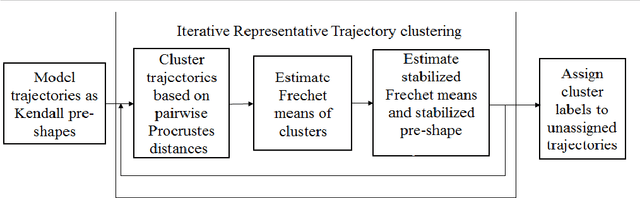

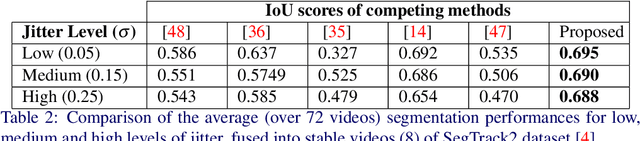

Moving object segmentation is challenging in jittery or wobbly videos, where non-smooth camera motion makes it hard to discriminate foreground objects from background layers. While most recent methods for moving object segmentation in video fail in this scenario, our method generates an accurate segmentation of a single moving object. The proposed method first performs a sparse segmentation, in which frame-wise labels are assigned only to trajectory coordinates, and then labels the frames pixel-wise. The sparse segmentation involves stabilization and clustering of trajectories in a three-stage iterative process. In the first stage, the trajectories are clustered using the pairwise Procrustes distance as a cue for building an affinity matrix. The second stage performs a block-wise Procrustes analysis of the trajectories and estimates the Fréchet means (in Kendall's shape space) of the clusters. The Fréchet means represent the average trajectories of the motion clusters. In the third stage, an optimization function is formulated to stabilize the Fréchet means, yielding stabilized trajectories. The motion clusters are refined iteratively to improve their accuracy, producing distinct groups of stabilized trajectories. Next, the labels obtained from the sparse segmentation are propagated to pixel-wise labels for the frames using a GraphCut-based energy formulation. The novelty of this work is the use of Procrustes analysis and energy minimization in Kendall's shape space for moving object segmentation in jittery videos. A second contribution comes from experiments on a dataset of 20 real-world natural jittery videos with manually annotated ground truth. Experiments are also performed with controlled levels of artificial jitter on videos from the SegTrack2 dataset. Qualitative and quantitative results indicate the superiority of the proposed method.
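The first two stages described above can be illustrated with a minimal sketch. It is not the authors' implementation and rests on several assumptions: each trajectory is treated as a planar configuration of F tracked positions and encoded as a complex vector, the pairwise measure is the full Procrustes distance between unit-norm centred pre-shapes, the affinity uses a Gaussian kernel with a hypothetical bandwidth `sigma`, and the cluster mean is the standard full Procrustes (Fréchet) mean for planar shapes, taken as the leading eigenvector of the summed outer products. All function names are illustrative.

```python
import numpy as np

def preshape(traj):
    """Map an (F, 2) trajectory to a centred, unit-norm complex vector.

    Centring removes translation and normalising removes scale, which is
    the usual pre-shape construction for planar Kendall shape space.
    """
    traj = np.asarray(traj, dtype=float)
    z = traj[:, 0] + 1j * traj[:, 1]
    z = z - z.mean()
    norm = np.linalg.norm(z)
    if norm == 0:
        raise ValueError("degenerate trajectory: all points coincide")
    return z / norm

def procrustes_distance(z, w):
    """Full Procrustes distance between two pre-shapes (rotation-invariant)."""
    c = abs(np.vdot(z, w))              # |<z, w>|, unchanged by rotating z or w
    return np.sqrt(max(0.0, 1.0 - c ** 2))

def affinity_matrix(trajs, sigma=0.5):
    """Gaussian affinity from pairwise Procrustes distances (sigma is assumed)."""
    Z = np.stack([preshape(t) for t in trajs])   # shape (N, F)
    G = np.abs(Z.conj() @ Z.T)                   # |<z_i, z_j>| for all pairs
    D2 = np.clip(1.0 - G ** 2, 0.0, None)        # squared Procrustes distances
    return np.exp(-D2 / sigma ** 2)

def frechet_mean(trajs):
    """Full Procrustes mean: leading eigenvector of sum_i z_i z_i^H."""
    Z = np.stack([preshape(t) for t in trajs])
    S = Z.T @ Z.conj()                           # Hermitian (F, F) matrix
    _, vecs = np.linalg.eigh(S)                  # eigenvalues in ascending order
    return vecs[:, -1]
```

The affinity matrix could then be passed to any spectral clustering routine to obtain motion clusters, with `frechet_mean` applied per cluster. The stabilization objective and the GraphCut-based propagation are not specified in the abstract and are not sketched here.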
