Mono-hydra: Real-time 3D scene graph construction from monocular camera input with IMU


Aug 10, 2023

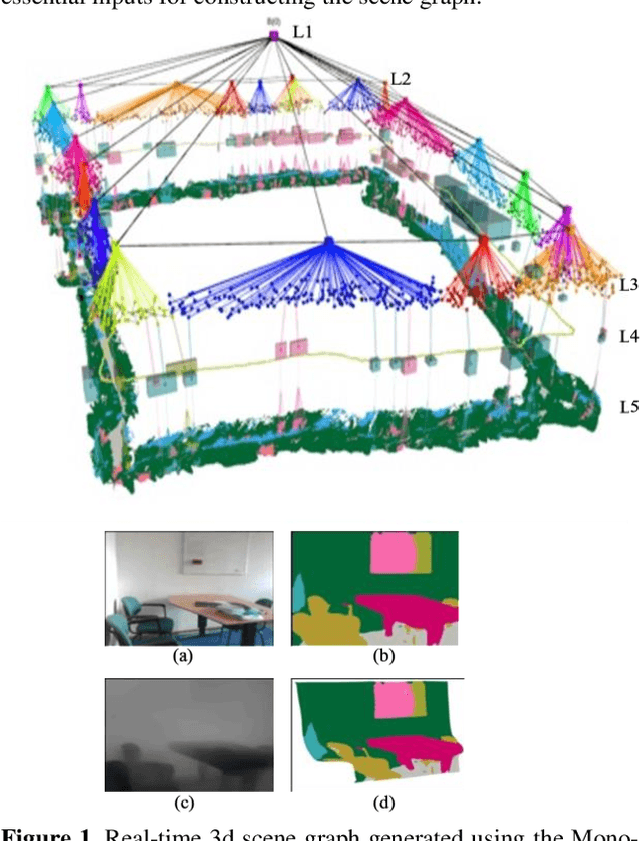

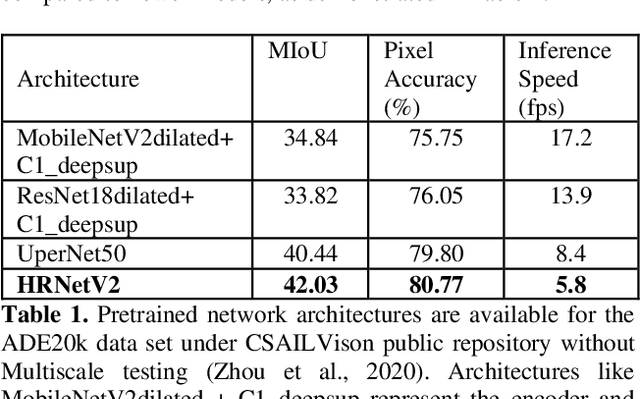

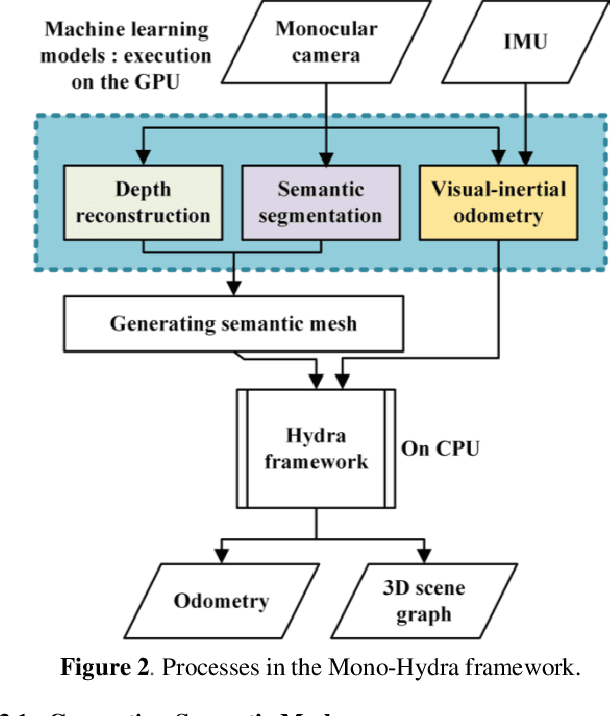

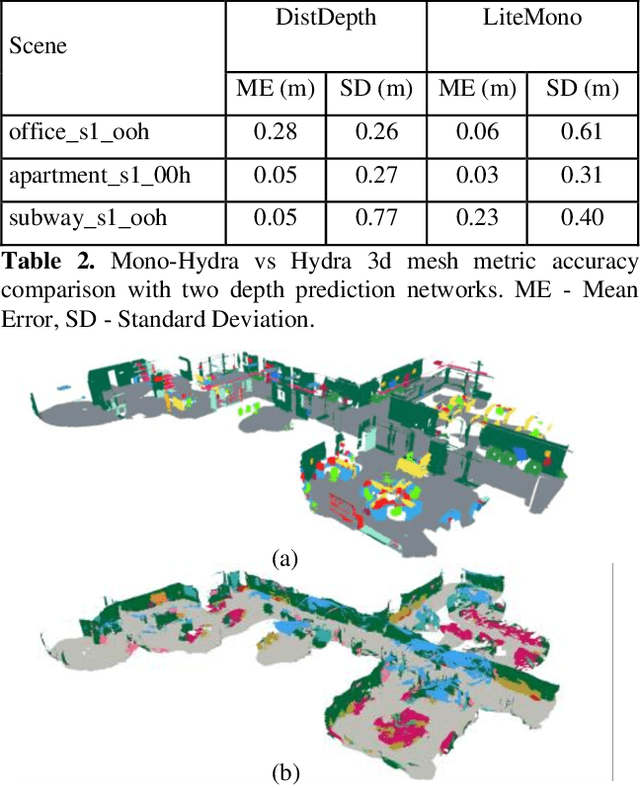

The ability of robots to autonomously navigate through 3D environments depends on their comprehension of spatial concepts, ranging from low-level geometry to high-level semantics such as objects, places, and buildings. To enable such comprehension, 3D scene graphs have emerged as a robust tool for representing the environment as a layered graph of concepts and their relationships. However, building these representations in real time with monocular vision systems remains a difficult task that has not been explored in depth. This paper puts forth Mono-Hydra, a real-time spatial perception system that combines a monocular camera with an IMU and focuses on indoor scenarios; the approach is also adaptable to outdoor applications. The system employs a suite of deep learning algorithms to derive depth and semantics. It uses a robocentric visual-inertial odometry (VIO) algorithm based on square-root information, which ensures consistent visual odometry from the IMU and the monocular camera. The system achieves sub-20 cm error while processing in real time at 15 fps, enabling real-time 3D scene graph construction on a laptop GPU (NVIDIA 3080). This improves the efficiency and effectiveness of decision-making with simple camera setups and makes robotic systems more agile. We make Mono-Hydra publicly available at: https://github.com/UAV-Centre-ITC/Mono_Hydra
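To make the "layered graph of concepts and their relationships" concrete, here is a minimal Python sketch of such a structure. It is illustrative only and is not taken from the Mono-Hydra code base: the class `LayeredSceneGraph`, the layer names in `LAYERS`, and the node identifiers are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical layer names, ordered from low-level to high-level concepts.
LAYERS = ("object", "place", "room", "building")


@dataclass
class LayeredSceneGraph:
    """Toy layered graph: each node belongs to one layer; edges are undirected."""

    nodes: dict = field(default_factory=dict)  # node_id -> layer name
    edges: set = field(default_factory=set)    # set of frozenset({a, b})

    def add_node(self, node_id, layer):
        if layer not in LAYERS:
            raise ValueError(f"unknown layer: {layer}")
        self.nodes[node_id] = layer

    def add_edge(self, a, b):
        if a not in self.nodes or b not in self.nodes:
            raise KeyError("both endpoints must be added as nodes first")
        if a == b:
            raise ValueError("self-loops are not allowed")
        self.edges.add(frozenset((a, b)))

    def neighbors(self, node_id, layer=None):
        """Return sorted neighbor ids, optionally restricted to one layer."""
        found = []
        for edge in self.edges:
            if node_id in edge:
                other = next(n for n in edge if n != node_id)
                if layer is None or self.nodes[other] == layer:
                    found.append(other)
        return sorted(found)


# Usage: a chair observed at a place, inside a room, inside a building.
graph = LayeredSceneGraph()
graph.add_node("chair_1", "object")
graph.add_node("place_1", "place")
graph.add_node("room_1", "room")
graph.add_node("building_1", "building")
graph.add_edge("chair_1", "place_1")
graph.add_edge("place_1", "room_1")
graph.add_edge("room_1", "building_1")
print(graph.neighbors("place_1"))
print(graph.neighbors("place_1", layer="object"))
```

Storing the layer on each node keeps queries such as "which objects are attached to this place" to a single filtered neighbor lookup. A real-time system would need considerably more, for example node attributes such as pose and semantic label, and incremental updates.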
