Modelling urban networks using Variational Autoencoders

Paper and Code

May 14, 2019

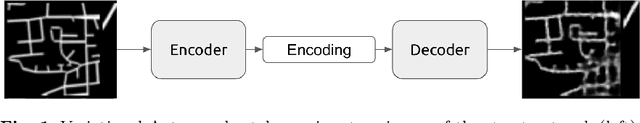

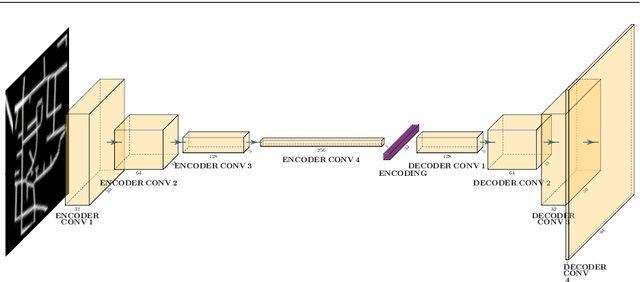

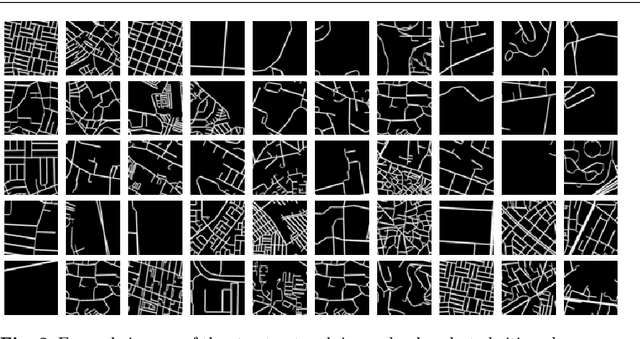

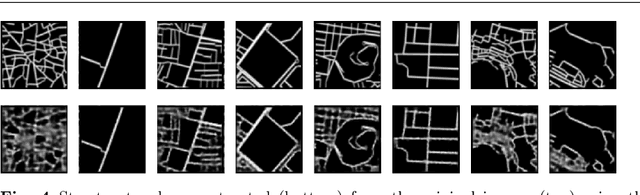

A long-standing question for urban and regional planners is how to describe urban patterns quantitatively. Cities' transport infrastructure, particularly their street networks, provides an invaluable source of information about the urban patterns generated by people's movements and interactions. With the increasing availability of street network datasets and recent advances in deep learning, we have an unprecedented opportunity to push urban modelling towards more data-driven and accurate models of urban form. In this study, we present our initial work on applying deep generative models to urban street network data to create spatially explicit urban models. We base our work on Variational Autoencoders (VAEs), deep generative models that have recently gained popularity for their ability to generate realistic images. Initial results show that VAEs can capture key high-level urban network metrics in low-dimensional vectors and can generate new urban forms whose complexity matches that of the cities in the street network data.
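The abstract does not specify the network architecture, so the following is only a minimal, untrained NumPy sketch of the general VAE mechanism it relies on, not the authors' model. It assumes, hypothetically, that each street network is rasterised to a 64x64 binary image and compressed into an 8-dimensional latent vector; the single-layer linear encoder and decoder, their random weights, and the random toy batch are all illustrative placeholders. It shows the encoder's Gaussian parameters, the reparameterisation trick, the loss (reconstruction error plus a KL term pulling the latent code towards a standard normal prior), and how a new layout is produced by decoding a sample drawn from that prior.

```python
import numpy as np

rng = np.random.default_rng(0)

IMG_SIDE = 64      # hypothetical: street network rasterised to a 64x64 binary image
LATENT_DIM = 8     # hypothetical: size of the low-dimensional latent vector
N_PIXELS = IMG_SIDE * IMG_SIDE

# Hypothetical, untrained single-layer encoder/decoder weights (no training loop here).
W_mu = rng.normal(0.0, 0.01, (N_PIXELS, LATENT_DIM))
W_logvar = rng.normal(0.0, 0.01, (N_PIXELS, LATENT_DIM))
W_dec = rng.normal(0.0, 0.01, (LATENT_DIM, N_PIXELS))
b_dec = np.zeros(N_PIXELS)


def encode(x):
    """Map flattened images (batch, N_PIXELS) to the mean and log-variance of q(z|x)."""
    return x @ W_mu, x @ W_logvar


def reparameterize(mu, logvar):
    """Sample z = mu + sigma * eps with eps ~ N(0, I) (the reparameterisation trick)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * logvar) * eps


def decode(z):
    """Map latent vectors back to per-pixel Bernoulli probabilities via a sigmoid."""
    logits = z @ W_dec + b_dec
    return 1.0 / (1.0 + np.exp(-logits))


def vae_loss(x):
    """Per-image negative ELBO terms: reconstruction BCE and KL(q(z|x) || N(0, I))."""
    mu, logvar = encode(x)
    z = reparameterize(mu, logvar)
    x_hat = np.clip(decode(z), 1e-7, 1 - 1e-7)  # avoid log(0)
    recon = -np.sum(x * np.log(x_hat) + (1 - x) * np.log(1 - x_hat), axis=1)
    kl = -0.5 * np.sum(1 + logvar - mu**2 - np.exp(logvar), axis=1)
    return recon, kl, z


# Toy batch of 4 random binary "street network" images (placeholder data, not real cities).
batch = (rng.random((4, N_PIXELS)) < 0.1).astype(float)
recon, kl, z = vae_loss(batch)
print(z.shape)  # (4, 8): each image is summarised by an 8-dimensional vector

# Generating a new layout: decode a sample drawn from the prior N(0, I).
new_image = decode(rng.standard_normal((1, LATENT_DIM))).reshape(IMG_SIDE, IMG_SIDE)
```

In a real setting the weights would be learned by minimising the mean of `recon + kl` with a deep-learning framework, and the encoder and decoder would typically be convolutional rather than single linear layers.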
