Modeling Inter-Speaker Relationship in XLNet for Contextual Spoken Language Understanding


Oct 28, 2019

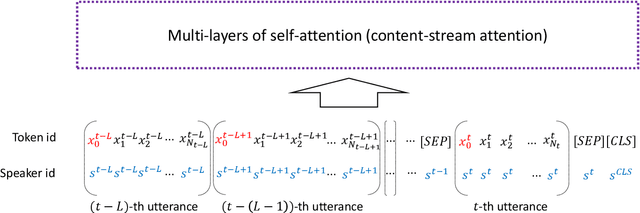

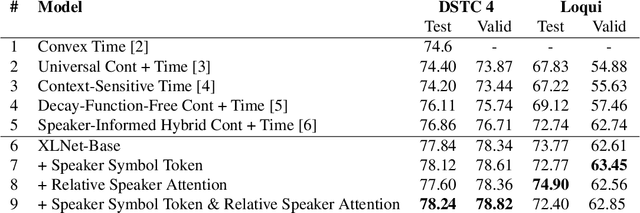

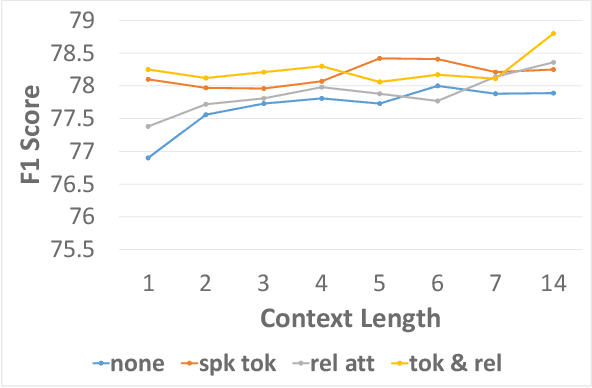

We propose two methods for capturing relevant history information in a multi-turn dialogue by modeling inter-speaker relationships for spoken language understanding (SLU). Our methods are tailored to, and therefore compatible with, XLNet, a state-of-the-art pretrained model, so we evaluated them in models built on top of XLNet. In our experiments, all of our models achieved higher accuracy than state-of-the-art contextual SLU models on two benchmark datasets. Analysis of the results showed that the proposed methods are effective at improving the SLU accuracy of XLNet. These methods for identifying important dialogue history should help alleviate ambiguity in SLU of the current utterance.

* submitted to ICASSP 2020
