Modeling and Optimization of Human-machine Interaction Processes via the Maximum Entropy Principle


Mar 17, 2019

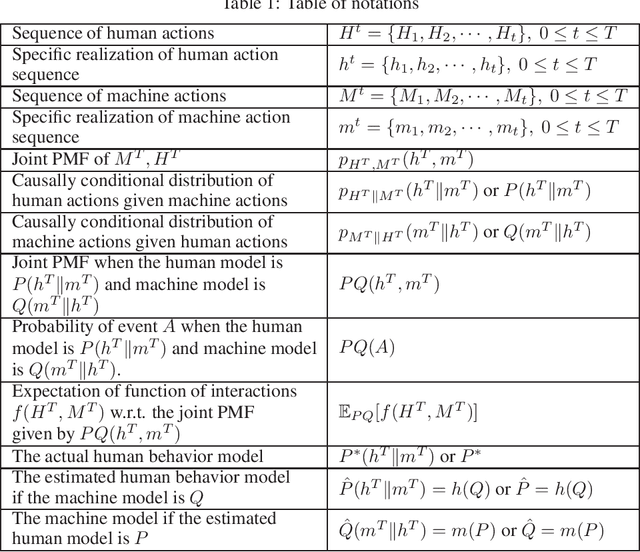

We propose a data-driven framework for modeling and optimizing human-machine interaction processes, e.g., systems aimed at assisting humans in decision-making or learning, workload allocation, and interactive advertising. This is a challenging problem for several reasons. First, human behavior is hard to model or infer, as it may reflect biases, long-term memory, and sensitivity to sequencing, i.e., transience and exponential complexity in the length of the interaction. Second, due to the interactive nature of such processes, the machine policy used to engage with a human may bias possible data-driven inferences. Finally, in choosing machine policies that optimize interaction rewards, one must, on the one hand, avoid being overly sensitive to error/variability in the estimated human model, and on the other, avoid being overly deterministic/predictable, which may result in poor human 'engagement' in the interaction. To meet these challenges, we propose a robust approach, based on the maximum entropy principle, which iteratively estimates human behavior and optimizes the machine policy: the Alternating Entropy-Reward Ascent (AREA) algorithm. We characterize AREA in terms of its space and time complexity and its convergence. We also provide an initial validation based on synthetic data generated by an established noisy nonlinear model for human decision-making.
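The tension between reward and predictability described above can be illustrated with a generic entropy-regularized choice over a finite set of machine actions. The sketch below is not the AREA algorithm, whose details are not given here; it only assumes a hypothetical vector of estimated rewards and a hypothetical `temperature` weight on entropy, and uses the standard fact that the distribution maximizing expected reward plus `temperature` times Shannon entropy is a softmax of the rewards.

```python
import math


def entropy(p):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(q * math.log(q) for q in p if q > 0)


def objective(p, rewards, temperature):
    """Expected reward plus temperature-weighted entropy of policy p."""
    expected_reward = sum(q * r for q, r in zip(p, rewards))
    return expected_reward + temperature * entropy(p)


def entropy_regularized_policy(rewards, temperature):
    """Distribution maximizing `objective` for the given rewards.

    The maximizer is softmax(rewards / temperature). `rewards` and
    `temperature` are illustrative assumptions, not quantities
    defined in the abstract.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    top = max(rewards)  # subtract the max for numerical stability
    weights = [math.exp((r - top) / temperature) for r in rewards]
    total = sum(weights)
    return [w / total for w in weights]


if __name__ == "__main__":
    estimated_rewards = [1.0, 0.5, 0.2]  # hypothetical reward estimates
    for temperature in (0.1, 0.5, 2.0):
        policy = entropy_regularized_policy(estimated_rewards, temperature)
        print(temperature, [round(q, 3) for q in policy], round(entropy(policy), 3))
```

A small `temperature` yields a nearly deterministic policy that concentrates on the highest estimated reward, while a larger value spreads probability across actions, making the policy less predictable and less sensitive to errors in the reward estimates.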
