Model Selection via MCRB Optimization

Apr 05, 2025

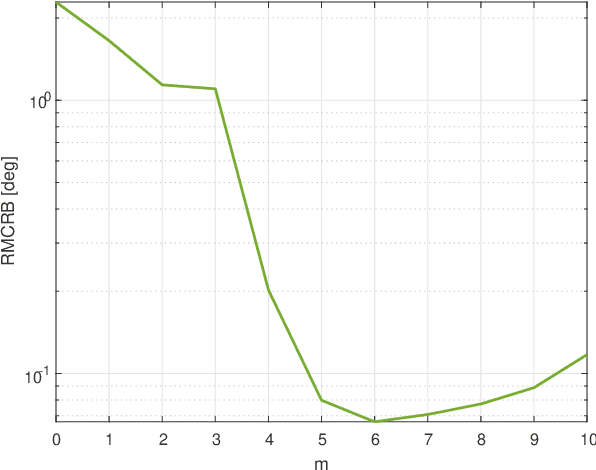

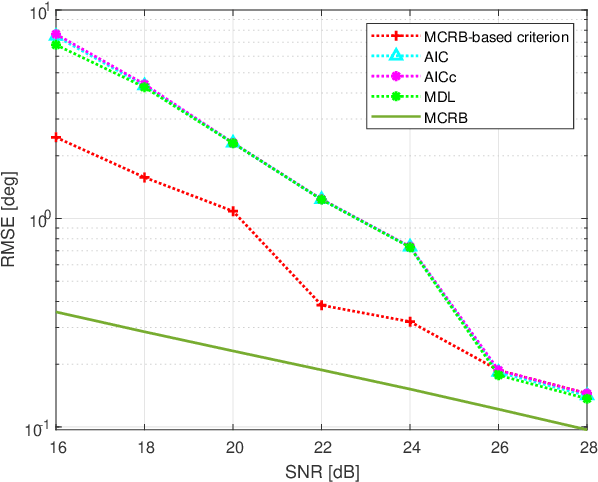

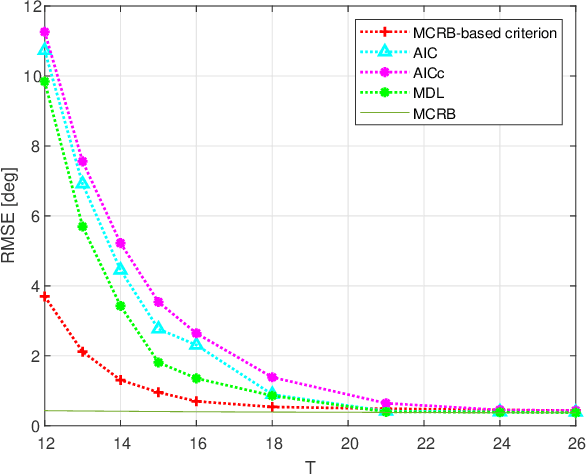

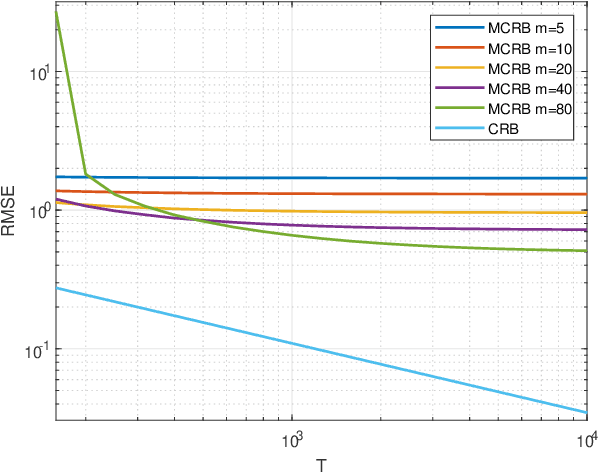

In many estimation-theory and statistical-analysis problems, the true data model is unknown or only partially known. To describe the model generating the data, parameterized models of varying complexity are used. This raises the question of which model best approximates the true one, a problem known as model selection. In machine learning it arises, for example, when choosing a neural network architecture or the number of model parameters. In this paper, we propose a new model selection criterion based on the misspecified Cramér-Rao bound (MCRB) on mean-squared-error (MSE) performance. Among the candidate models, the criterion selects the one for which the bound on the MSE of the estimated parameters is lowest; its goal is to minimize the MSE with respect to (w.r.t.) the choice of model. The criterion is applied to direction-of-arrival (DOA) estimation under unknown clutter/interference and to spectrum estimation of an auto-regressive (AR) model. It is shown to capture the bias-variance trade-off and to outperform the Akaike information criterion (AIC), its finite-sample corrected version (AICc), and the minimum description length (MDL) criterion in terms of MSE.
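To make the bias-variance trade-off concrete, here is a minimal sketch under assumptions that are ours, not the paper's: a linear Gaussian model with known noise variance σ², candidate models that keep only the first k columns of a polynomial basis, and the intercept θ[0] as the parameter of interest shared by all candidates. Under misspecification, an MCRB-type bound on the MSE w.r.t. the true parameter is the sandwich covariance A⁻¹BA⁻¹ around the pseudo-true parameter plus the squared bias; in this linear Gaussian case the sandwich reduces to σ²(H_kᵀH_k)⁻¹. The helper names `mcrb_mse_intercept` and `select_order` are hypothetical, and the sketch is an oracle: it uses the true θ, which a practical criterion cannot. How the paper evaluates the bound in the DOA and AR settings is not described here.

```python
import numpy as np


def mcrb_mse_intercept(H_full, theta_true, sigma2, k):
    """Hypothetical oracle MCRB-style bound on the MSE of theta[0] when the
    fitted model keeps only the first k columns of H_full (assumption:
    linear Gaussian model with known noise variance sigma2)."""
    H_k = H_full[:, :k]
    s = H_full @ theta_true  # noise-free mean of the true model
    # Pseudo-true parameter: the KL-divergence minimizer, which for Gaussian
    # noise with known variance is the least-squares projection of s onto H_k.
    theta0, *_ = np.linalg.lstsq(H_k, s, rcond=None)
    # Sandwich covariance A^{-1} B A^{-1}; for this model it equals
    # sigma2 * (H_k^T H_k)^{-1} because H_k^T (s - H_k theta0) = 0.
    cov = sigma2 * np.linalg.inv(H_k.T @ H_k)
    bias = theta0[0] - theta_true[0]  # misspecification bias on theta[0]
    return cov[0, 0] + bias ** 2      # variance + squared bias


def select_order(H_full, theta_true, sigma2, orders):
    """Pick the candidate order with the lowest bound (hypothetical helper)."""
    bounds = {k: mcrb_mse_intercept(H_full, theta_true, sigma2, k) for k in orders}
    return min(bounds, key=bounds.get), bounds


# Usage: the true model is a degree-5 polynomial on [0, 1] (illustrative values).
t = np.linspace(0.0, 1.0, 50)
H_full = np.vander(t, 6, increasing=True)  # columns 1, t, t^2, ..., t^5
theta_true = np.array([1.0, 0.0, 0.0, 0.0, 0.0, 1.0])
for sigma2 in (1e-12, 1e-2, 10.0):
    best, bounds = select_order(H_full, theta_true, sigma2, range(1, 7))
    print(f"sigma2={sigma2:g}: selected k={best}")
```

With very low noise the squared-bias term dominates and the full model (k = 6) is selected; with high noise the variance term dominates and the lowest-order model (k = 1) wins, which illustrates the trade-off the criterion is meant to balance.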