Model-Aware Regularization For Learning Approaches To Inverse Problems

Jun 18, 2020

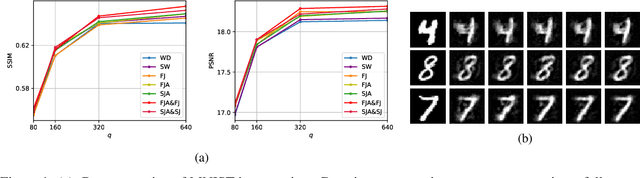

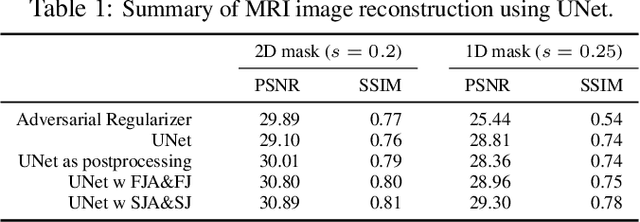

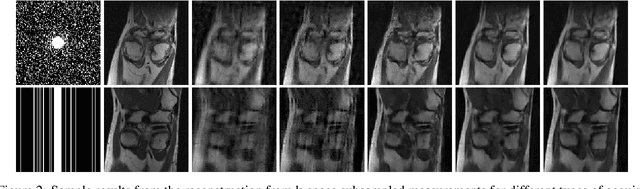

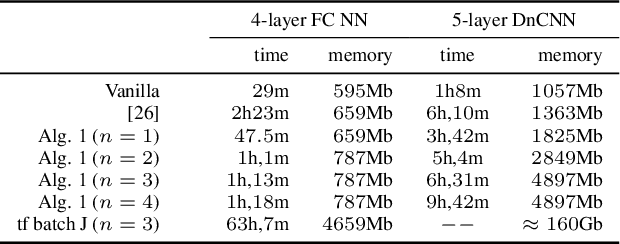

There are various inverse problems, including reconstruction problems arising in medical imaging, where the forward operator that maps the variables of interest to the observations is often known. It is therefore natural to ask whether this knowledge of the forward operator can be exploited in the deep learning approaches increasingly used to solve inverse problems. In this paper, we provide one such way via an analysis of the generalisation error of deep learning methods applicable to inverse problems. In particular, by building on the algorithmic robustness framework, we offer a generalisation error bound that encapsulates key ingredients of the learning problem: the complexity of the data space, the size of the training set, the Jacobian of the deep neural network, and the Jacobian of the composition of the forward operator with the neural network. We then propose a 'plug-and-play' regulariser that leverages knowledge of the forward map to improve the generalisation of the network. We also propose a new method for tightly upper bounding the Lipschitz constants of the relevant functions that is much more computationally efficient than existing ones. We demonstrate the efficacy of our model-aware regularised deep learning algorithms against other state-of-the-art approaches on inverse problems involving various sub-sampling operators, such as those used in the classical compressed sensing setup and in accelerated Magnetic Resonance Imaging (MRI).
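To make the two Jacobians named in the abstract concrete, the following is a minimal sketch of a penalty that sums the squared Frobenius norms of the Jacobian of a network f and of the composition of a sub-sampling forward operator A with f. It is an illustration under stated assumptions, not the regulariser proposed in the paper: the penalty form, the finite-difference Jacobian, and all names (`subsample`, `tiny_network`, `jacobian_frobenius_sq`, `model_aware_penalty`, `lam_f`, `lam_Af`) are hypothetical.

```python
import math


def subsample(x, keep):
    """Forward operator A (assumed): keep only the entries of x at indices `keep`."""
    return [x[i] for i in keep]


def tiny_network(y, W1, W2):
    """Hypothetical two-layer tanh network f mapping observations y to a reconstruction."""
    hidden = [math.tanh(sum(w * v for w, v in zip(row, y))) for row in W1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in W2]


def jacobian_frobenius_sq(fn, y, eps=1e-6):
    """Squared Frobenius norm of the Jacobian of fn at y, via central differences."""
    total = 0.0
    for j in range(len(y)):
        up = list(y)
        up[j] += eps
        down = list(y)
        down[j] -= eps
        # Column j of the Jacobian: derivative of every output w.r.t. y[j].
        column = [(a - b) / (2 * eps) for a, b in zip(fn(up), fn(down))]
        total += sum(c * c for c in column)
    return total


def model_aware_penalty(y, W1, W2, keep, lam_f=1.0, lam_Af=1.0):
    """Illustrative penalty: weighted Jacobian norms of f and of A composed with f."""
    def f(v):
        return tiny_network(v, W1, W2)

    def A_of_f(v):
        return subsample(f(v), keep)

    return (lam_f * jacobian_frobenius_sq(f, y)
            + lam_Af * jacobian_frobenius_sq(A_of_f, y))


# Example: 2 observations, 3 reconstructed values, A keeps entries 0 and 2.
W1 = [[1.0, 0.0], [0.0, 1.0]]
W2 = [[1.0, 0.0], [0.0, 2.0], [3.0, 0.0]]
penalty = model_aware_penalty([0.0, 0.0], W1, W2, keep=[0, 2])
```

In a training loop, a term of this kind would be added to the data-fit loss, with automatic differentiation replacing the finite differences. Because a sub-sampling operator only discards rows of the Jacobian of f, the second term in this sketch can never exceed the first when the weights are equal.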
