Mixed Prototype Consistency Learning for Semi-supervised Medical Image Segmentation

Apr 16, 2024

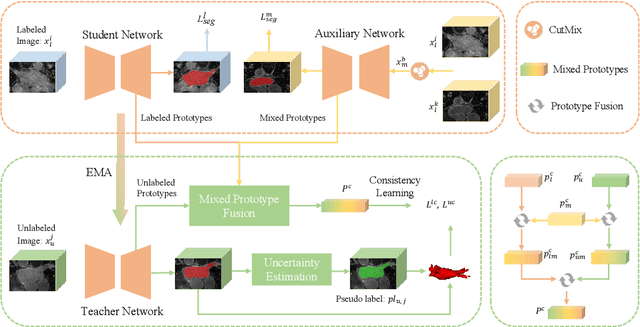

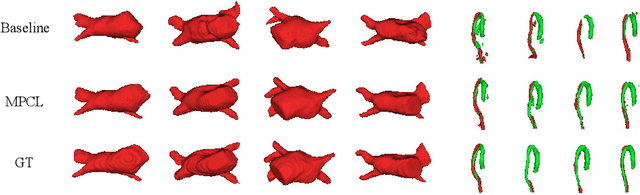

Prototype learning has recently emerged in semi-supervised medical image segmentation and achieved remarkable performance. However, the scarcity of labeled data limits the expressiveness of the prototypes in previous methods, so the prototypes may not fully represent the embedding of each class. To address this problem, we propose the Mixed Prototype Consistency Learning (MPCL) framework, which consists of a Mean Teacher and an auxiliary network. The Mean Teacher generates prototypes for labeled and unlabeled data, while the auxiliary network produces additional prototypes for mixed data created with CutMix. Through prototype fusion, the mixed prototypes provide extra semantic information to both the labeled and the unlabeled prototypes. The two enhanced prototypes are then fused into high-quality global prototypes for each class, which optimizes the distribution of the hidden embeddings used in consistency learning. Extensive experiments on the left atrium and type B aortic dissection datasets show that MPCL outperforms previous state-of-the-art approaches, confirming the effectiveness of the framework. The code will be released soon.
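The abstract does not give the exact formulas, so the following is only a minimal NumPy sketch of the building blocks it names, not the authors' implementation. It assumes that class prototypes are obtained by masked average pooling of per-pixel embeddings and that prototype fusion is a simple convex combination with weight `alpha`; both are illustrative assumptions, and all function names (`class_prototypes`, `fuse`, `cutmix`, `ema_update`, `global_prototypes`) are hypothetical.

```python
import numpy as np

def class_prototypes(features, probs):
    """Masked average pooling (assumed): one prototype per class.

    features: (N, D) per-pixel embeddings
    probs:    (N, C) one-hot labels or soft predictions
    returns:  (C, D) class prototypes
    """
    # Normalize each class column so its weights sum to (almost) one.
    weights = probs / (probs.sum(axis=0, keepdims=True) + 1e-8)
    return weights.T @ features

def fuse(proto_a, proto_b, alpha=0.5):
    """Hypothetical fusion rule: convex combination of two prototype sets."""
    return alpha * proto_a + (1.0 - alpha) * proto_b

def cutmix(x_a, x_b, box):
    """Paste the region box = (r0, r1, c0, c1) of x_b into a copy of x_a."""
    r0, r1, c0, c1 = box
    mixed = x_a.copy()
    mixed[r0:r1, c0:c1] = x_b[r0:r1, c0:c1]
    return mixed

def ema_update(teacher, student, decay=0.99):
    """Mean Teacher step: teacher weights track an EMA of student weights."""
    return {k: decay * teacher[k] + (1.0 - decay) * student[k] for k in teacher}

def global_prototypes(p_labeled, p_unlabeled, p_mixed, alpha=0.5):
    """Enhance labeled and unlabeled prototypes with the mixed ones,
    then fuse the two enhanced sets into global prototypes."""
    enhanced_labeled = fuse(p_labeled, p_mixed, alpha)
    enhanced_unlabeled = fuse(p_unlabeled, p_mixed, alpha)
    return fuse(enhanced_labeled, enhanced_unlabeled, alpha)
```

In this sketch the mixed prototypes would come from the auxiliary network's embeddings of a `cutmix` image, while the labeled and unlabeled prototypes would come from the Mean Teacher; how the real method weights or learns the fusion is not stated in the abstract.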
