Misclassification-Aware Gaussian Smoothing improves Robustness against Domain Shifts

Paper and Code

Apr 02, 2021

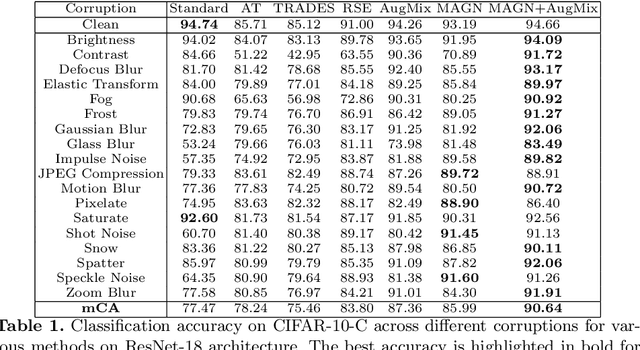

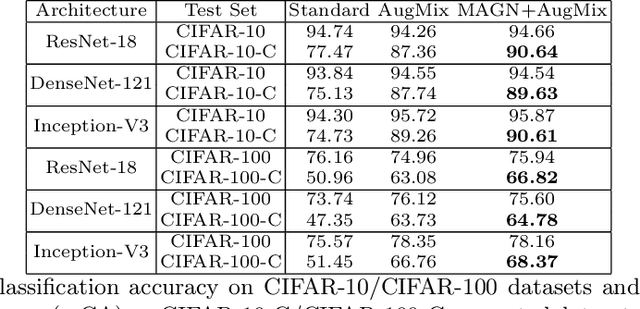

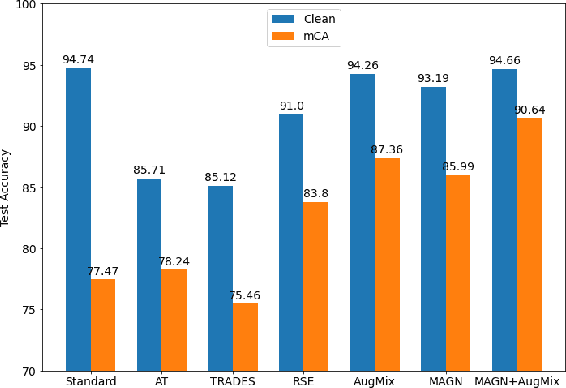

Deep neural networks achieve high prediction accuracy when the train and test distributions coincide. In practice, however, various types of corruptions cause the test distribution to deviate from this setup, and performance can degrade heavily. Only a few methods address generalization in the presence of unexpected domain shifts observed during deployment. In this paper, a misclassification-aware Gaussian smoothing approach is presented to improve the robustness of image classifiers against a variety of corruptions while maintaining clean accuracy. The intuition behind our proposed misclassification-aware objective is revealed through bounds on the local loss deviation in the small-noise regime. When our method is coupled with additional data augmentations, it is empirically shown to improve upon the state of the art in robustness and uncertainty calibration on several image classification tasks.
