MineNav: An Expandable Synthetic Dataset Based on Minecraft for Aircraft Visual Navigation

Aug 19, 2020

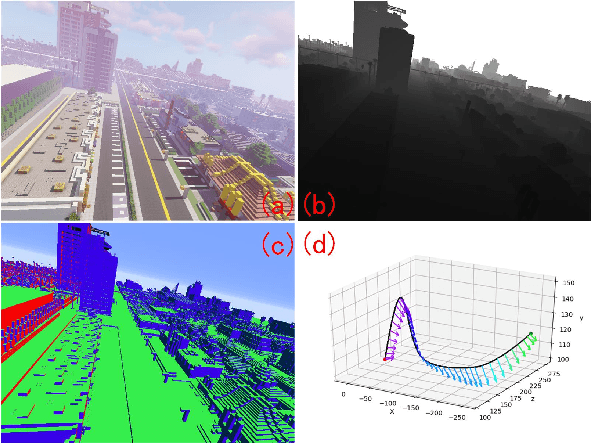

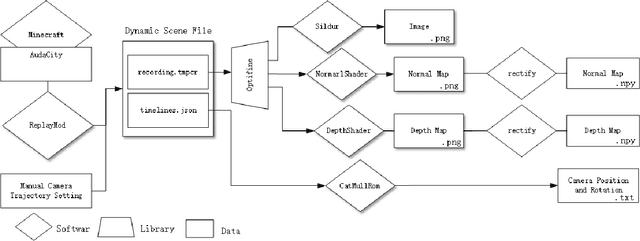

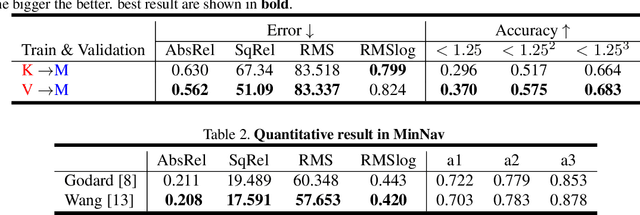

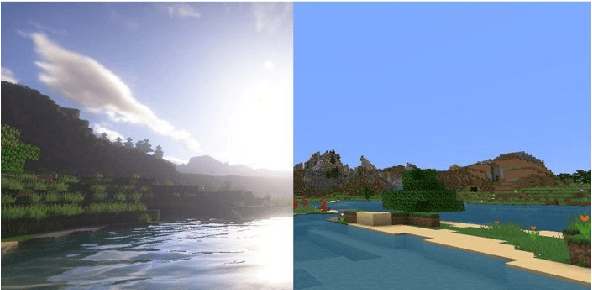

We propose a simple method for generating high-quality synthetic datasets based on the game Minecraft. Each dataset includes rendered images, depth maps, surface normal maps, and 6-DoF camera trajectories. The ground truth is exact because it is generated by a plug-in program. Thanks to the game's large community, an extremely large number of 3D open-world environments is available: users can find suitable scenes to capture and build datasets from them, or build their own scenes in-game. As a result, we do not need to worry about overfitting caused by datasets that are too small. In addition, there is a shader community whose work we can use to minimize the data bias between rendered and real images. Finally, we currently provide three tools that generate data for depth prediction, surface normal prediction, and visual odometry; users can also develop plug-in modules for other vision tasks, such as segmentation or optical flow prediction.
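To make the visual odometry use case concrete, the following is a minimal sketch of how a relative camera motion could be derived from two 6-DoF poses along a trajectory. The `Pose6DoF` record, its field names, the radian units, and the Z-Y-X (yaw, pitch, roll) rotation convention are all assumptions made for illustration; they are not the dataset's actual file format or the authors' tooling.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose6DoF:
    """Hypothetical 6-DoF camera pose: position plus roll/pitch/yaw in radians."""
    x: float
    y: float
    z: float
    roll: float
    pitch: float
    yaw: float


def rotation_matrix(pose):
    """Camera-to-world rotation, assuming R = Rz(yaw) * Ry(pitch) * Rx(roll)."""
    cr, sr = math.cos(pose.roll), math.sin(pose.roll)
    cp, sp = math.cos(pose.pitch), math.sin(pose.pitch)
    cy, sy = math.cos(pose.yaw), math.sin(pose.yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def transpose(m):
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def matvec(m, v):
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def relative_pose(a, b):
    """Motion from frame a to frame b, expressed in frame a's coordinates.

    Returns (R_rel, t_rel) with R_rel = R_a^T R_b and t_rel = R_a^T (t_b - t_a).
    """
    r_a_inv = transpose(rotation_matrix(a))  # inverse of a rotation is its transpose
    r_rel = matmul(r_a_inv, rotation_matrix(b))
    t_rel = matvec(r_a_inv, [b.x - a.x, b.y - a.y, b.z - a.z])
    return r_rel, t_rel


# Two consecutive frames with the same heading, one unit apart along world y.
frame_a = Pose6DoF(0.0, 0.0, 0.0, 0.0, 0.0, math.pi / 2)
frame_b = Pose6DoF(0.0, 1.0, 0.0, 0.0, 0.0, math.pi / 2)
r_rel, t_rel = relative_pose(frame_a, frame_b)
```

Under these assumptions, pairs of consecutive frames and their relative poses would form the supervision targets for a visual odometry model, while the per-frame depth and surface normal maps would serve the other two tasks directly.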
