Metrics for Evaluating Dialogue Strategies in a Spoken Language System

Paper and Code

Dec 17, 1996

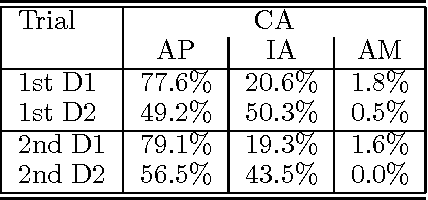

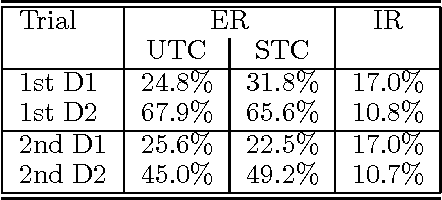

In this paper, we describe a set of metrics for evaluating different dialogue management strategies in an implemented real-time spoken language system. The proposed metrics offer insight into how particular dialogue management choices affect the overall quality of the man-machine dialogue. The evaluation uses established metrics: transaction success, the contextual appropriateness of system answers, and the number of normal and correction turns in a dialogue. We also define a new metric, implicit recovery, which measures the ability of a dialogue manager to deal with errors introduced by different levels of analysis. We report evaluation data from several experiments, and we compare two approaches to dialogue repair strategies using the set of metrics we argue for.
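To make the four metrics concrete, the following is a minimal sketch of how they might be computed from hand-annotated dialogues. It is not the paper's implementation: the abstract gives no formulas, so the `Turn` and `Dialogue` annotation fields, the function names, and the ratio-based definitions are all assumptions made for illustration. In particular, implicit recovery is assumed here to be the share of turns affected by an upstream recognition or understanding error for which the system answer was nevertheless judged appropriate.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Turn:
    # Hypothetical per-turn annotations; not the paper's annotation scheme.
    appropriate: bool      # system answer judged contextually appropriate
    is_correction: bool    # turn spent repairing an earlier misunderstanding
    upstream_error: bool   # recognition/understanding error on the user input


@dataclass
class Dialogue:
    turns: List[Turn]
    task_completed: bool   # whether the user's transaction succeeded


def ratio(numerator: int, denominator: int) -> float:
    """Safe division that returns 0.0 when there is nothing to count."""
    return numerator / denominator if denominator else 0.0


def all_turns(dialogues: List[Dialogue]) -> List[Turn]:
    return [turn for dialogue in dialogues for turn in dialogue.turns]


def transaction_success(dialogues: List[Dialogue]) -> float:
    """Fraction of dialogues in which the task was completed."""
    return ratio(sum(d.task_completed for d in dialogues), len(dialogues))


def contextual_appropriateness(dialogues: List[Dialogue]) -> float:
    """Fraction of system answers judged appropriate in context."""
    turns = all_turns(dialogues)
    return ratio(sum(t.appropriate for t in turns), len(turns))


def correction_turn_rate(dialogues: List[Dialogue]) -> float:
    """Fraction of turns that are correction turns (the rest are normal)."""
    turns = all_turns(dialogues)
    return ratio(sum(t.is_correction for t in turns), len(turns))


def implicit_recovery(dialogues: List[Dialogue]) -> float:
    """Assumed definition: among turns with an upstream error, the
    fraction where the system answer was still appropriate."""
    error_turns = [t for t in all_turns(dialogues) if t.upstream_error]
    return ratio(sum(t.appropriate for t in error_turns), len(error_turns))
```

Computing all four functions on the same annotated corpus for each repair strategy would give a side-by-side comparison of the kind the abstract describes, under the assumed definitions above.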
