MementoML: Performance of selected machine learning algorithm configurations on OpenML100 datasets


Aug 30, 2020

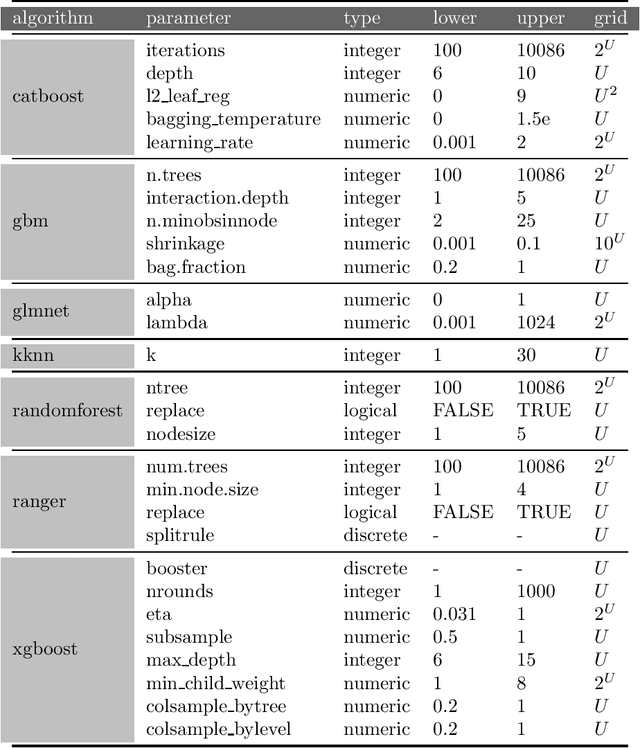

Finding optimal hyperparameters for a machine learning algorithm can often significantly improve its performance. But how can they be chosen in a time-efficient way? In this paper we present a protocol for generating benchmark data that describes the performance of different ML algorithms under different hyperparameter configurations. Data collected in this way is used to study the factors influencing an algorithm's performance. This collection was prepared for the EPP study. We tested algorithm performance on a dense grid of hyperparameters. The datasets and hyperparameters to be tested were chosen before any algorithm was run and were not changed afterwards. This differs from the approach usually taken in hyperparameter tuning, where the selection of candidate hyperparameters depends on previously obtained results. Fixing the selection in advance, however, allows a systematic analysis of how sensitive performance is to individual hyperparameters. The result is a comprehensive dataset of benchmarks that we would like to share. We hope that the computed and collected results may be helpful to other researchers. This paper describes how the data was collected. It provides benchmarks of 7 popular machine learning algorithms on 39 OpenML datasets. The detailed data forming this benchmark are available at: https://www.kaggle.com/mi2datalab/mementoml.
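The distinction between a grid fixed in advance and adaptive tuning can be illustrated with a minimal sketch. Everything below is an assumption made for illustration: the hyperparameter names and values, the dataset labels, and the `toy_score` function (a stand-in for training and evaluating a real model) are hypothetical and are not taken from the paper or its benchmark.

```python
import itertools

# Hypothetical hyperparameter grid; names and values are illustrative only.
GRID = {
    "learning_rate": [0.01, 0.1, 0.3],
    "max_depth": [2, 4, 8],
}


def fixed_grid(grid):
    """Enumerate every configuration up front, in a deterministic order."""
    names = sorted(grid)
    value_lists = [grid[name] for name in names]
    return [dict(zip(names, values)) for values in itertools.product(*value_lists)]


def toy_score(dataset, config):
    """Stand-in for fitting and evaluating a model; not a real benchmark."""
    return 1.0 - abs(config["learning_rate"] - 0.1) - 0.01 * abs(config["max_depth"] - 4)


def run_benchmark(configs, datasets, score_fn):
    """Evaluate every pre-selected configuration on every dataset.

    The list of configurations never changes in response to earlier scores,
    which is what separates this from adaptive hyperparameter tuning.
    """
    results = []
    for dataset in datasets:
        for config in configs:
            results.append({"dataset": dataset, **config, "score": score_fn(dataset, config)})
    return results


configs = fixed_grid(GRID)
results = run_benchmark(configs, ["dataset_a", "dataset_b"], toy_score)
best = max(results, key=lambda row: row["score"])
```

Because every configuration is evaluated on every dataset, the resulting table can be sliced along any single hyperparameter to examine sensitivity, which an adaptive search would not guarantee.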
