Manifold Free Riemannian Optimization

Paper and Code

Sep 07, 2022

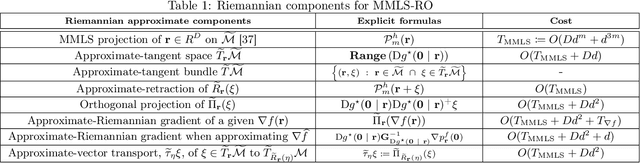

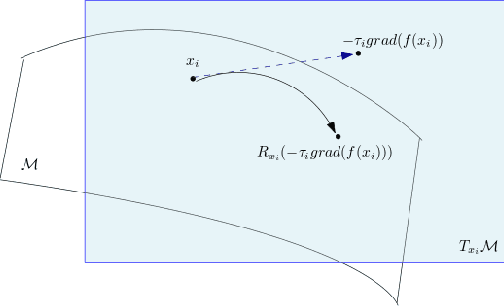

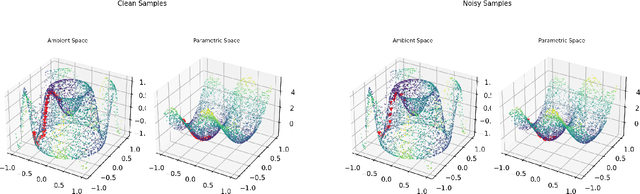

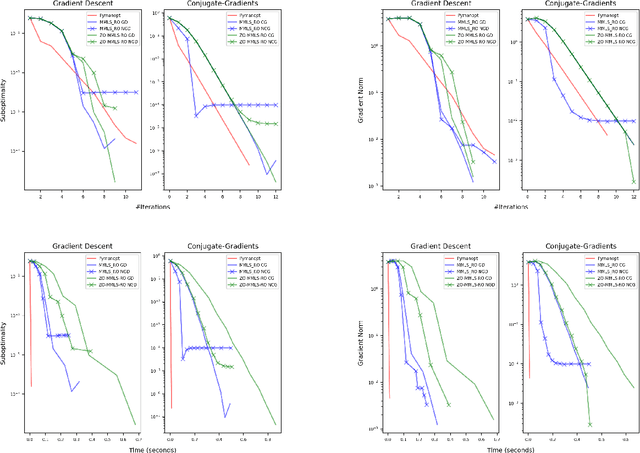

Riemannian optimization is a principled framework for solving optimization problems where the desired optimum is constrained to a smooth manifold $\mathcal{M}$. Algorithms designed in this framework usually require some geometrical description of the manifold, which typically includes tangent spaces, retractions, and gradients of the cost function. However, in many cases, only a subset of these elements (or none at all) can be accessed, due to lack of information or intractability. In this paper, we propose a novel approach that can perform approximate Riemannian optimization in such cases, where the constraining manifold is a submanifold of $\mathbb{R}^{D}$. At a bare minimum, our method requires only a noiseless sample set of the cost function $(\mathbf{x}_{i}, y_{i})\in \mathcal{M} \times \mathbb{R}$ and the intrinsic dimension of the manifold $\mathcal{M}$. Using the samples, and utilizing the Manifold-MLS framework (Sober and Levin 2020), we construct approximations of the missing components that enjoy provable guarantees, and we analyze their computational costs. If some of the components are given analytically (e.g., if the cost function and its gradient are given explicitly, or if the tangent spaces can be computed), the algorithm can be easily adapted to use the exact expressions instead of the approximations. We analyze the global convergence of Riemannian gradient-based methods using our approach, and we demonstrate empirically the strength of this method, together with a conjugate-gradient type method based on similar principles.
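For orientation, the components named in the abstract can be placed in the textbook gradient iteration for a submanifold of $\mathbb{R}^{D}$ with the induced metric. This is a sketch of the standard formulation, not necessarily the exact construction used in the paper. The symbols $\bar{f}$ (a smooth extension of the cost function to a neighborhood of $\mathcal{M}$), $P_{T_{x}\mathcal{M}}$ (orthogonal projection onto the tangent space at $x$), $R_{x}$ (a retraction at $x$), and $\alpha_{k}$ (a step size) are notation assumed here for illustration.

$$
\operatorname{grad} f(x) = P_{T_{x}\mathcal{M}}\,\nabla \bar{f}(x),
\qquad
x_{k+1} = R_{x_{k}}\!\bigl(-\alpha_{k}\,\operatorname{grad} f(x_{k})\bigr).
$$

In the sample-based setting described above, each of the three ingredients (the tangent space projection, the gradient, and the retraction) that is not available analytically would be replaced by an approximation built from the samples $(\mathbf{x}_{i}, y_{i})$, while any ingredient that is known exactly can be used as is.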
