Making a Spiking Net Work: Robust brain-like unsupervised machine learning

Aug 02, 2022

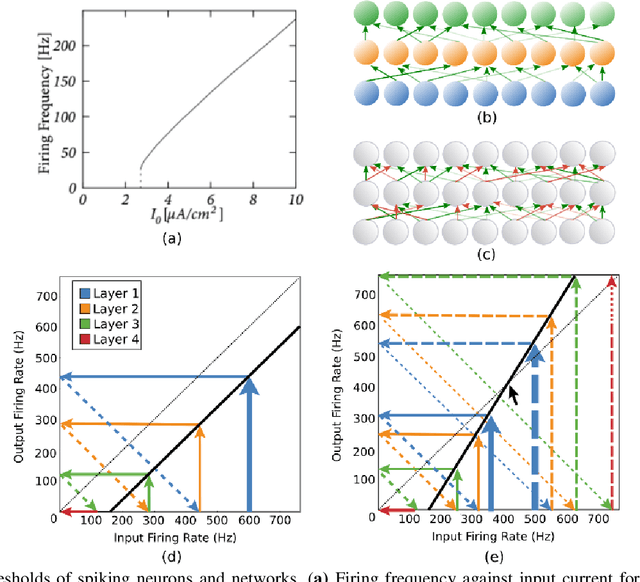

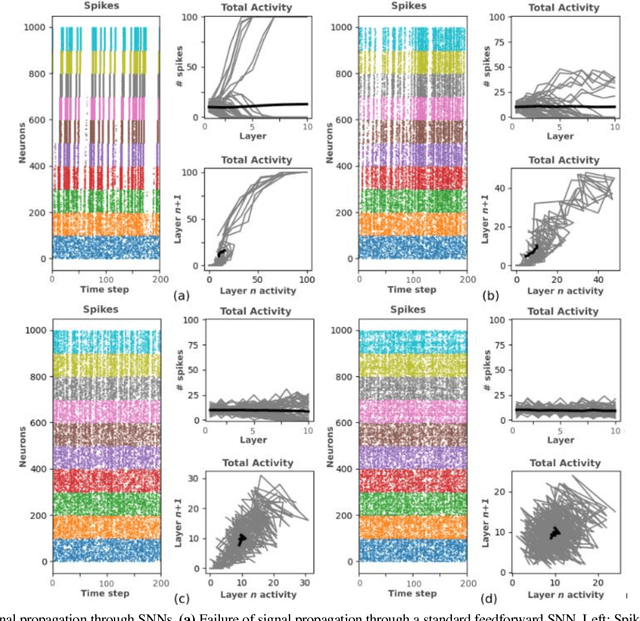

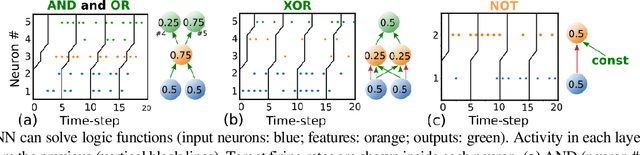

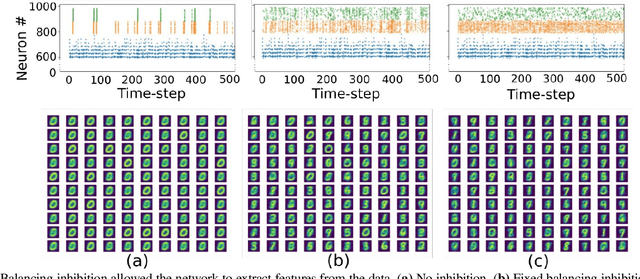

The surge in interest in Artificial Intelligence (AI) over the past decade has been driven almost exclusively by advances in Artificial Neural Networks (ANNs). While ANNs set state-of-the-art performance for many previously intractable problems, they require large amounts of data and computational resources for training. Because they employ supervised learning, they typically need the correctly labeled response for every training example, which limits their scalability in real-world domains. Spiking Neural Networks (SNNs) are an alternative to ANNs that use more brain-like artificial neurons and can use unsupervised learning to discover recognizable features in the input data without knowing correct responses. SNNs, however, struggle with dynamical stability and cannot match the accuracy of ANNs. Here we show how an SNN can overcome many of the shortcomings that have been identified in the literature, including offering a principled solution to the vanishing spike problem, to outperform all existing shallow SNNs and equal the performance of an ANN. It accomplishes this while using unsupervised learning with unlabeled data and only 1/50th of the training epochs (labeled data is used only for a final simple linear readout layer). This result makes SNNs a viable new method for fast, accurate, efficient, explainable, and re-deployable machine learning with unlabeled datasets.
