Maintaining AUC and $H$-measure over time

Paper and Code

Dec 12, 2021

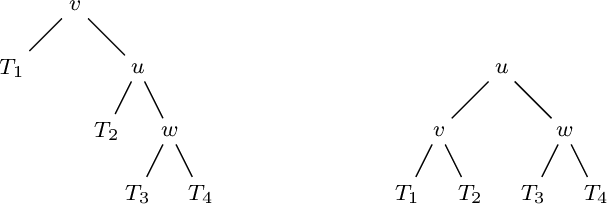

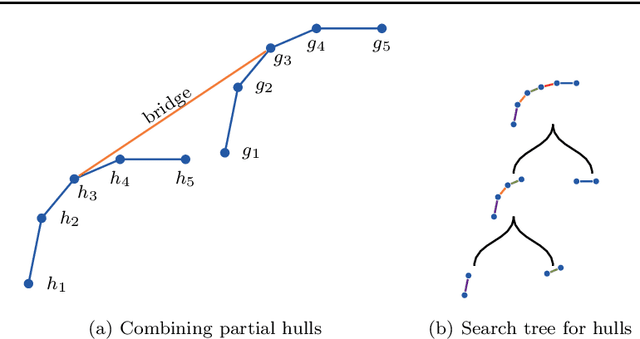

Measuring the performance of a classifier is a vital task in machine learning. The running time of an algorithm that computes the measure plays a very small role in an offline setting, for example, when the classifier is being developed by a researcher. However, the running time becomes more crucial if our goal is to monitor the performance of a classifier over time. In this paper we study three algorithms for maintaining two measures. The first algorithm maintains area under the ROC curve (AUC) under addition and deletion of data points in $O(\log n)$ time. This is done by maintaining the data points sorted in a self-balanced search tree. In addition, we augment the search tree that allows us to query the ROC coordinates of a data point in $O(\log n)$ time. In doing so we are able to maintain AUC in $O(\log n)$ time. Our next two algorithms involve in maintaining $H$-measure, an alternative measure based on the ROC curve. Computing the measure is a two-step process: first we need to compute a convex hull of the ROC curve, followed by a sum over the convex hull. We demonstrate that we can maintain the convex hull using a minor modification of the classic convex hull maintenance algorithm. We then show that under certain conditions, we can compute the $H$-measure exactly in $O(\log^2 n)$ time, and if the conditions are not met, then we can estimate the $H$-measure in $O((\log n + \epsilon^{-1})\log n)$ time. We show empirically that our methods are significantly faster than the baselines.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge