Machine learning moment closure models for the radiative transfer equation III: enforcing hyperbolicity and physical characteristic speeds

Paper and Code

Sep 02, 2021

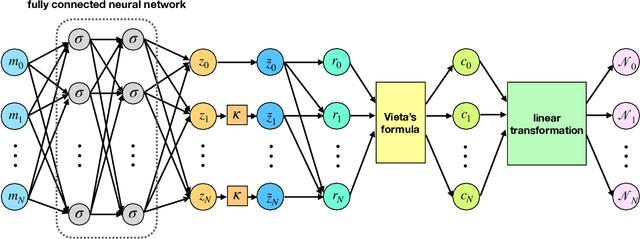

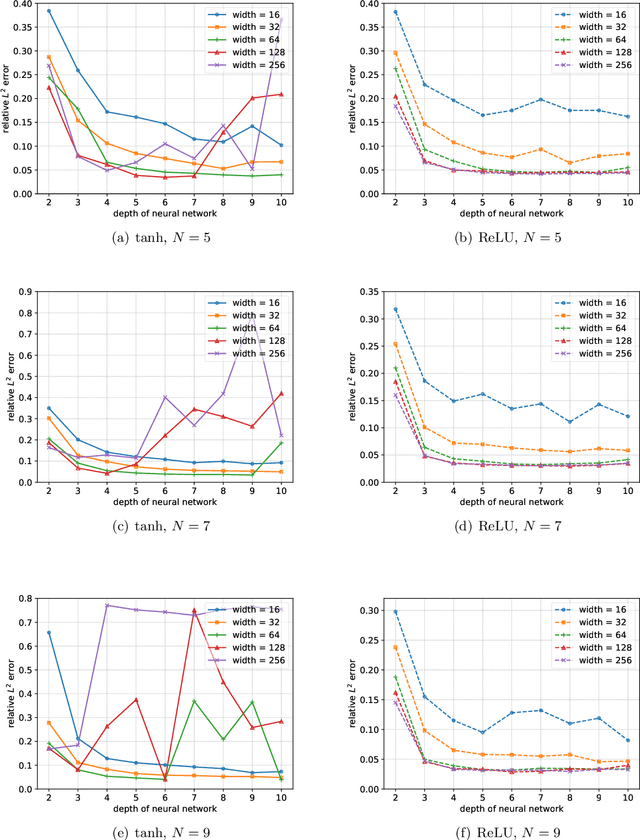

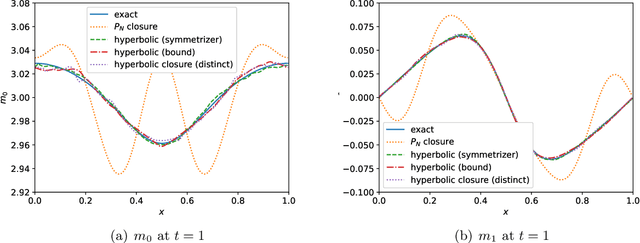

This is the third paper in a series in which we develop machine learning (ML) moment closure models for the radiative transfer equation (RTE). In our previous work \cite{huang2021gradient}, we proposed an approach that learns the gradient of the unclosed high-order moment, which performs much better than both learning the moment itself and the conventional $P_N$ closure. However, while this ML moment closure is more accurate, it cannot guarantee hyperbolicity and has issues with long-time stability. In our second paper \cite{huang2021hyperbolic}, we identified a symmetrizer that leads to conditions under which the gradient-based ML closure is symmetrizable hyperbolic and stable over long times. The limitation of this approach is that, in practice, the highest moment can only be related to four or fewer lower moments. In this paper, we propose a new method to enforce the hyperbolicity of the ML closure model. Motivated by the observation that the coefficient matrix of the closure system is a lower Hessenberg matrix, we relate its eigenvalues to the roots of an associated polynomial. We design two new neural network architectures based on this relation. The ML closure model resulting from the first neural network is weakly hyperbolic and guarantees physical characteristic speeds, i.e., the eigenvalues are bounded by the speed of light. The second model is strictly hyperbolic but does not guarantee the boundedness of the eigenvalues. Several benchmark tests, including the Gaussian source problem and the two-material problem, demonstrate the good accuracy, stability and generalizability of our hyperbolic ML closure model.
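
The relation between a lower Hessenberg matrix and an associated polynomial can be illustrated with a small numerical sketch. It is not the construction used in the paper, only a generic linear-algebra check under stated assumptions: the matrix `A` below is a random example (not a closure system), its superdiagonal entries are assumed nonzero, and the function name `associated_polynomial` is hypothetical. Writing an eigenvector as $v = (q_0(\lambda), \dots, q_{n-1}(\lambda))$ with $q_0 = 1$, each row of $A v = \lambda v$ except the last determines the next $q_{i+1}$, and the last row leaves a polynomial $q_n$ whose roots are the eigenvalues of $A$.

```python
import numpy as np
from numpy.polynomial import Polynomial


def associated_polynomial(A):
    """Build q_n for a lower Hessenberg matrix A (hypothetical helper).

    Assumes every superdiagonal entry A[i, i+1] is nonzero.
    """
    n = A.shape[0]
    lam = Polynomial([0.0, 1.0])  # the monomial lambda
    q = [Polynomial([1.0])]       # q_0 = 1
    for i in range(n):
        # Row i of A v = lambda v, using only the entries A[i, 0..i].
        lower = sum((q[j] * float(A[i, j]) for j in range(i + 1)),
                    Polynomial([0.0]))
        residual = lam * q[i] - lower
        if i < n - 1:
            # Solve row i for the next component q_{i+1}.
            q.append(residual * (1.0 / float(A[i, i + 1])))
        else:
            # The last row has no superdiagonal entry: it must vanish.
            q.append(residual)
    return q[-1]


# Illustrative random lower Hessenberg matrix (an assumption, not RTE data).
rng = np.random.default_rng(0)
n = 5
A = np.tril(rng.normal(size=(n, n)))
A += np.diag(rng.uniform(0.5, 1.5, size=n - 1), k=1)

q_n = associated_polynomial(A)
roots = q_n.roots()
eigs = np.linalg.eigvals(A)

# Distance from each eigenvalue to its nearest polynomial root.
gaps = [float(np.min(np.abs(roots - e))) for e in eigs]
print("largest eigenvalue-to-root gap:", max(gaps))
```

Because $q_n$ is a scalar multiple of the characteristic polynomial of `A`, constraining the roots of such a polynomial (real, or real and bounded) constrains the eigenvalues of the matrix, which is the kind of handle the abstract describes for enforcing hyperbolicity.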
