Machine learning for public policy: Do we need to sacrifice accuracy to make models fair?


Dec 05, 2020

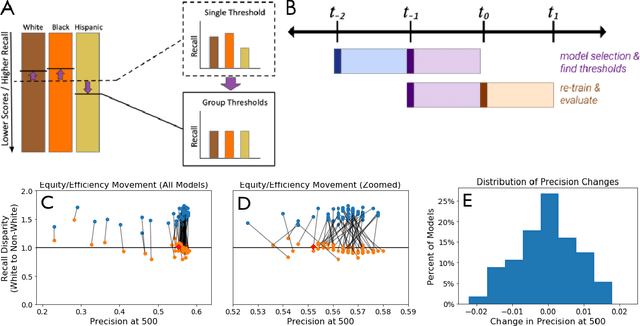

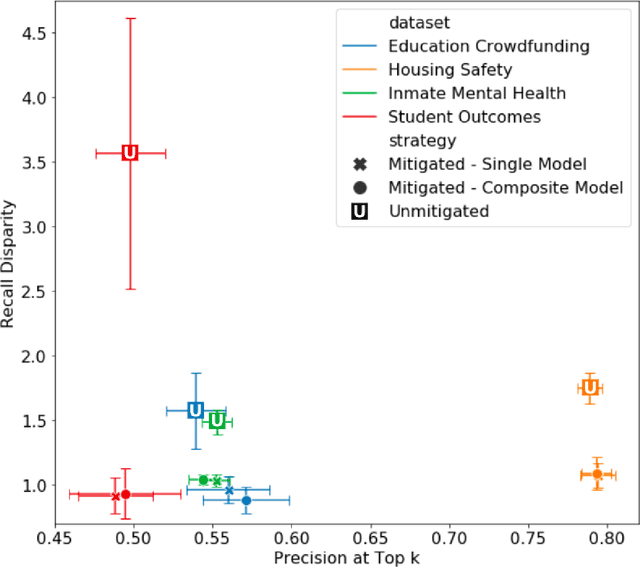

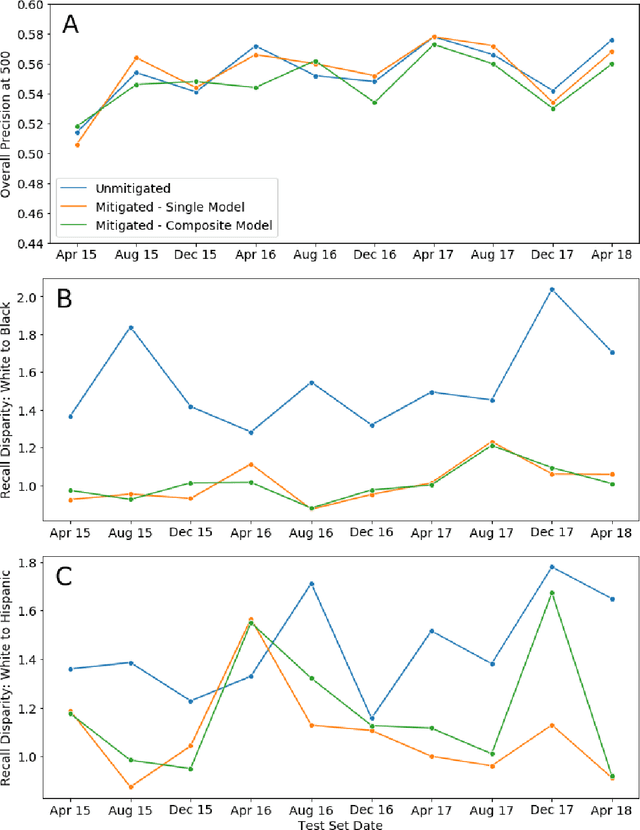

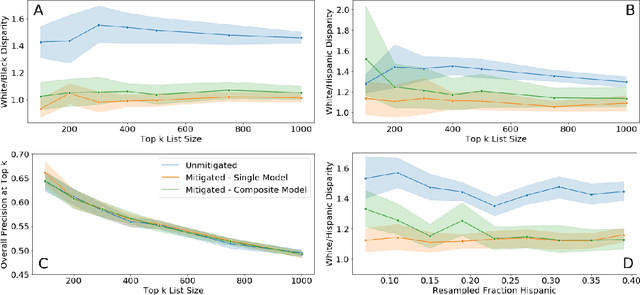

Growing applications of machine learning in policy settings have raised concerns about fairness, especially for racial minorities, but little work has studied the practical trade-offs between fairness and accuracy in real-world settings. This empirical study fills that gap by investigating the accuracy cost of mitigating disparities across several policy settings, focusing on the common context of using machine learning to inform benefit allocation in resource-constrained programs across education, mental health, criminal justice, and housing safety. In each setting, explicitly focusing on achieving equity and using our proposed post-hoc disparity mitigation methods substantially improved fairness without sacrificing accuracy. This challenges the commonly held assumption that reducing disparities requires either accepting an appreciable drop in accuracy or developing novel, complex methods.
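To make the idea of post-hoc disparity mitigation under a resource constraint concrete, here is a minimal sketch in Python. It is an illustration only, not the authors' implementation: the abstract does not specify the method, so the choice of recall as the fairness metric, the greedy selection rule, the function names, and the toy data are all assumptions. The sketch leaves the model's scores unchanged and only alters who is selected within a fixed budget of `k` slots.

```python
from collections import defaultdict


def select_top_k(scores, k):
    """Baseline: pick the k highest-scoring individuals, ignoring group."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    return set(order[:k])


def select_equalized_recall(scores, labels, groups, k):
    """Hypothetical greedy post-hoc rule: repeatedly take the next-highest
    scored individual from the group whose recall is currently lowest.

    Labels are used here, so this would be tuned on historical labeled data;
    the resulting per-group cutoffs would then be applied to new cases.
    """
    # Rank individuals within each group by descending score.
    ranked = defaultdict(list)
    for i in sorted(range(len(scores)), key=lambda i: -scores[i]):
        ranked[groups[i]].append(i)

    # Count the positives in each group (the recall denominators).
    positives = defaultdict(int)
    for y, g in zip(labels, groups):
        positives[g] += y

    taken = {g: 0 for g in ranked}  # how many selected per group so far
    hits = {g: 0 for g in ranked}   # how many selected positives per group
    selected = set()

    def priority(g):
        # Lowest recall first; ties go to the group with the higher next score.
        recall = hits[g] / positives[g] if positives[g] else 1.0
        return (recall, -scores[ranked[g][taken[g]]])

    while len(selected) < min(k, len(scores)):
        open_groups = [g for g in ranked if taken[g] < len(ranked[g])]
        g = min(open_groups, key=priority)
        i = ranked[g][taken[g]]
        taken[g] += 1
        hits[g] += labels[i]
        selected.add(i)
    return selected


def recall_by_group(selected, labels, groups):
    """Share of each group's positives that were selected."""
    hits, positives = defaultdict(int), defaultdict(int)
    for i, (y, g) in enumerate(zip(labels, groups)):
        positives[g] += y
        hits[g] += y if i in selected else 0
    return {g: hits[g] / positives[g] for g in positives if positives[g]}


# Toy data (made up for illustration): group B's scores run systematically lower.
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.5, 0.4, 0.3]
labels = [1, 1, 0, 0, 1, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(recall_by_group(select_top_k(scores, 4), labels, groups))
print(recall_by_group(select_equalized_recall(scores, labels, groups, 4), labels, groups))
```

On this deliberately contrived data, the single-threshold baseline spends the whole budget on group A, while the greedy rule splits it across both groups. The toy numbers say nothing about the study's findings; they only show how a selection rule can change group-level recall without retraining the model.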
