MAC-DO: Charge Based Multi-Bit Analog In-Memory Accelerator Compatible with DRAM Using Output Stationary Mapping

Paper and Code

Jul 16, 2022

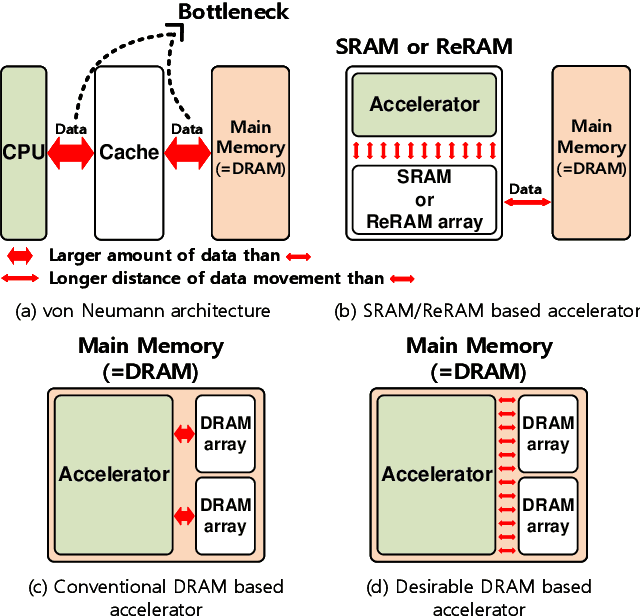

Deep neural networks (DNNs) have proven effective in various areas such as classification, image processing, video segmentation, and speech recognition. Accelerator-in-memory (AiM) architectures are a promising way to accelerate DNNs efficiently because they avoid the memory bottleneck of the traditional von Neumann architecture. Because the main memory in many systems is DRAM, a highly parallel multiply-accumulate (MAC) array within the DRAM can maximize the benefit of AiM by reducing both the distance and the amount of data movement between the processor and the main memory. This paper presents MAC-DO, an AiM architecture based on an analog MAC array. In contrast with previous in-DRAM accelerators, MAC-DO makes an entire DRAM array participate in MAC computations simultaneously, with no idle cells, leading to higher throughput and energy efficiency. This improvement is made possible by a new analog computation method based on charge steering. In addition, MAC-DO natively supports multi-bit MACs with good linearity. MAC-DO remains compatible with current 1T1C DRAM technology and requires no modification of the DRAM cell or array. A MAC-DO array accelerates matrix multiplications using output stationary mapping and thus supports most of the computations performed in DNNs. Our evaluation using transistor-level simulation shows that a test MAC-DO array with 16 x 16 MAC-DO cells achieves 188.7 TOPS/W and 97.07% Top-1 accuracy on the MNIST dataset without retraining.
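As a rough illustration of the output stationary mapping mentioned in the abstract, the following Python sketch models the dataflow digitally: each position in a 2D grid holds one output element and accumulates one product per time step. It is a sketch of the general dataflow only and does not model MAC-DO's analog charge-steering circuit; the function name `output_stationary_matmul` and the list-of-lists representation are assumptions made for this example, not taken from the paper.

```python
def output_stationary_matmul(a, b):
    """Multiply matrices a (rows x inner) and b (inner x cols).

    Hypothetical digital model of an output stationary dataflow:
    acc[i][j] plays the role of one MAC cell whose partial sum stays
    in place while operands stream past it, one step per inner index.
    """
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions of a and b do not match")

    # One accumulator per output element; it never moves between cells.
    acc = [[0] * cols for _ in range(rows)]

    # Each step k applies column k of a and row k of b to the grid.
    # In hardware all (i, j) cells would update in parallel within a step;
    # the two inner loops here only emulate that parallel update.
    for k in range(inner):
        for i in range(rows):
            for j in range(cols):
                acc[i][j] += a[i][k] * b[k][j]
    return acc


if __name__ == "__main__":
    result = output_stationary_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
    print(result)  # [[19, 22], [43, 50]]
```

Placing the loop over `k` outermost is what makes the sketch output stationary: partial sums stay fixed at their grid positions, and only the input operands change from step to step.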
