LUTNet: speeding up deep neural network inferencing via look-up tables

May 25, 2019

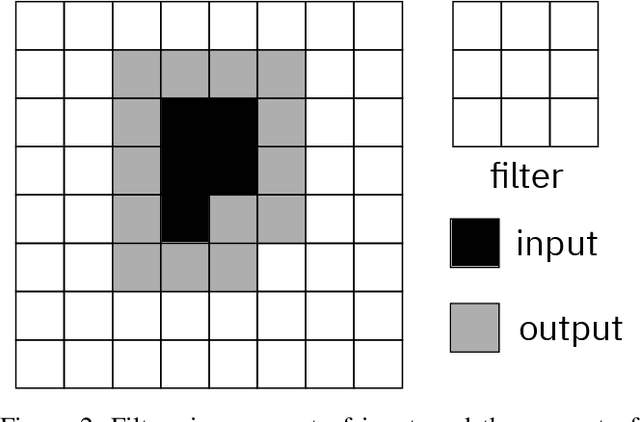

We consider the use of look-up tables (LUTs) to speed up and simplify the hardware implementation of a deep learning network for inference, after the weights have been trained. Replacing the matrix multiply-and-add operations with a small number of LUTs and additions yields a multiplier-less implementation. We compare the tradeoffs of this approach in terms of accuracy versus LUT size and number of operations.
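To make the idea concrete, here is a minimal sketch of one way a matrix-vector product could be computed with lookups and additions only. It is an illustration under stated assumptions, not the paper's scheme: the abstract does not specify how the tables are organized, so this sketch assumes inputs are uniformly quantized to a fixed number of levels and that one product table is precomputed per trained weight. The names `quantize`, `build_luts`, and `lut_matvec` are hypothetical.

```python
def quantize(x, levels, lo=0.0, hi=1.0):
    """Map each input value to the index of the nearest of `levels` uniform levels in [lo, hi]."""
    step = (hi - lo) / (levels - 1)
    return [min(levels - 1, max(0, round((v - lo) / step))) for v in x]


def build_luts(weights, levels, lo=0.0, hi=1.0):
    """Precompute, offline, w * level_value for every weight and every input level.

    Assumption: one table per weight, so total storage is rows * cols * levels entries.
    """
    step = (hi - lo) / (levels - 1)
    level_values = [lo + q * step for q in range(levels)]
    return [[[w * v for v in level_values] for w in row] for row in weights]


def lut_matvec(luts, x_q):
    """Approximate W @ x at inference time using only table lookups and additions."""
    out = []
    for row_tables in luts:
        acc = 0.0
        for table, q in zip(row_tables, x_q):
            acc += table[q]  # a lookup stands in for the multiply
        out.append(acc)
    return out


weights = [[1.0, -2.0], [0.5, 4.0]]   # stands in for trained weights
luts = build_luts(weights, levels=5)  # input levels: 0, 0.25, 0.5, 0.75, 1.0
x_q = quantize([0.5, 0.25], levels=5)
print(lut_matvec(luts, x_q))          # [0.0, 1.25]
```

In this sketch the accuracy versus LUT-size tradeoff shows up directly: more quantization levels reduce the input rounding error but grow every table, while the number of additions per output stays equal to the number of inputs.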

* 9 pages, 7 figures
