Low-Depth Optical Neural Networks

Paper and Code

May 18, 2019

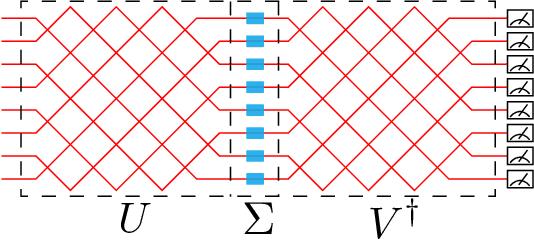

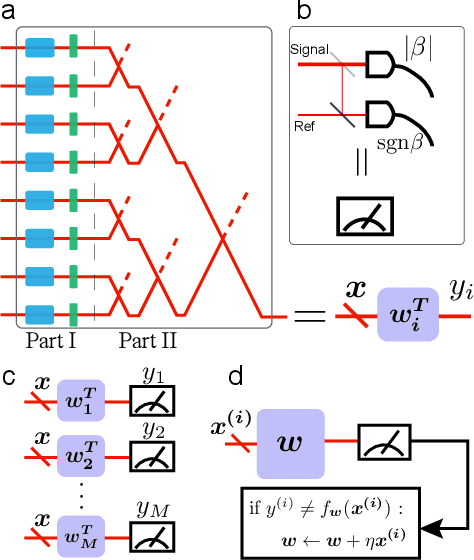

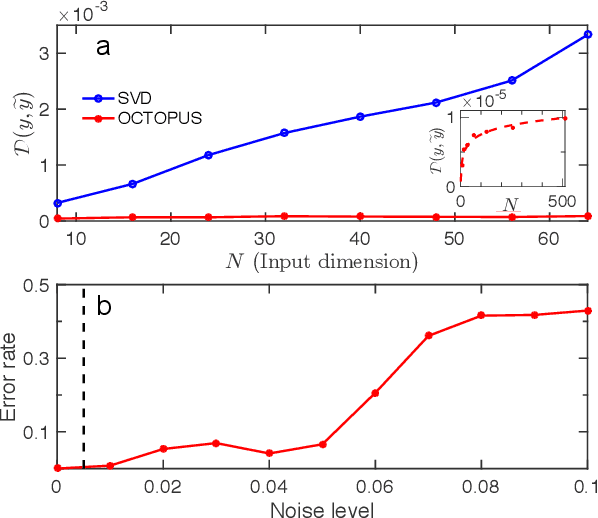

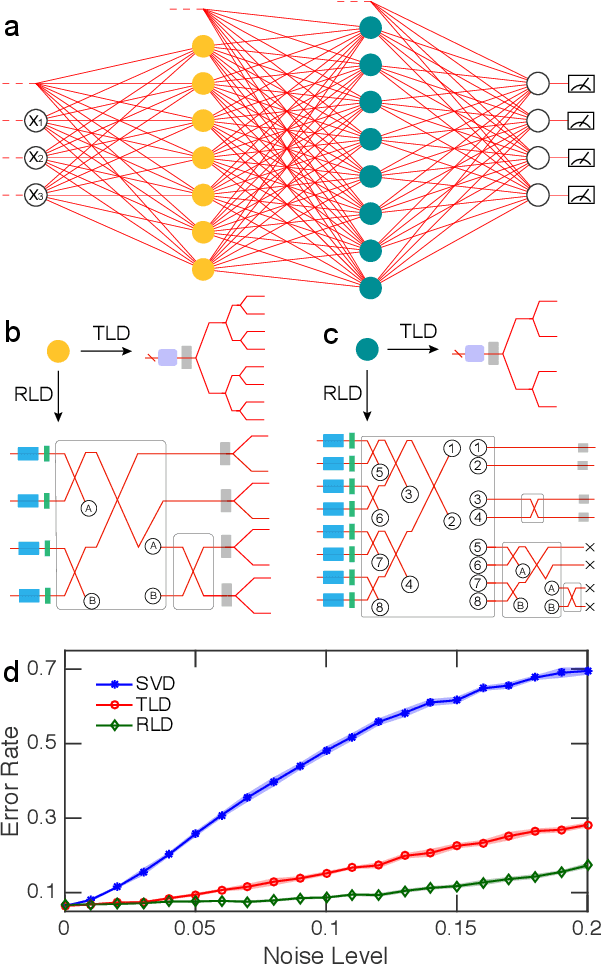

Optical neural networks (ONNs) are emerging as an attractive proposal for machine-learning applications, enabling high-speed computation with low energy consumption. However, several challenges remain in applying ONNs to industrial applications, including the realization of activation functions and maintaining stability. In particular, the stability of ONNs decreases with circuit depth, limiting their scalability for practical use. Here we demonstrate how to compress the circuit depth of an ONN so that it scales only logarithmically, leading to an exponential gain in noise robustness. Our low-depth (LD) ONN is based on an architecture called Optical CompuTing Of dot-Product UnitS (OCTOPUS), which can also be applied on its own as a linear perceptron for solving classification problems. Using the standard Letter Recognition data set, we present numerical evidence that the LD-ONN can exhibit a significant gain in noise robustness compared with a previous ONN proposal based on singular-value decomposition [Nature Photonics 11, 441 (2017)].
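The link between depth and noise robustness can be illustrated with a toy numerical model. The sketch below is not the OCTOPUS optical circuit; it only shows a dot product evaluated by pairwise (tree) reduction, whose depth grows as the base-2 logarithm of the input size, and compares it with a hypothetical baseline whose depth grows linearly. The per-layer error `eps`, the multiplicative noise model in `surviving_signal`, and all function names are assumptions made for illustration.

```python
import math


def log_depth(n: int) -> int:
    """Number of layers in a balanced binary tree that combines n inputs."""
    return math.ceil(math.log2(n)) if n > 1 else 0


def tree_dot(x, w):
    """Dot product by pairwise reduction. Returns (value, number of layers)."""
    terms = [a * b for a, b in zip(x, w)]
    if not terms:
        return 0.0, 0
    depth = 0
    while len(terms) > 1:
        if len(terms) % 2:
            terms.append(0.0)  # pad so every term has a partner
        terms = [terms[i] + terms[i + 1] for i in range(0, len(terms), 2)]
        depth += 1
    return terms[0], depth


def surviving_signal(depth: int, eps: float) -> float:
    """Assumed toy noise model: each layer keeps a fraction (1 - eps)."""
    return (1.0 - eps) ** depth


if __name__ == "__main__":
    eps = 0.01  # hypothetical per-layer error
    for n in (16, 256, 4096):
        linear = surviving_signal(n, eps)             # assumed linear-depth baseline
        logarithmic = surviving_signal(log_depth(n), eps)
        print(f"n={n}: linear depth {linear:.3g}, log depth {logarithmic:.3g}")
```

Under this assumed model, the surviving fraction for the linear-depth baseline decays exponentially in the input size, while for the tree it decays only polynomially, which is one way to read the "exponential gain" stated in the abstract.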
