Lossless Compression with Latent Variable Models

Paper and Code

Apr 22, 2021

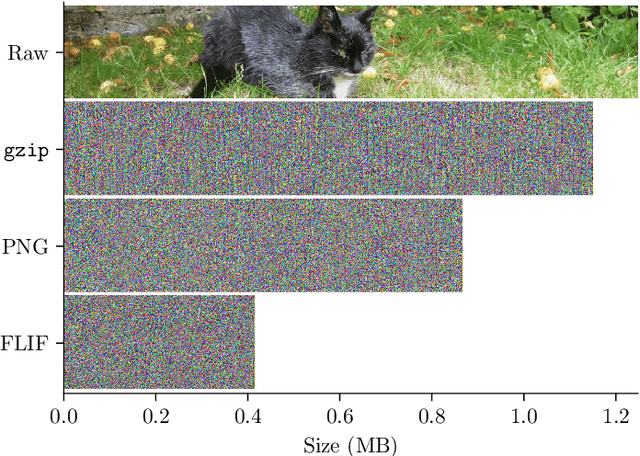

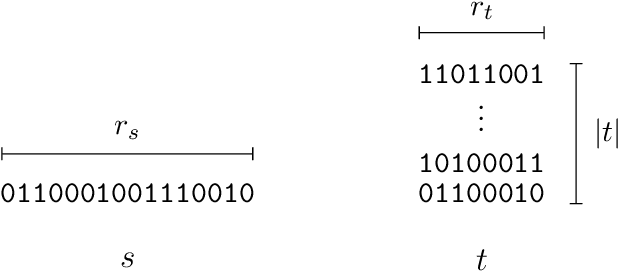

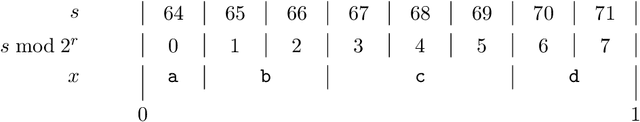

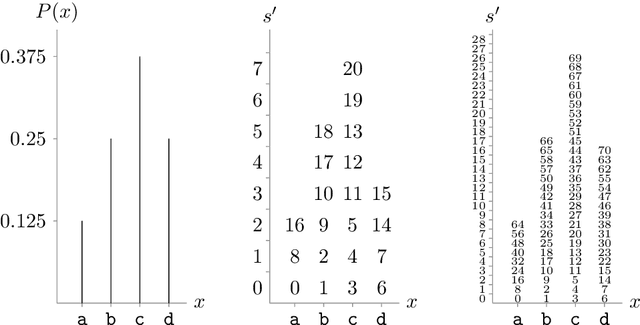

We develop a simple and elegant method for lossless compression using latent variable models, which we call 'bits back with asymmetric numeral systems' (BB-ANS). The method involves interleaving encode and decode steps, and achieves an optimal rate when compressing batches of data. We demonstrate it firstly on the MNIST test set, showing that state-of-the-art lossless compression is possible using a small variational autoencoder (VAE) model. We then make use of a novel empirical insight, that fully convolutional generative models, trained on small images, are able to generalize to images of arbitrary size, and extend BB-ANS to hierarchical latent variable models, enabling state-of-the-art lossless compression of full-size colour images from the ImageNet dataset. We describe 'Craystack', a modular software framework which we have developed for rapid prototyping of compression using deep generative models.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge