Long Short-Term Memory for Spatial Encoding in Multi-Agent Path Planning

Mar 21, 2022

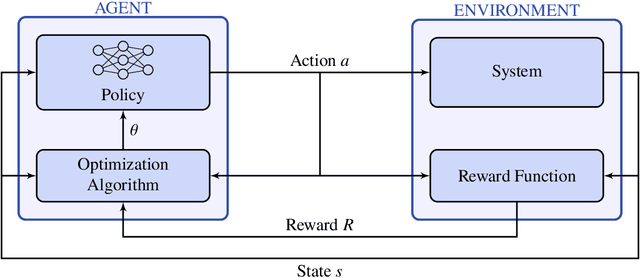

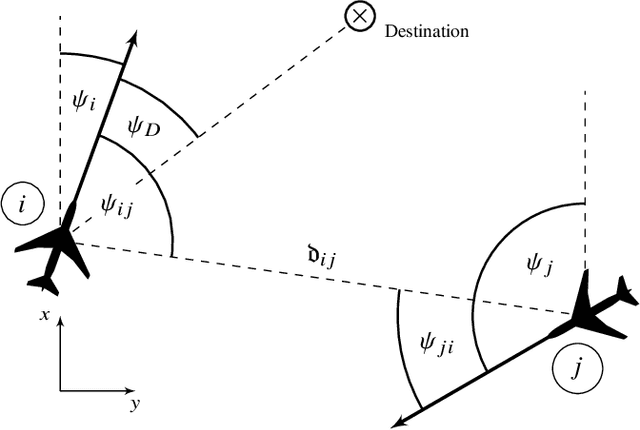

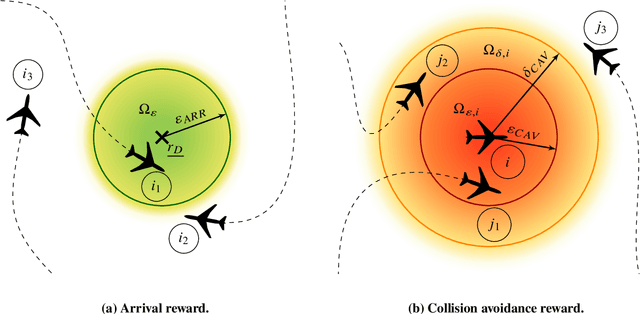

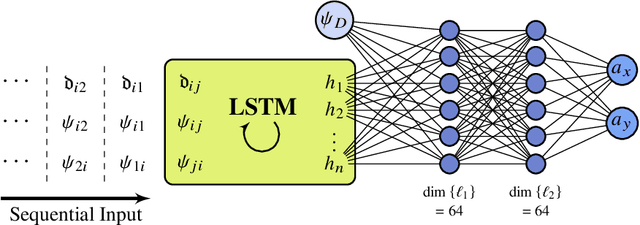

Reinforcement learning-based path planning for multi-agent systems of varying size is a research topic of growing significance as domains such as urban air mobility and autonomous aerial vehicles advance. Reinforcement learning with continuous state and action spaces is used to train a policy network that produces the desired path planning behaviors and is suitable for time-critical applications. A Long Short-Term Memory (LSTM) module is proposed to encode the states of a varying, unspecified number of agents. The described training strategies and policy architecture yield a guidance system that scales to an arbitrary number of agents and to unbounded physical dimensions, even though training takes place at a smaller scale. The guidance is implemented on a low-cost, off-the-shelf onboard computer. The feasibility of the proposed approach is validated with flight tests of up to four drones navigating autonomously and collision-free in a real-world environment.
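To illustrate how an LSTM can turn a variable number of agent states into a single fixed-length vector, here is a minimal sketch in plain Python. It is not the authors' implementation. The function names (`init_params`, `lstm_step`, `encode_agents`), the four-element state layout, the hidden size, and the random untrained weights are all assumptions made for illustration. The abstract does not say how agent states are composed or ordered.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def init_params(input_size, hidden_size, seed=0):
    """Random, untrained weights for one LSTM cell (illustrative only)."""
    rng = random.Random(seed)
    rows = 4 * hidden_size           # input, forget, candidate, output gates
    cols = input_size + hidden_size  # weights act on the concatenation [x, h]
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]
    bias = [0.0] * rows
    return weights, bias


def lstm_step(x, h, c, weights, bias):
    """One standard LSTM update for a single input vector x."""
    n = len(h)
    xh = list(x) + list(h)
    z = [sum(w * v for w, v in zip(row, xh)) + b for row, b in zip(weights, bias)]
    i = [sigmoid(v) for v in z[0:n]]            # input gate
    f = [sigmoid(v) for v in z[n:2 * n]]        # forget gate
    g = [math.tanh(v) for v in z[2 * n:3 * n]]  # candidate cell values
    o = [sigmoid(v) for v in z[3 * n:4 * n]]    # output gate
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new


def encode_agents(agent_states, weights, bias, hidden_size):
    """Fold any number of agent state vectors into one fixed-length vector."""
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for state in agent_states:  # one LSTM step per observed agent
        h, c = lstm_step(state, h, c, weights, bias)
    return h                    # length is hidden_size regardless of agent count


# Assumed state layout: [rel_x, rel_y, rel_vx, rel_vy] of each other agent.
weights, bias = init_params(input_size=4, hidden_size=8)
one_neighbor = encode_agents([[1.0, 0.0, 0.1, 0.0]], weights, bias, 8)
three_neighbors = encode_agents(
    [[5.0, 2.0, 0.0, 0.3], [3.0, -1.0, 0.2, 0.0], [1.0, 0.0, 0.1, 0.0]],
    weights, bias, 8,
)
print(len(one_neighbor), len(three_neighbors))
```

Both encodings have the same length, so a downstream policy network with a fixed input size could consume them whatever the number of agents. Because an LSTM is order-sensitive, the sequence in which agents are fed in is itself a design choice, for example sorting by distance.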
