Locate&Edit: Energy-based Text Editing for Efficient, Flexible, and Faithful Controlled Text Generation

Jun 30, 2024

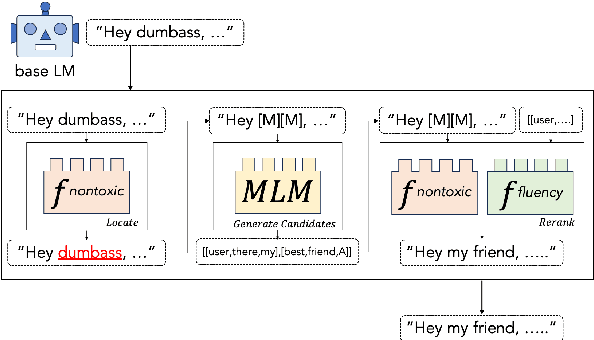

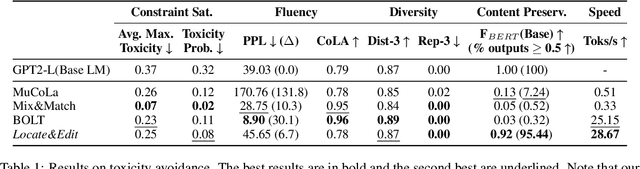

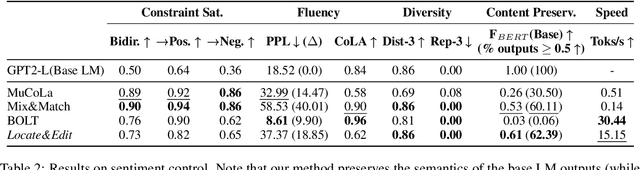

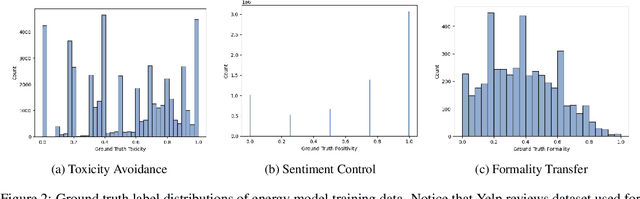

Recent approaches to controlled text generation (CTG) often involve manipulating the weights or logits of base language models (LMs) at decoding time. However, these methods are inapplicable to the latest black-box LMs and ineffective at preserving the core semantics of the base LM's original generations. In this work, we propose Locate&Edit (L&E), an efficient and flexible energy-based approach to CTG that edits text outputs from a base LM using off-the-shelf energy models. Given text outputs from the base LM, L&E first uses energy models to locate the spans most relevant to a constraint (e.g., toxicity), and then edits these spans by replacing them with more suitable alternatives. Importantly, our method is compatible with black-box LMs, as it requires only their text outputs. Because L&E does not mandate a specific architecture for its component models, it can also work with diverse combinations of available off-the-shelf models. Moreover, L&E preserves the base LM's original generations by selectively modifying the constraint-related aspects of the texts and leaving the rest unchanged. These targeted edits also make L&E efficient. Our experiments confirm that L&E achieves superior speed and semantic preservation of the base LM's generations, while simultaneously obtaining competitive or improved constraint satisfaction. Furthermore, we analyze how the granularity of the energy distribution impacts CTG performance and find that fine-grained, regression-based energy models improve constraint satisfaction compared to conventional binary-classifier energy models.
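The locate-then-edit loop described in the abstract can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration and not the paper's implementation: `toy_energy` is a hypothetical lexicon-based stand-in for an off-the-shelf energy model, `TOY_CANDIDATES` is a hypothetical replacement table standing in for a model that proposes alternatives, and locating spans by leave-one-out energy drop is one simple choice that the abstract does not confirm. The sketch only needs the text output, which mirrors the black-box setting.

```python
from typing import Callable, Dict, List

# Hypothetical per-token scores standing in for an off-the-shelf energy model.
TOY_SCORES: Dict[str, float] = {"stupid": 0.9, "awful": 0.7}
# Hypothetical replacement table; a real system would propose candidates with a model.
TOY_CANDIDATES: Dict[str, List[str]] = {
    "stupid": ["silly", "strange"],
    "awful": ["poor", "weak"],
}

Energy = Callable[[List[str]], float]


def toy_energy(tokens: List[str]) -> float:
    """Toy energy: lower means the constraint is better satisfied."""
    return sum(TOY_SCORES.get(t.lower(), 0.0) for t in tokens)


def locate(tokens: List[str], energy: Energy, k: int = 1) -> List[int]:
    """Return indices of up to k tokens whose removal lowers the energy most."""
    base = energy(tokens)
    drops = [
        (base - energy(tokens[:i] + tokens[i + 1:]), i)
        for i in range(len(tokens))
    ]
    ranked = sorted(drops, key=lambda d: (-d[0], d[1]))
    return sorted(i for drop, i in ranked[:k] if drop > 0)


def edit(tokens: List[str], positions: List[int], energy: Energy,
         candidates: Dict[str, List[str]]) -> List[str]:
    """Replace each located token with the lowest-energy candidate."""
    out = list(tokens)
    for i in positions:
        # Keeping the original token is always an option.
        options = [out[i]] + candidates.get(out[i].lower(), [])
        out[i] = min(options, key=lambda c: energy(out[:i] + [c] + out[i + 1:]))
    return out


def locate_and_edit(text: str, energy: Energy = toy_energy,
                    candidates: Dict[str, List[str]] = TOY_CANDIDATES,
                    threshold: float = 0.5, k: int = 1) -> str:
    """Edit only texts whose energy exceeds the threshold; leave others as-is."""
    tokens = text.split()
    if energy(tokens) <= threshold:
        return text
    return " ".join(edit(tokens, locate(tokens, energy, k), energy, candidates))


print(locate_and_edit("That was a stupid idea"))
```

Because only the located positions are rewritten, every other token of the base LM's output passes through untouched, which is the intuition behind the semantic-preservation and efficiency claims. Swapping `toy_energy` for a regression-style scorer or a binary classifier only changes the `energy` argument.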
