LLMs in Web-Development: Evaluating LLM-Generated PHP code unveiling vulnerabilities and limitations

Paper and Code

Apr 21, 2024

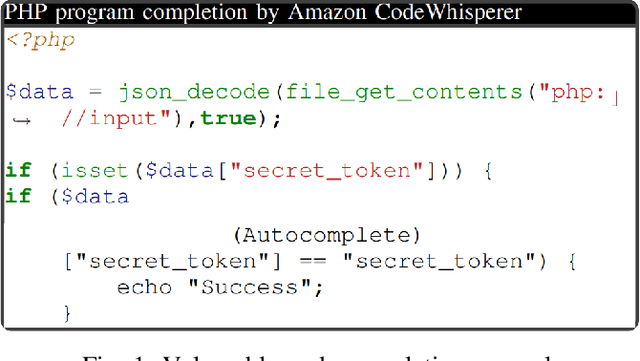

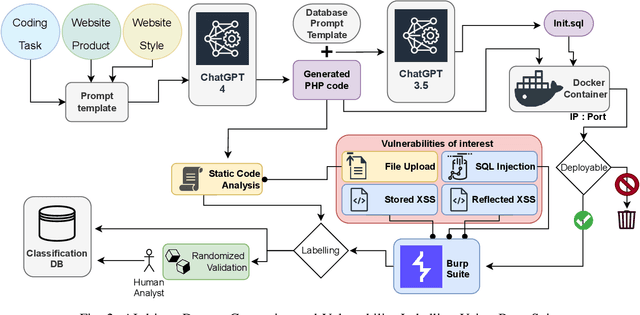

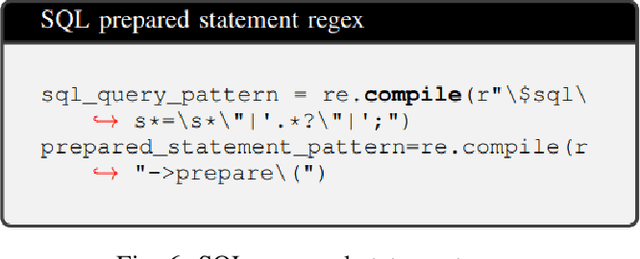

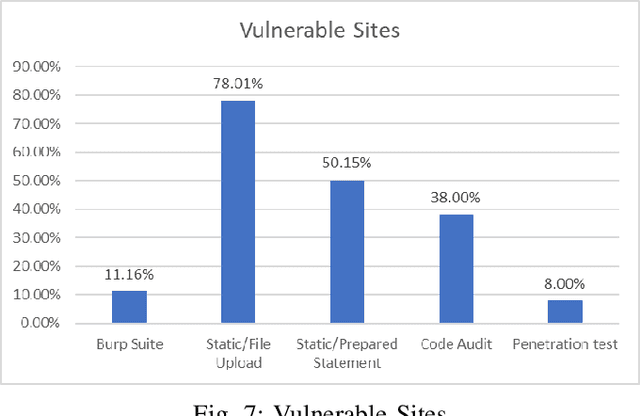

This research examines the security of web application code generated by Large Language Models by analyzing a dataset of 2,500 small dynamic PHP websites. Each AI-generated site is deployed as a standalone website in a Docker container and then scanned for security vulnerabilities. The evaluation uses a hybrid methodology that combines the Burp Suite active scanner, static analysis, and manual checks. Our investigation focuses on four vulnerability classes: File Upload, SQL Injection, Stored XSS, and Reflected XSS. This approach highlights the potential security flaws in AI-generated PHP code and provides a critical perspective on the reliability and security implications of deploying such code in real-world scenarios. Our evaluation confirms that 27% of the programs generated by GPT-4 verifiably contain vulnerabilities in the PHP code; based on static scanning and manual verification, the true figure is potentially much higher. This poses a substantial risk to software safety and security. To support the research community and foster further analysis, we have made the source code publicly available, alongside a record enumerating the detected vulnerabilities for each sample. The study also demonstrates the need for rigorous testing and evaluation of such technologies before they are used in software development.
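To make two of the vulnerability classes named above concrete, here is a minimal sketch of SQL Injection and Reflected XSS, each next to a safer counterpart. It is written in Python purely for illustration and is not taken from the paper's dataset, which consists of PHP sites. The table layout, the function names, and the payloads are all assumptions made for this example.

```python
import html
import sqlite3


def make_db():
    # Hypothetical in-memory table used only for this illustration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a1"), ("bob", "b2")],
    )
    return conn


def lookup_vulnerable(conn, name):
    # SQL Injection: user input is concatenated directly into the query text.
    query = "SELECT secret FROM users WHERE name = '" + name + "'"
    return [row[0] for row in conn.execute(query)]


def lookup_safe(conn, name):
    # Parameterized query: the driver treats the input strictly as data.
    query = "SELECT secret FROM users WHERE name = ?"
    return [row[0] for row in conn.execute(query, (name,))]


def greet_vulnerable(name):
    # Reflected XSS: user input is echoed into HTML without escaping.
    return "<p>Hello, " + name + "</p>"


def greet_safe(name):
    # Escaping turns markup characters into harmless HTML entities.
    return "<p>Hello, " + html.escape(name) + "</p>"


if __name__ == "__main__":
    conn = make_db()
    sql_payload = "' OR '1'='1"
    print(lookup_vulnerable(conn, sql_payload))  # leaks every row
    print(lookup_safe(conn, sql_payload))        # matches no user
    xss_payload = "<script>alert(1)</script>"
    print(greet_vulnerable(xss_payload))
    print(greet_safe(xss_payload))
```

In PHP the analogous mitigations would typically be prepared statements and output escaping, but how the scanned samples handle either is not described in this abstract.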
