Linking Generative Adversarial Learning and Binary Classification


Sep 05, 2017

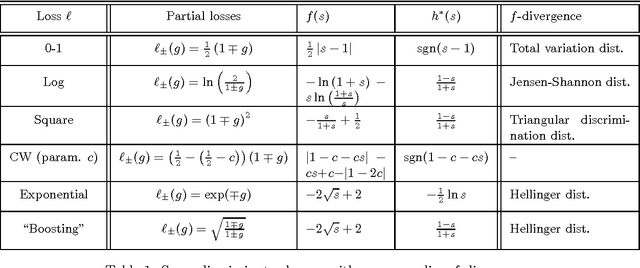

In this note, we point out a basic link between generative adversarial (GA) training and binary classification: any powerful discriminator essentially computes an (f-)divergence between the distributions of real and generated samples. This result, which has been repeatedly re-derived in decision theory, has implications for generative adversarial networks (GANs): it provides an alternative perspective on training f-GANs by designing the discriminator loss function.
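
As a small illustration of this link, the sketch below checks the best-known special case numerically. It is not taken from the note itself: it assumes the standard log-loss (cross-entropy) discriminator objective, for which the optimal discriminator is p/(p+q) and the attained value equals 2·JS(p, q) − log 4, with JS the Jensen-Shannon divergence. The toy distributions `p_real` and `q_gen` and all function names are hypothetical and chosen only for this example.

```python
import math

def kl(u, v):
    # Kullback-Leibler divergence between discrete distributions u and v.
    return sum(a * math.log(a / b) for a, b in zip(u, v) if a > 0)

def js_divergence(p, q):
    # Jensen-Shannon divergence: average KL to the mixture m = (p + q) / 2.
    m = [(a + b) / 2 for a, b in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def optimal_discriminator(p, q):
    # Bayes-optimal classifier under log loss: probability that x is real.
    return [a / (a + b) for a, b in zip(p, q)]

def log_loss_value(p, q, d):
    # Discriminator objective E_p[log D(x)] + E_q[log(1 - D(x))].
    # Assumes every d_i lies strictly between 0 and 1.
    return sum(a * math.log(di) + b * math.log(1 - di)
               for a, b, di in zip(p, q, d))

# Hypothetical toy distributions over three outcomes (strictly positive).
p_real = [0.5, 0.3, 0.2]
q_gen = [0.2, 0.3, 0.5]

d_star = optimal_discriminator(p_real, q_gen)
value = log_loss_value(p_real, q_gen, d_star)
js = js_divergence(p_real, q_gen)

# The two printed numbers agree up to floating-point error.
print(value, 2 * js - math.log(4))
```

Other choices of discriminator loss would, under the same reasoning, lead to other f-divergences; this sketch covers only the log-loss case.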
