Limited Gradient Descent: Learning With Noisy Labels


Dec 06, 2018

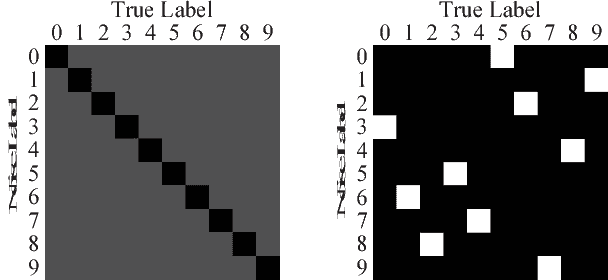

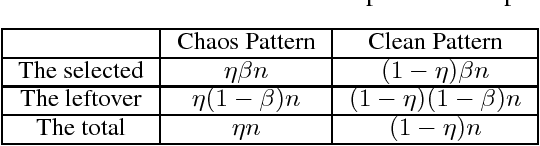

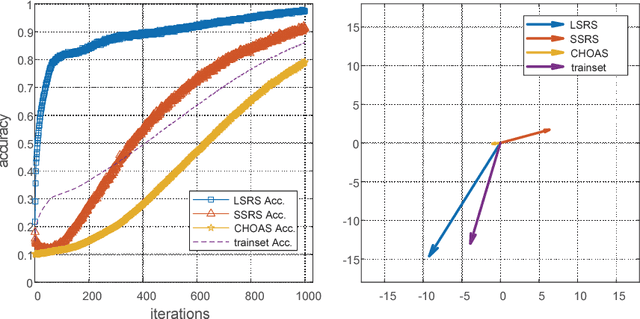

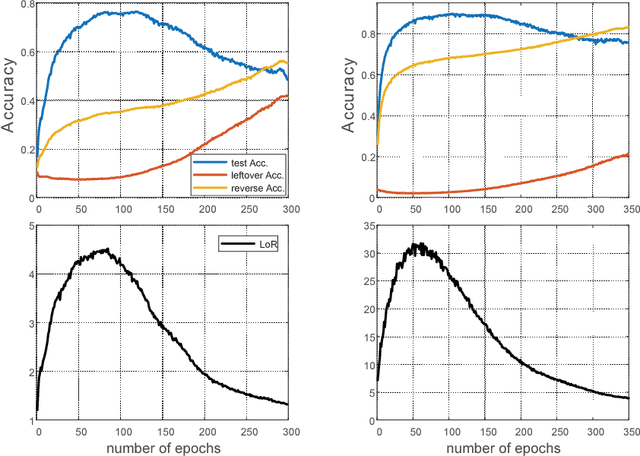

Label noise can impair the generalization of classifiers, so learning the main pattern effectively from samples with noisy labels is an important problem. Recent studies have shown that deep neural networks tend to learn simple patterns before they memorize noise patterns. This suggests searching for the point of best generalization: train on the main pattern and stop just before noise begins to be memorized. An intuitive idea is to find the stopping time with a supervised approach, for example by using a clean verification set. In practice, however, a clean verification set is sometimes difficult to obtain. To solve this problem, we propose an unsupervised method called limited gradient descent to estimate the best stopping time. We modify the labels of a few samples in a noisy dataset so that they are almost certainly false, creating a reverse pattern. By monitoring the learning progress of the noisy samples and the reverse samples, we can determine when to stop learning. In this paper, we also provide some sufficient conditions for learning with noisy labels. Experimental results on CIFAR-10 demonstrate that our approach achieves generalization performance similar to that of supervised methods. For simpler datasets, such as MNIST, we add a relabeling strategy to further improve generalization and achieve state-of-the-art performance.
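The reverse-pattern idea in the abstract can be illustrated with a minimal sketch. This is not the paper's implementation: the function names, the `fraction` of relabeled samples, and in particular the threshold-based stopping rule are all assumptions made for illustration, since the abstract does not state the exact criterion. The sketch relabels a small random subset so that each chosen label differs from its given label, then tracks how well a model fits that subset.

```python
import numpy as np


def make_reverse_samples(labels, num_classes, fraction=0.05, seed=0):
    """Relabel a small random subset so every chosen label is changed.

    Hypothetical helper: the subset size and the uniform choice of the
    new class are assumptions, not details taken from the paper.
    """
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    n = len(labels)
    k = max(1, int(round(fraction * n)))
    reverse_idx = rng.choice(n, size=k, replace=False)
    # An offset in [1, num_classes - 1], taken modulo num_classes,
    # guarantees the new label differs from the given one.
    offsets = rng.integers(1, num_classes, size=k)
    new_labels = labels.copy()
    new_labels[reverse_idx] = (labels[reverse_idx] + offsets) % num_classes
    return new_labels, reverse_idx


def subset_accuracy(predictions, labels, idx):
    """Fraction of samples in idx whose prediction matches the given label."""
    predictions = np.asarray(predictions)
    labels = np.asarray(labels)
    return float(np.mean(predictions[idx] == labels[idx]))


def should_stop(reverse_acc_history, threshold=0.5):
    """Assumed stopping rule: stop once the model fits the reverse labels
    at or above threshold, taken as a sign that memorization has begun."""
    return len(reverse_acc_history) > 0 and reverse_acc_history[-1] >= threshold
```

In a training loop, one would call `subset_accuracy` on the reverse indices after each epoch, append the result to a history list, and check `should_stop`. The threshold value would need tuning per dataset under this assumed rule.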
