Leveraging Pre-Images to Discover Nonlinear Relationships in Multivariate Environments

Jun 01, 2021

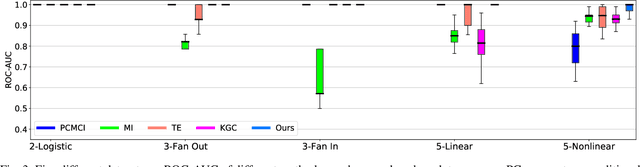

Causal discovery, beyond the inference of a network as a collection of connected dots, offers a crucial functionality in scientific discovery using artificial intelligence. Questions arising in many domains, such as physics, physiology, strategic decision-making in uncertain multi-agent environments, and climatology, among many others, have their roots in causality and reasoning. It has become apparent that many real-world temporal observations are nonlinearly related to each other. While the number of observations can be as high as millions of points, the number of temporal samples can be minimal for ethical or practical reasons, leading to the curse of dimensionality in large-scale systems. This paper proposes a novel method that uses kernel principal component analysis and pre-images to recover nonlinear dependencies in multivariate time-series data. We show that our method outperforms state-of-the-art causal discovery methods when the observations are restricted in time and are nonlinearly related. Extensive simulations on both real-world and synthetic datasets with various topologies are provided to evaluate the proposed method.
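For readers unfamiliar with the two building blocks named in the abstract, the following is a minimal sketch of kernel principal component analysis followed by a pre-image step. It is not the paper's algorithm, which the abstract does not specify. The Gaussian (RBF) kernel, the choice of learning the pre-image map by kernel ridge regression, the toy data, and all function and parameter names (`rbf_kernel`, `kernel_pca`, `fit_preimage_map`, `gamma`, `gamma_z`, `ridge`) are assumptions made for illustration only.

```python
import numpy as np

def rbf_kernel(A, B, gamma):
    # Pairwise squared Euclidean distances, then the Gaussian (RBF) kernel.
    sq = (A ** 2).sum(1)[:, None] + (B ** 2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def kernel_pca(X, n_components, gamma):
    # Kernel PCA on the training set: centre the Gram matrix in feature
    # space, then keep the leading eigenvectors.
    n = X.shape[0]
    K = rbf_kernel(X, X, gamma)
    H = np.eye(n) - np.ones((n, n)) / n        # centring matrix
    Kc = H @ K @ H
    eigvals, eigvecs = np.linalg.eigh(Kc)      # eigenvalues in ascending order
    idx = np.argsort(eigvals)[::-1][:n_components]
    lam = np.clip(eigvals[idx], 0.0, None)     # guard against round-off
    # The projection of training point i on component k is sqrt(lam_k) * v_k[i].
    return eigvecs[:, idx] * np.sqrt(lam)

def fit_preimage_map(Z, X, gamma_z, ridge):
    # Assumed pre-image strategy: learn a map from projections Z back to
    # input space X with kernel ridge regression.
    Kz = rbf_kernel(Z, Z, gamma_z)
    W = np.linalg.solve(Kz + ridge * np.eye(len(Z)), X)
    return lambda Z_new: rbf_kernel(Z_new, Z, gamma_z) @ W

# Toy data (hypothetical): noisy points on a circle, a nonlinear structure
# that linear PCA cannot summarise with one component.
rng = np.random.default_rng(0)
theta = rng.uniform(0.0, 2.0 * np.pi, size=60)
X = np.c_[np.cos(theta), np.sin(theta)] + 0.05 * rng.normal(size=(60, 2))

Z = kernel_pca(X, n_components=3, gamma=1.0)             # nonlinear features
preimage = fit_preimage_map(Z, X, gamma_z=5.0, ridge=1e-3)
X_hat = preimage(Z)                                      # approximate pre-images
mse = float(np.mean((X - X_hat) ** 2))
print("reconstruction MSE:", mse)
```

The pre-image step matters because kernel PCA works in an implicit feature space: a point there generally has no exact counterpart in the original variable space, so any interpretation in terms of the observed variables needs an approximate inverse such as the one sketched here.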
