Leaving Some Facial Features Behind

Oct 29, 2024

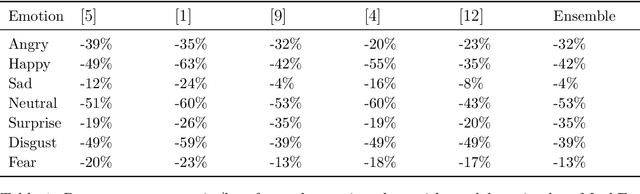

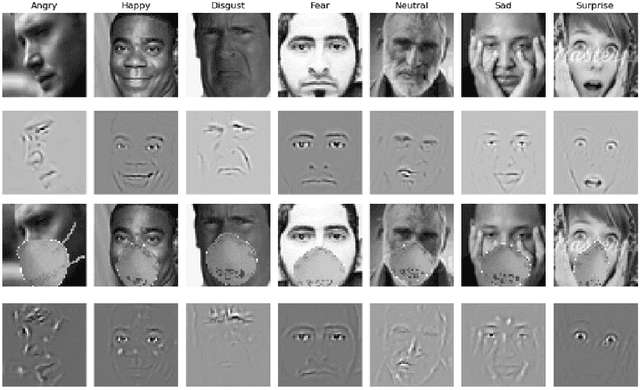

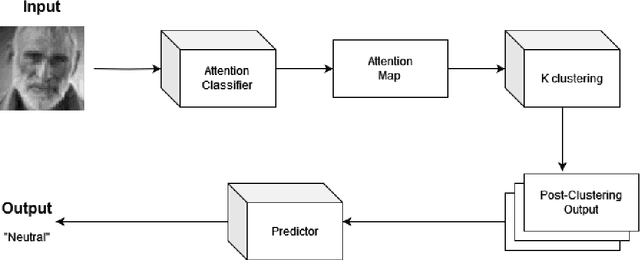

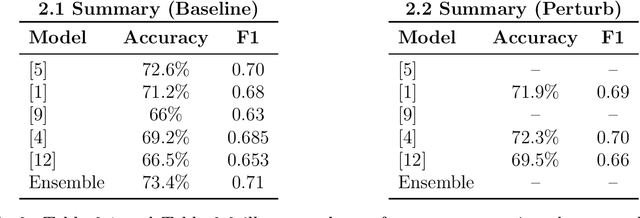

Facial expressions are crucial to human communication, offering insights into emotional states. This study examines how specific facial features influence emotion classification by applying facial perturbations to the FER2013 dataset. As expected, models trained on data with some important facial features removed experienced up to an 85% accuracy drop relative to the baseline for emotions such as happy and surprise. Surprisingly, for the emotion disgust, classifier accuracy appears to improve slightly after the masks have been applied. Building on this observation, we mask out facial features during training, which motivates our proposed Perturb Scheme. The scheme has three phases (attention-based classification, pixel clustering, and feature-focused training) and demonstrates improvements in classification accuracy. The experimental results suggest that removing individual facial features can offer some benefit in emotion recognition tasks.
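To make the idea of a facial perturbation concrete, the following is a minimal sketch of masking out one facial region in a 48x48 grayscale image, the resolution used by FER2013. It is not the paper's Perturb Scheme: the bounding boxes in `FEATURE_BOXES` and the helper `mask_feature` are hypothetical, with fixed coordinates chosen only for illustration, whereas the abstract describes locating regions through attention and pixel clustering.

```python
import numpy as np

# Hypothetical bounding boxes (row_start, row_end, col_start, col_end) on a
# 48x48 face crop. These coordinates are illustrative assumptions only and
# are not taken from the paper.
FEATURE_BOXES = {
    "eyes": (12, 22, 6, 42),
    "nose": (20, 32, 18, 30),
    "mouth": (32, 42, 12, 36),
}


def mask_feature(image, feature, fill=0):
    """Return a copy of `image` with the named feature region set to `fill`."""
    r0, r1, c0, c1 = FEATURE_BOXES[feature]
    masked = image.copy()  # leave the input image untouched
    masked[r0:r1, c0:c1] = fill
    return masked


# A random stand-in for a face image; pixel values are kept in 1..255 so the
# zeroed-out region is easy to distinguish from the original content.
rng = np.random.default_rng(0)
face = rng.integers(1, 256, size=(48, 48), dtype=np.uint8)
no_mouth = mask_feature(face, "mouth")
```

Training a classifier on images like `no_mouth` and comparing its per-emotion accuracy with a baseline trained on unmasked images is one way to probe how much a given region contributes to each emotion class.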
