Learning to track environment state via predictive autoencoding

Paper and Code

Dec 14, 2021

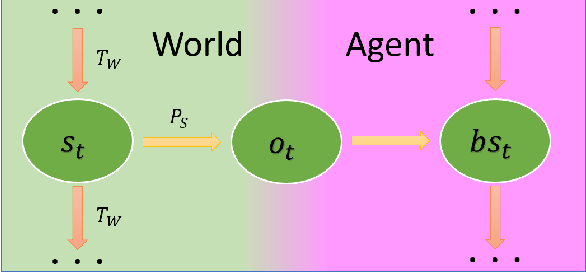

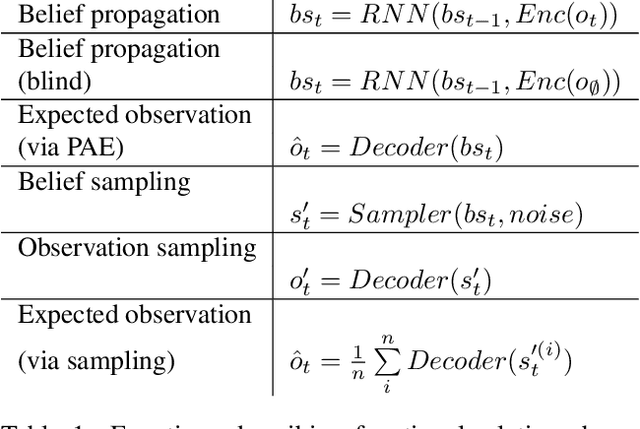

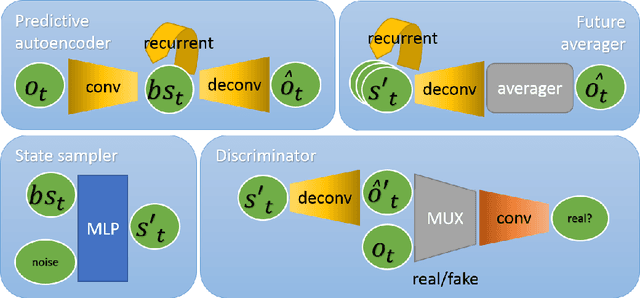

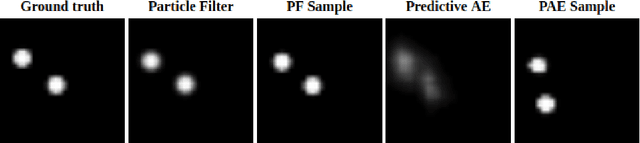

This work introduces a neural architecture for learning forward models of stochastic environments. The models are learnt solely from unstructured temporal observations in the form of images. Once trained, the model can track the environment state in the presence of noise or when new percepts arrive only intermittently. The state estimate can also be propagated in observation-blind mode, which allows for long-term predictions. The network can output both the expectation over future observations and samples from the belief distribution. The resulting functionality is similar to that of a Particle Filter (PF). The architecture is evaluated in an environment that simulates moving objects. Because the forward and sensor models of this environment are available, we implement a PF to gauge the quality of the models learnt from the data.
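For readers unfamiliar with the PF baseline mentioned above, the following is a minimal sketch of a generic bootstrap particle filter, not the implementation used in the paper. It assumes a hypothetical 1-D object with constant-velocity dynamics and a Gaussian position sensor; the function names (`predict`, `update`, `track`) and the noise parameters are illustrative assumptions. An observation of `None` stands for a missing percept, in which case the belief is only propagated forward, mirroring the observation-blind mode described in the abstract.

```python
import math
import random


def predict(particles, rng, process_std=0.1, dt=1.0):
    """Propagate (position, velocity) particles through an assumed
    constant-velocity forward model with Gaussian process noise."""
    return [
        (x + v * dt + rng.gauss(0.0, process_std),
         v + rng.gauss(0.0, process_std))
        for x, v in particles
    ]


def update(particles, observation, rng, obs_std=0.5):
    """Weight particles by an assumed Gaussian sensor likelihood of the
    observed position, then resample in proportion to the weights."""
    weights = [
        math.exp(-0.5 * ((observation - x) / obs_std) ** 2)
        for x, _ in particles
    ]
    if sum(weights) == 0.0:
        # Every particle is far from the observation; keep the prior belief.
        return list(particles)
    return rng.choices(particles, weights=weights, k=len(particles))


def estimate(particles):
    """Expected position under the current belief (mean over particles)."""
    return sum(x for x, _ in particles) / len(particles)


def track(observations, n_particles=500, seed=0):
    """Run the filter over a sequence of observations.

    An entry of None means no percept arrived at that step, so the belief
    is propagated without a measurement update (observation-blind mode).
    Returns the per-step position estimates and the final particle set.
    """
    rng = random.Random(seed)
    particles = [
        (rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0))
        for _ in range(n_particles)
    ]
    estimates = []
    for obs in observations:
        particles = predict(particles, rng)
        if obs is not None:
            particles = update(particles, obs, rng)
        estimates.append(estimate(particles))
    return estimates, particles
```

Calling `track([0.5, 1.0, None, 2.0])` returns one position estimate per step plus the final particles, which serve as samples from the belief distribution. Passing a list of `None` values after the last real observation yields a blind long-term prediction.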
