Learning to Reason from General Concepts to Fine-grained Tokens for Discriminative Phrase Detection

Paper and Code

Dec 06, 2021

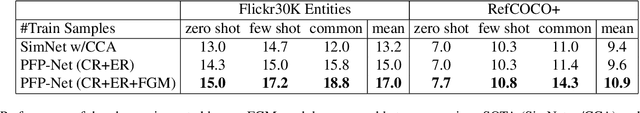

Phrase detection requires methods to identify if a phrase is relevant to an image and then localize it if applicable. A key challenge in training more discriminative phrase detection models is sampling hard-negatives. This is because few phrases are annotated of the nearly infinite variations that may be applicable. To address this problem, we introduce PFP-Net, a phrase detector that differentiates between phrases through two novel methods. First, we group together phrases of related objects into coarse groups of visually coherent concepts (eg animals vs automobiles), and then train our PFP-Net to discriminate between them according to their concept membership. Second, for phrases containing fine grained mutually-exclusive tokens (eg colors), we force the model into selecting only one applicable phrase for each region. We evaluate our approach on the Flickr30K Entities and RefCOCO+ datasets, where we improve mAP over the state-of-the-art by 1-1.5 points over all phrases on this challenging task. When considering only the phrases affected by our fine-grained reasoning module, we improve by 1-4 points on both datasets.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge