Learning to Control using Image Feedback

Paper and Code

Oct 28, 2021

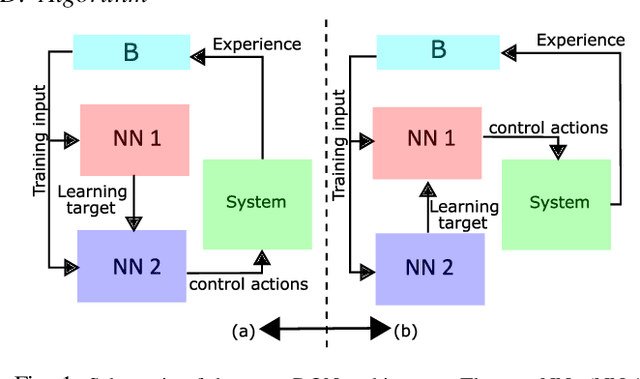

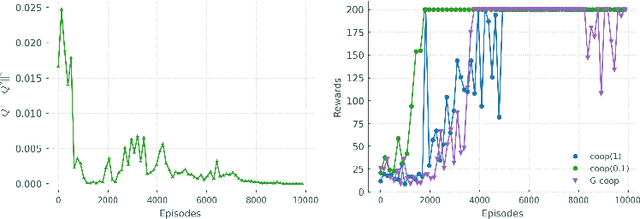

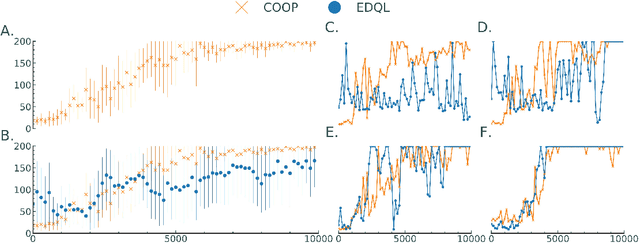

Learning to control complex systems using non-traditional feedback, such as snapshot images, is an important task in diverse domains including robotics, neuroscience, and biology (cellular systems). In this paper, we present a feedback control framework based on two neural networks (NNs) for designing control policies for systems that generate feedback in the form of images. In particular, we develop a deep $Q$-network (DQN)-driven learning control strategy that synthesizes a sequence of control inputs from snapshot images encoding the current state and control action of the system. To train the networks, we employ a direct error-driven learning (EDL) approach, which updates the NN weights in each layer using a set of linear transformations of the NN training error. We verify the efficacy of the proposed control strategy using numerical examples.
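To make the two ideas in the abstract concrete, the following is a minimal sketch and not the authors' implementation. It assumes a single small network that maps a flattened snapshot image to one $Q$-value per control action, an epsilon-greedy action choice, and a one-step temporal-difference target. The hidden layer is updated from a fixed random linear map of the output error (the matrix `B1`), which is only one possible reading of "linear transformations of the NN training error" and resembles direct feedback alignment. All sizes, names, and hyperparameters (`N_PIXELS`, `N_HIDDEN`, `N_ACTIONS`, `GAMMA`, `LR`, `B1`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes and hyperparameters (not taken from the paper).
N_PIXELS = 16 * 16   # flattened snapshot image
N_HIDDEN = 32
N_ACTIONS = 3        # discrete control inputs
GAMMA = 0.9          # discount factor
LR = 1e-2            # learning rate

# Trainable weights of a two-layer Q-network.
W1 = rng.normal(0.0, 0.1, (N_HIDDEN, N_PIXELS))
W2 = rng.normal(0.0, 0.1, (N_ACTIONS, N_HIDDEN))
# Fixed linear map that carries the output error to the hidden layer
# (assumption: stands in for the error transformation described in the abstract).
B1 = rng.normal(0.0, 0.1, (N_HIDDEN, N_ACTIONS))


def q_values(image):
    """Return the Q-value per action and the hidden activations."""
    h = np.tanh(W1 @ image)
    return W2 @ h, h


def select_action(image, epsilon):
    """Epsilon-greedy choice over the discrete control inputs."""
    if rng.random() < epsilon:
        return int(rng.integers(N_ACTIONS))
    q, _ = q_values(image)
    return int(np.argmax(q))


def td_update(image, action, reward, next_image):
    """One error-driven update from a single (image, action, reward, next image) sample."""
    global W1, W2
    q, h = q_values(image)
    q_next, _ = q_values(next_image)
    target = reward + GAMMA * np.max(q_next)

    # Training error is nonzero only for the action that was taken.
    err = np.zeros(N_ACTIONS)
    err[action] = q[action] - target

    # Output layer: gradient step on the squared TD error.
    W2 -= LR * np.outer(err, h)
    # Hidden layer: step driven by a linear transformation of the same error.
    hidden_signal = (B1 @ err) * (1.0 - h ** 2)
    W1 -= LR * np.outer(hidden_signal, image)
    return float(err[action])
```

In a control loop, one would call `select_action` on the current snapshot, apply the chosen input to the system, observe the reward and the next snapshot, and then call `td_update`. A replay buffer and a separate target network, which are common in DQN training, are omitted here for brevity.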
