Learning to Combat Noisy Labels via Classification Margins

Paper and Code

Feb 01, 2021

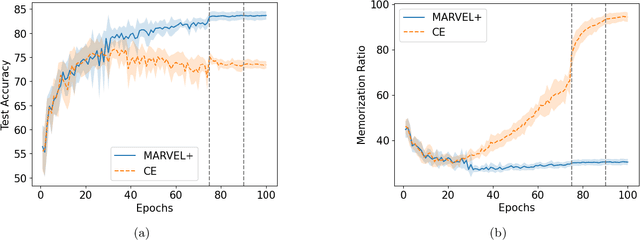

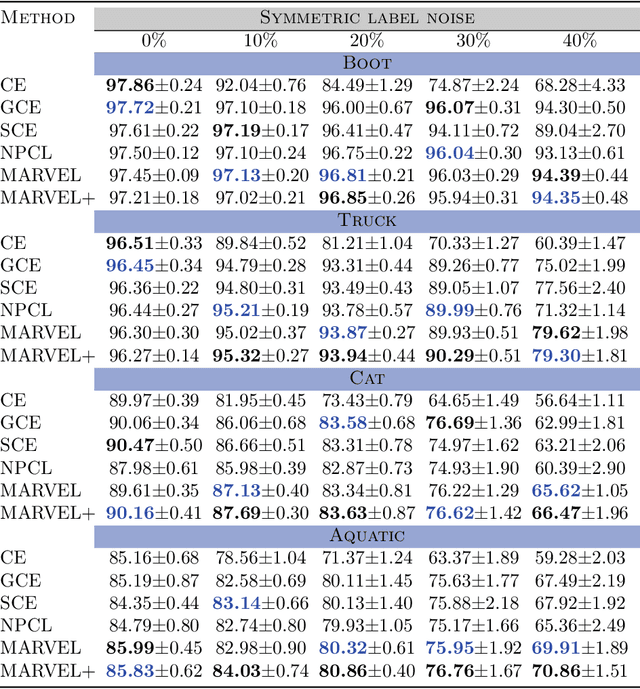

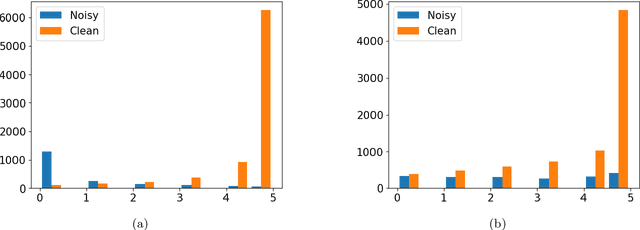

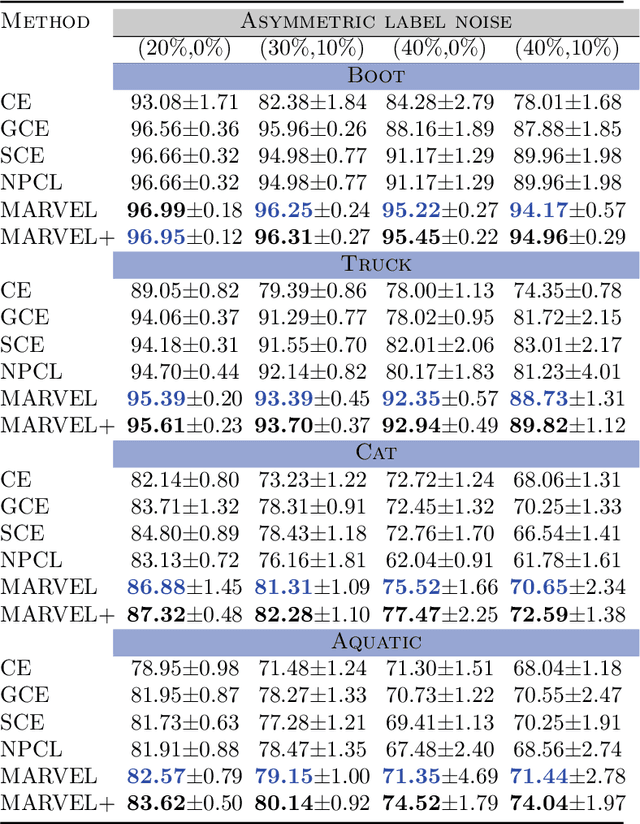

A deep neural network trained on noisy labels is known to quickly lose its power to discriminate clean instances from noisy ones. Once the early learning phase has ended, the network memorizes the noisy instances, which degrades generalization performance. To resolve this issue, we propose MARVEL (MARgins Via Early Learning), which tracks the goodness of "fit" for every instance by maintaining an epoch-history of its classification margins. Based on consecutive negative margins, we discard suspected noisy instances by zeroing out their weights. In addition, MARVEL+ upweights arduous instances, enabling the network to learn a more nuanced representation of the classification boundary. Experimental results on benchmark datasets with synthetic label noise show that MARVEL consistently outperforms the baselines across different noise levels, with a significantly larger margin under asymmetric noise.
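The margin-tracking idea described in the abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the margin is taken to be the score of the given label minus the highest score among the other classes, and the names `MarginTracker`, `classification_margin`, and the `patience` parameter (the number of consecutive negative margins before an instance is discarded) are hypothetical. The abstract does not specify the threshold or the MARVEL+ upweighting rule, so the latter is omitted here.

```python
from collections import defaultdict


def classification_margin(logits, label):
    """Assumed margin definition: score of the given label minus the
    best score among all other classes. Negative means the network
    currently prefers a different class than the (possibly noisy) label."""
    best_other = max(s for k, s in enumerate(logits) if k != label)
    return logits[label] - best_other


class MarginTracker:
    """Keeps a per-instance epoch history of margins and zeroes the weight
    of instances whose last `patience` margins were all negative.
    `patience` is a hypothetical knob, not a value taken from the paper."""

    def __init__(self, patience=2):
        self.patience = patience
        self.history = defaultdict(list)         # instance id -> margins per epoch
        self.weights = defaultdict(lambda: 1.0)  # instance id -> sample weight

    def update(self, idx, logits, label):
        """Record this epoch's margin for instance `idx`; return its weight."""
        self.history[idx].append(classification_margin(logits, label))
        recent = self.history[idx][-self.patience:]
        if len(recent) == self.patience and all(m < 0 for m in recent):
            # Assumption: once discarded, an instance stays discarded.
            self.weights[idx] = 0.0
        return self.weights[idx]


# Usage: call update() once per instance per epoch, then multiply each
# instance's loss by the returned weight.
tracker = MarginTracker(patience=2)
for epoch in range(2):
    w_suspect = tracker.update(0, [2.0, 1.0, 0.0], label=1)  # margin is negative
    w_clean = tracker.update(1, [2.0, 1.0, 0.0], label=0)    # margin is positive
```

In a training loop, the returned weight would scale each instance's loss term, so that discarded instances stop contributing gradients.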
