Learning to acquire novel cognitive tasks with evolution, plasticity and meta-meta-learning

Paper and Code

Jan 17, 2022

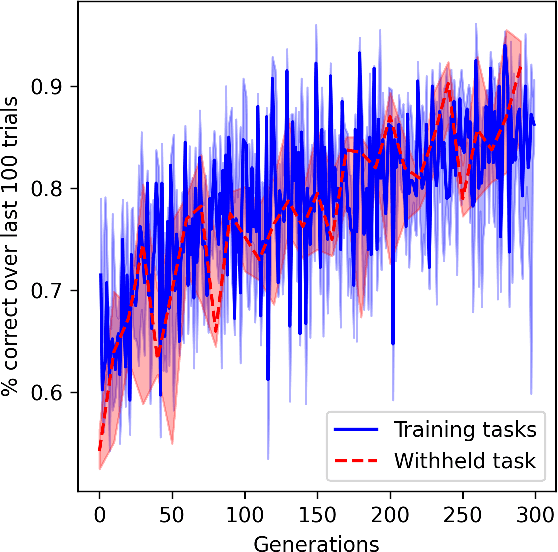

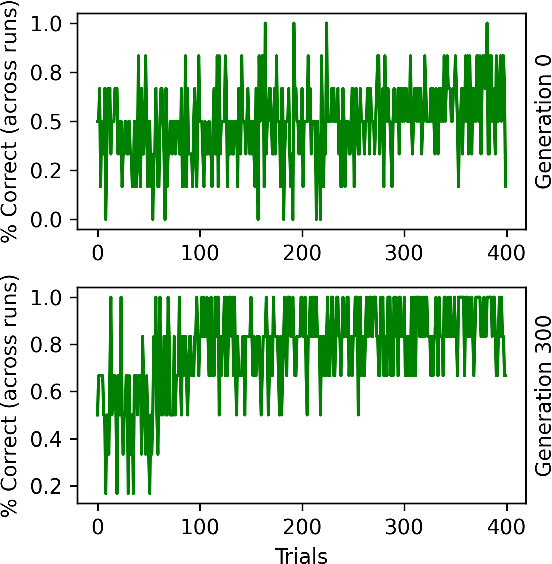

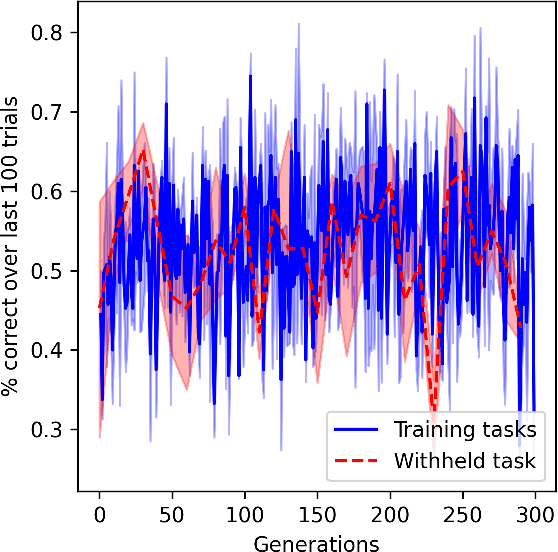

In meta-learning, networks are trained with external algorithms to learn tasks that require acquiring, storing and exploiting unpredictable information for each new instance of the task. However, animals are able to pick up such cognitive tasks automatically, as a result of their evolved neural architecture and synaptic plasticity mechanisms. Here we evolve neural networks, endowed with plastic connections, over a sizeable set of simple meta-learning tasks based on a framework from computational neuroscience. The resulting evolved network can automatically acquire a novel simple cognitive task, never seen during training, through the spontaneous operation of its evolved neural organization and plasticity structure. We suggest that attending to the multiplicity of loops involved in natural learning may provide useful insight into the emergence of intelligent behavior.
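To make the idea of nested loops concrete, here is a minimal sketch, not the paper's implementation. It assumes a Hebbian-trace form of plasticity (effective recurrent weight = fixed weight + `alpha` × trace) and a simple elitist mutation loop as the evolutionary algorithm. The cue-recall task, all function names, and all hyperparameters are hypothetical stand-ins chosen only to keep the example small; it shows two loops (evolution outside, plasticity within an episode), not the full hierarchy the abstract refers to.

```python
import numpy as np

def run_episode(params, inputs, n_hidden):
    """Inner loop: run one episode; the Hebbian trace starts at zero each episode."""
    h = np.zeros(n_hidden)
    hebb = np.zeros((n_hidden, n_hidden))
    outputs = []
    for x in inputs:
        h_prev = h
        # Effective recurrent weights = evolved baseline + evolved gain * lifetime trace.
        w_eff = params["w_rec"] + params["alpha"] * hebb
        h = np.tanh(params["w_in"] @ x + w_eff @ h_prev)
        # Assumed Hebbian rule: trace accumulates post * pre activity, clipped for stability.
        hebb = np.clip(hebb + params["eta"] * np.outer(h, h_prev), -1.0, 1.0)
        outputs.append(h.copy())
    return np.array(outputs), hebb

def init_params(rng, n_in, n_hidden):
    return {
        "w_in": rng.normal(0.0, 0.5, (n_hidden, n_in)),
        "w_rec": rng.normal(0.0, 0.5, (n_hidden, n_hidden)),
        "alpha": rng.normal(0.0, 0.1, (n_hidden, n_hidden)),
        "eta": 0.1,  # plasticity rate, kept fixed in this sketch
    }

def mutate(params, rng, sigma=0.05):
    """Add Gaussian noise to every array-valued parameter; scalars are copied unchanged."""
    return {
        k: v + sigma * rng.normal(size=v.shape) if isinstance(v, np.ndarray) else v
        for k, v in params.items()
    }

def fitness(params, n_in=2, n_hidden=8, n_steps=5):
    """Toy stand-in task: recall a cue (+1 or -1) shown at step 0 on hidden unit 0."""
    total = 0.0
    for cue in (-1.0, 1.0):
        inputs = np.zeros((n_steps, n_in))
        inputs[0, 0] = cue
        outputs, _ = run_episode(params, inputs, n_hidden)
        total -= (outputs[-1, 0] - cue) ** 2
    return total

def evolve(n_generations=20, pop_size=16, seed=0):
    """Outer loop: elitist mutation search over the innate weights and plasticity gains."""
    rng = np.random.default_rng(seed)
    best = init_params(rng, 2, 8)
    initial_fit = best_fit = fitness(best)
    for _ in range(n_generations):
        for _ in range(pop_size):
            child = mutate(best, rng)
            child_fit = fitness(child)
            if child_fit > best_fit:
                best, best_fit = child, child_fit
    return best, initial_fit, best_fit

if __name__ == "__main__":
    _, f0, f1 = evolve()
    print(f"fitness before evolution: {f0:.3f}, after: {f1:.3f}")
```

The design point this illustrates is the separation of timescales: `w_in`, `w_rec` and `alpha` change only across generations, while the trace `hebb` changes within a single episode and is discarded afterwards.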
