Learning the Ising Model with Generative Neural Networks

Paper and Code

Jan 15, 2020

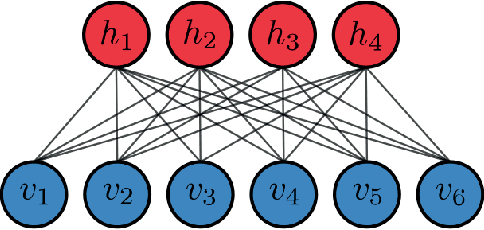

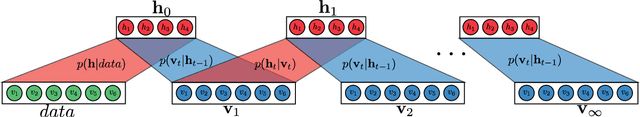

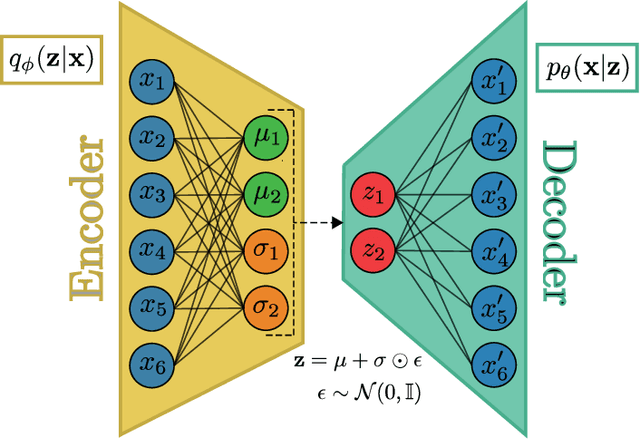

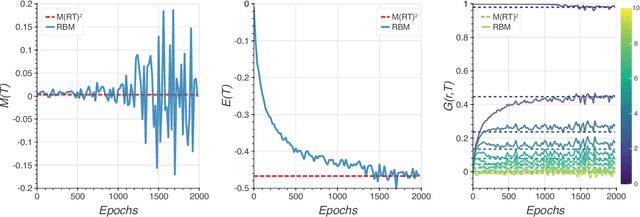

Recent advances in deep learning and neural networks have led to an increased interest in the application of generative models in statistical and condensed matter physics. In particular, restricted Boltzmann machines (RBMs) and variational autoencoders (VAEs) as specific classes of neural networks have been successfully applied in the context of physical feature extraction and representation learning. Despite these successes, however, there is only limited understanding of their representational properties and limitations. To better understand the representational characteristics of both generative neural networks, we study the ability of single RBMs and VAEs to capture physical features of the Ising model at different temperatures. This approach allows us to quantitatively assess learned representations by comparing sample features with corresponding theoretical predictions. Our results suggest that the considered RBMs and convolutional VAEs are able to capture the temperature dependence of magnetization, energy, and spin-spin correlations. The samples generated by RBMs are more evenly distributed across temperature than those of VAEs. We also find that convolutional layers in VAEs are important to model spin correlations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge