Learning Shape Features and Abstractions in 3D Convolutional Neural Networks for Detecting Alzheimer's Disease


Sep 10, 2020

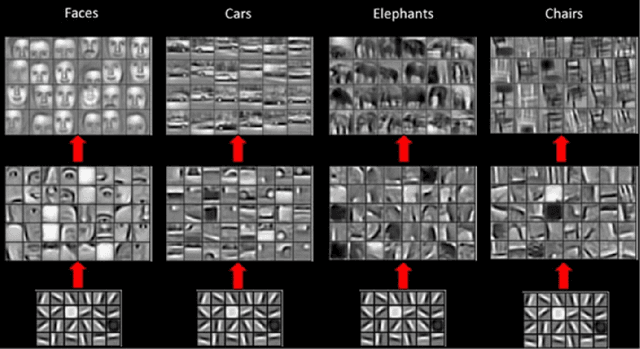

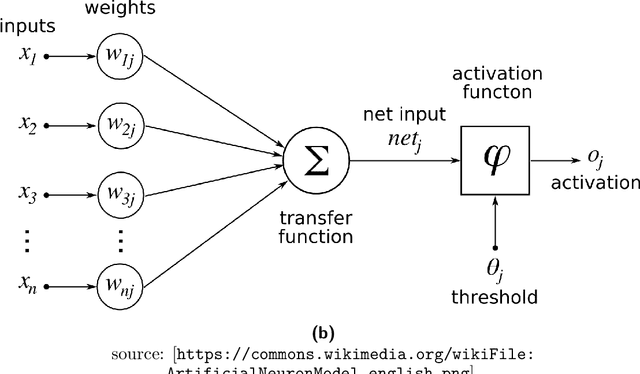

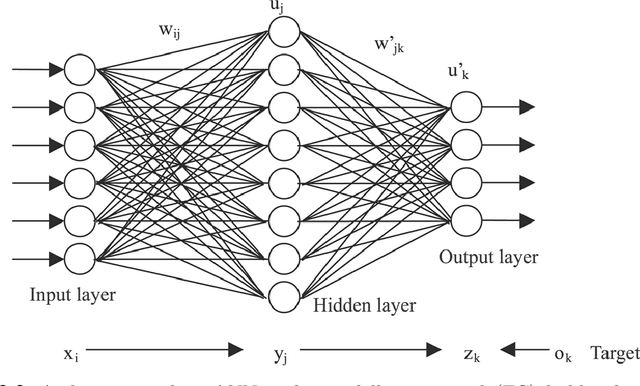

Deep neural networks, especially convolutional neural networks (ConvNets), have become the state of the art for image classification, pattern recognition, and various computer vision tasks. ConvNets have huge potential in the medical domain for analyzing medical data to diagnose diseases efficiently. Early diagnosis, based on features that a ConvNet model extracts from MRI data, is crucial for preventing the progression of Alzheimer's disease and for treating it. Although such models can deliver strong performance, the lack of interpretability of their decisions can lead to misdiagnosis, which can be life-threatening. In this thesis, the shape features and abstractions learned by 3D ConvNets for detecting Alzheimer's disease were investigated using various visualization techniques. How changes in network structure, filter sizes, and filter shapes affect the overall performance and the learned features of the model was also inspected. Layer-wise Relevance Propagation (LRP) relevance maps of different models revealed which parts of the brain were more relevant for the classification decision. Comparing the learned filters using Activation Maximization showed how patterns were encoded in different layers of the network. Finally, transfer learning from a convolutional autoencoder was implemented to check whether increasing the number of training samples, by using patches of the input to extract low-level features, improves the learned features and the model performance.
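
The idea of increasing the number of training samples with patches of the input can be illustrated with a minimal sketch. It is not the thesis's implementation: the function name `extract_patches`, the patch size, the stride, and the synthetic 32×32×32 volume are all assumptions chosen for illustration, and NumPy is used only to show how a single 3D volume yields many smaller training samples.

```python
import numpy as np


def extract_patches(volume, patch_size, stride):
    """Slide a cubic window over a 3D volume and collect every patch.

    Hypothetical helper for illustration; the patch size and stride
    actually used in the thesis are not stated in the abstract.
    """
    depth, height, width = volume.shape
    patches = []
    for z in range(0, depth - patch_size + 1, stride):
        for y in range(0, height - patch_size + 1, stride):
            for x in range(0, width - patch_size + 1, stride):
                patches.append(
                    volume[z:z + patch_size, y:y + patch_size, x:x + patch_size]
                )
    return np.stack(patches)


# Synthetic stand-in for one MRI scan (assumed shape, random values).
volume = np.random.default_rng(0).random((32, 32, 32))

# Overlapping 16^3 patches with stride 8: 3 positions per axis, 27 patches.
patches = extract_patches(volume, patch_size=16, stride=8)
print(patches.shape)  # (27, 16, 16, 16)
```

Under these assumptions, one scan becomes 27 samples. Patches like these could serve as the inputs on which a convolutional autoencoder is pretrained before its encoder weights are transferred to a classifier.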
