Learning recurrent dynamics in spiking networks


Aug 18, 2018

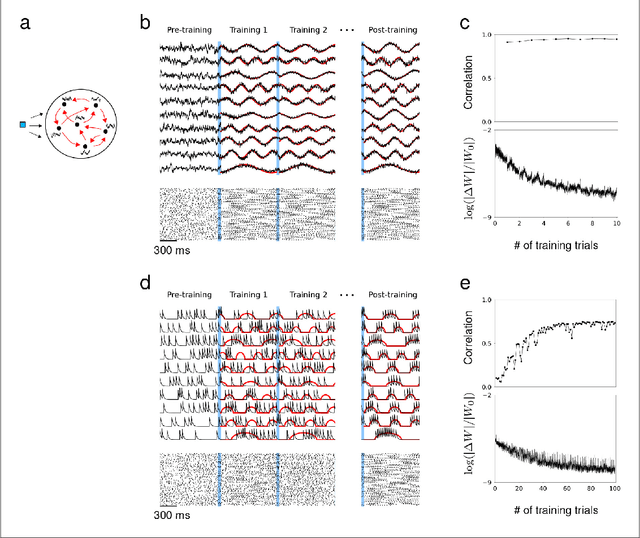

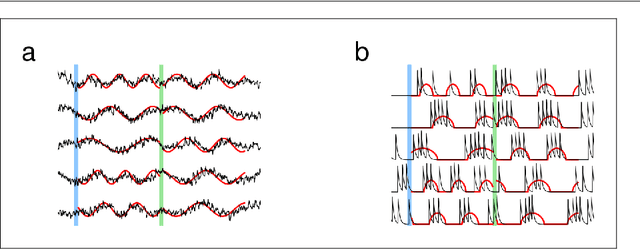

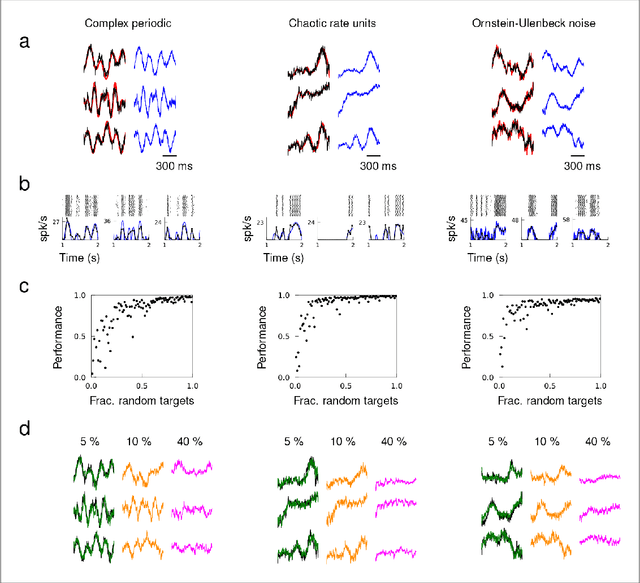

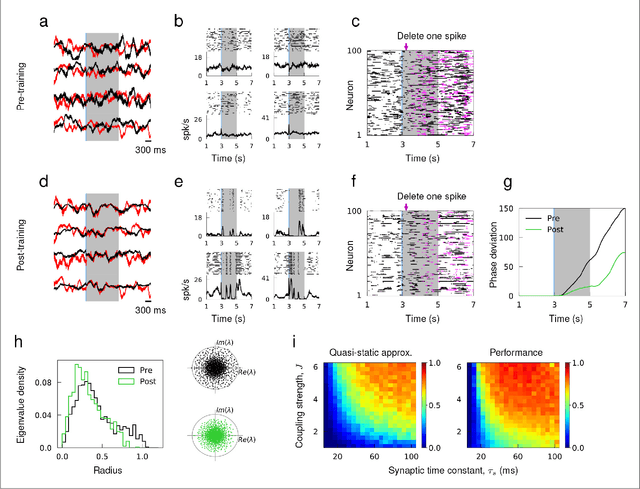

The spiking activity of neurons engaged in learning and performing a task shows complex spatiotemporal dynamics. While the outputs of recurrent network models can be trained to perform various tasks, the range of recurrent dynamics that can emerge after learning remains unknown. Here we show that modifying the recurrent connectivity with a recursive least squares algorithm provides enough flexibility for the synaptic and spiking rate dynamics of spiking networks to produce a wide range of spatiotemporal activity. We apply the training method to learn arbitrary firing patterns, stabilize the irregular spiking activity of a balanced network, and reproduce the heterogeneous spiking rate patterns of cortical neurons engaged in motor planning and movement. We identify sufficient conditions for successful learning, characterize two types of learning errors, and assess the network capacity. Our findings show that synaptically coupled recurrent spiking networks possess a vast computational capability that can support the diverse activity patterns in the brain.
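
The core of the training method described above is a recursive least squares (RLS) update of recurrent weights. The following is a minimal sketch of that update rule only, not the authors' code. It assumes open-loop training: the presynaptic signals are fixed synthetic sinusoids standing in for the network's filtered spike trains, and the targets are synaptic drives that are exact linear combinations of them. The function `rls_train`, the regularizer `alpha`, and all signal parameters are hypothetical choices for illustration.

```python
import numpy as np


def rls_train(R, F, alpha=1.0):
    """Online RLS fit of weights W so that R @ W.T approximates F.

    R: (T, N) presynaptic signals, one row per time step
       (hypothetical stand-in for filtered spike trains).
    F: (T, M) target synaptic drives for M postsynaptic neurons.
    alpha: regularizer; P starts as I / alpha.
    Returns W with shape (M, N).
    """
    T, N = R.shape
    M = F.shape[1]
    W = np.zeros((M, N))
    P = np.eye(N) / alpha  # running inverse of (alpha*I + sum of r r^T)
    for t in range(T):
        r = R[t]
        Pr = P @ r
        c = 1.0 / (1.0 + r @ Pr)   # gain normalisation
        P -= c * np.outer(Pr, Pr)  # rank-one (Sherman-Morrison) update of P
        e = W @ r - F[t]           # a priori error of every postsynaptic neuron
        W -= c * np.outer(e, Pr)   # all rows share the same input r, hence the same P
    return W


# Hypothetical demo: 2 s of signals sampled every 1 ms.
rng = np.random.default_rng(0)
T, N, M = 2000, 50, 3
t = np.arange(T) * 1e-3
freqs = rng.uniform(1.0, 10.0, N)        # Hz
phases = rng.uniform(0.0, 2 * np.pi, N)
R = np.sin(2 * np.pi * freqs * t[:, None] + phases)  # (T, N) presynaptic signals
W_true = rng.normal(0.0, 1.0 / np.sqrt(N), (M, N))
F = R @ W_true.T                         # (T, M) target drives
W = rls_train(R, F, alpha=1.0)
```

Starting from zero weights with `P = I / alpha`, one pass of RLS reproduces the ridge-regression solution `np.linalg.solve(R.T @ R + alpha * np.eye(N), R.T @ F).T`, which gives a convenient correctness check. Sharing one `P` across rows is valid here only because every postsynaptic unit in the sketch sees the same presynaptic vector. With sparse connectivity, each neuron would need its own `P` over its own presynaptic set.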
