Learning Parse and Translation Decisions From Examples With Rich Context

Jun 30, 1997

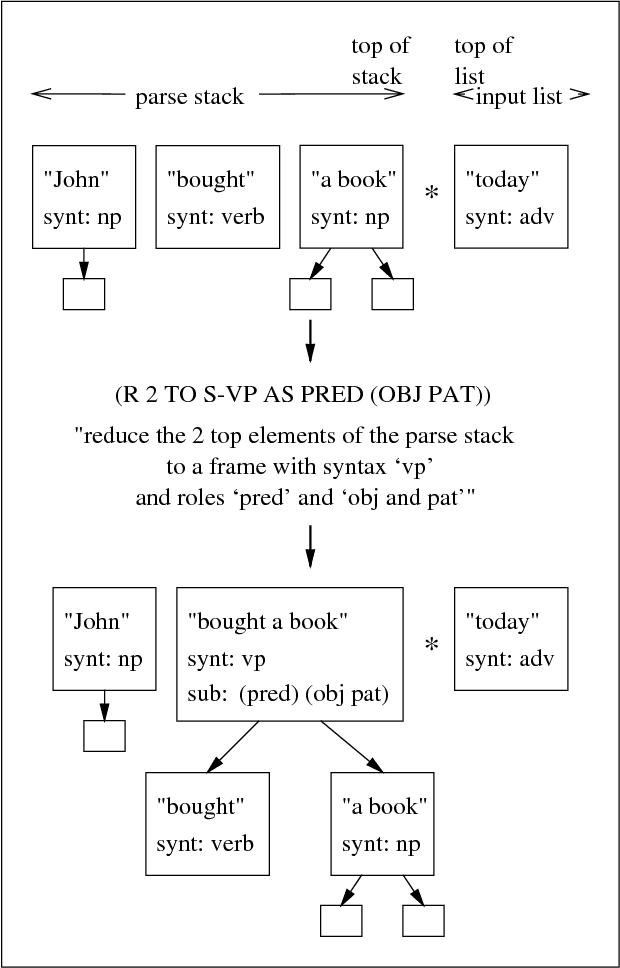

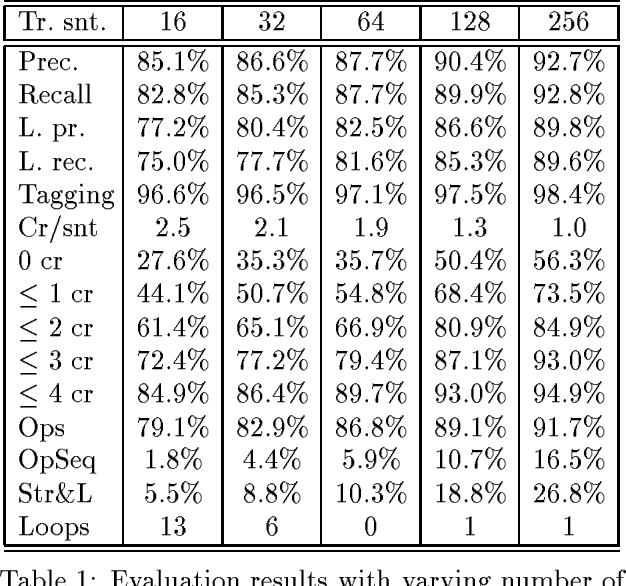

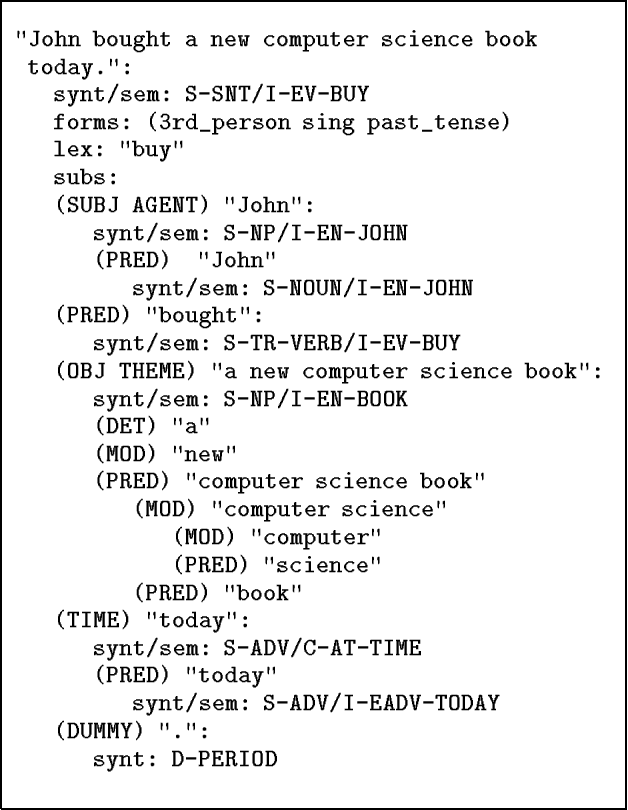

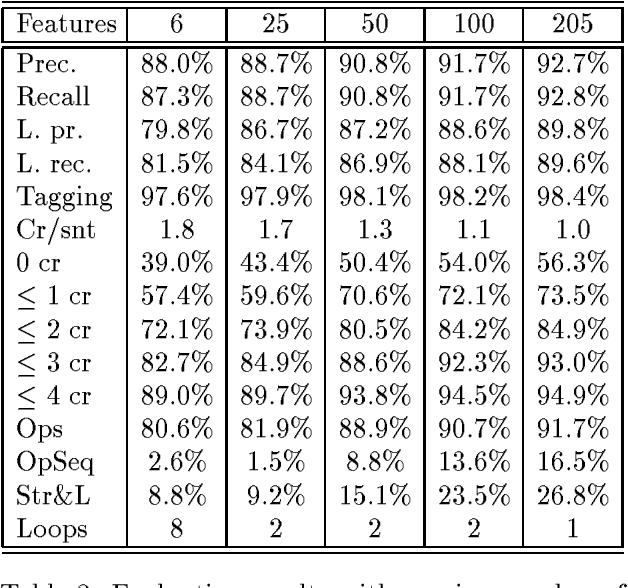

We propose a system for parsing and translating natural language that learns from examples and draws on some background knowledge. As our parsing model we choose a deterministic shift-reduce parser that integrates part-of-speech tagging with syntactic and semantic processing. Using machine learning techniques, the system takes parse action examples acquired under supervision and generates a parser in the form of a decision structure, a generalization of decision trees. To learn good parsing and translation decisions, our system relies heavily on context, currently encoded in 205 features that describe the morphological, syntactic and semantic aspects of a given parse state. Compared with recent probabilistic systems trained on 40,000 sentences, our system relies on more background knowledge and a deeper analysis, but on radically fewer examples, currently 256 sentences. We test our parser on lexically limited sentences from the Wall Street Journal and achieve 89.8% labeled precision and 98.4% part-of-speech tagging accuracy, with 56.3% of test sentences free of crossing brackets. Machine translations of 32 Wall Street Journal sentences into German were evaluated by 10 bilingual volunteers and graded 2.4 on a scale from 1.0 (best) to 6.0 (worst) for both grammatical correctness and meaning preservation.
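
To make the parsing model concrete, the following is a minimal sketch of a deterministic shift-reduce loop in which each action is chosen from features of the current parse state. It is an illustration under stated assumptions, not the system described here: the three features, the action format, the tiny lexicon and the hand-written rules in `toy_decide` are all hypothetical stand-ins for the 205 features and the learned decision structure.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# A node is (label, children); children are nodes or, for tagged words, strings.
Node = Tuple[str, list]
Action = Tuple  # ("shift", tag) | ("reduce", n, label) | ("done",)


@dataclass
class ParseState:
    stack: List[Node] = field(default_factory=list)
    buffer: List[str] = field(default_factory=list)


def extract_features(state: ParseState) -> Dict[str, str]:
    """Describe the parse state with a few context features (hypothetical)."""
    return {
        "top": state.stack[-1][0] if state.stack else "<none>",
        "second": state.stack[-2][0] if len(state.stack) > 1 else "<none>",
        "next_word": state.buffer[0] if state.buffer else "<end>",
    }


# Hypothetical lexicon; shifting a word also assigns its part of speech.
TOY_LEXICON = {"the": "DET", "dog": "NOUN", "barks": "VERB"}


def toy_decide(f: Dict[str, str]) -> Action:
    """Hand-written stand-in for a decision structure learned from examples."""
    if f["second"] == "DET" and f["top"] == "NOUN":
        return ("reduce", 2, "NP")
    if f["second"] == "NP" and f["top"] == "VERB" and f["next_word"] == "<end>":
        return ("reduce", 2, "S")
    if f["next_word"] != "<end>":
        return ("shift", TOY_LEXICON[f["next_word"]])
    return ("done",)


def parse(words: List[str], decide=toy_decide, max_steps: int = 100) -> List[Node]:
    """Apply one action per step, never backtracking (deterministic parsing)."""
    state = ParseState(buffer=list(words))
    for _ in range(max_steps):
        action = decide(extract_features(state))
        if action[0] == "shift":
            word = state.buffer.pop(0)
            state.stack.append((action[1], [word]))
        elif action[0] == "reduce":
            _, n, label = action
            children = state.stack[-n:]
            del state.stack[-n:]
            state.stack.append((label, children))
        else:  # "done"
            break
    return state.stack


if __name__ == "__main__":
    print(parse(["the", "dog", "barks"]))
```

In a learned setting, `toy_decide` would be replaced by a classifier trained on supervised parse action examples, each pairing a feature vector with the correct action for that state.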
