Learning Distributions by Generative Adversarial Networks: Approximation and Generalization

May 25, 2022

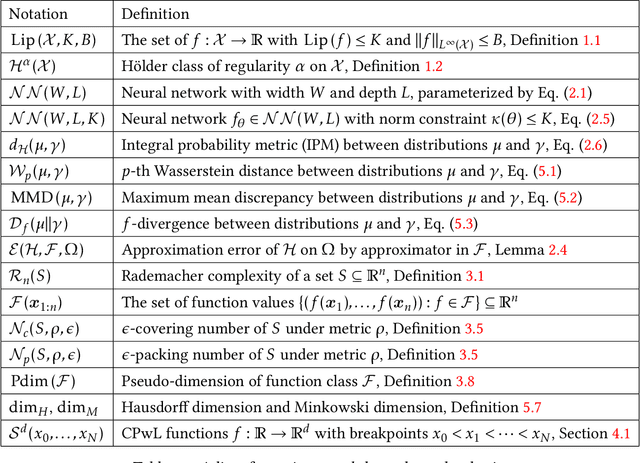

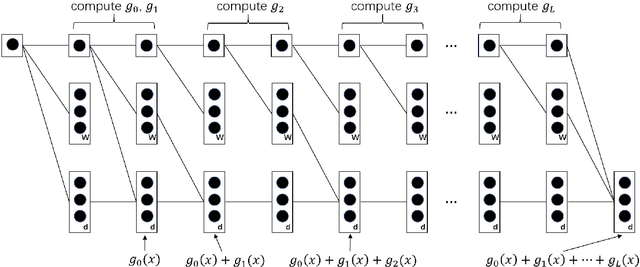

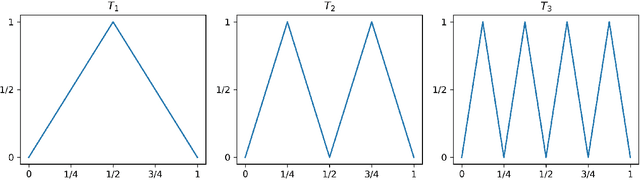

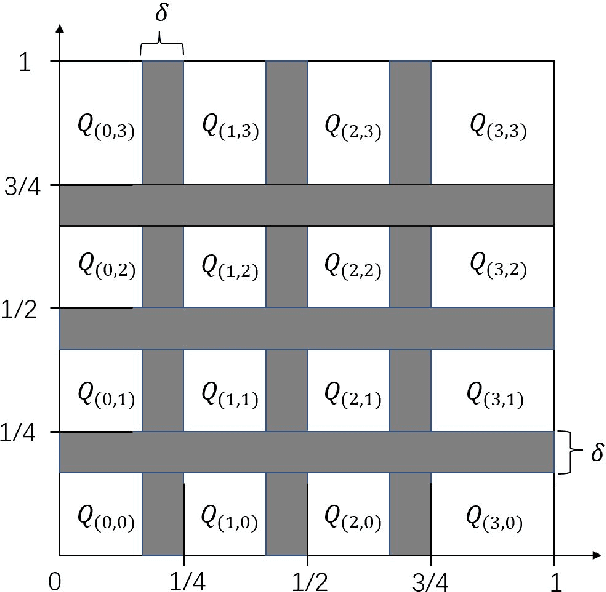

We study how well generative adversarial networks (GANs) learn probability distributions from finite samples by analyzing the convergence rates of these models. Our analysis is based on a new oracle inequality that decomposes the estimation error of GANs into the discriminator and generator approximation errors, the generalization error, and the optimization error. To estimate the discriminator approximation error, we establish error bounds on approximating Hölder functions by ReLU neural networks, with explicit upper bounds on the Lipschitz constant of the network or norm constraints on the weights. For the generator approximation error, we show that neural networks can approximately transform a low-dimensional source distribution into a high-dimensional target distribution, and we bound this approximation error in terms of the width and depth of the network. Combining the approximation results with generalization bounds for neural networks from statistical learning theory, we establish the convergence rates of GANs in various settings, where the error is measured by a collection of integral probability metrics defined through Hölder classes, including the Wasserstein distance as a special case. In particular, for distributions concentrated around a low-dimensional set, we show that the convergence rates of GANs depend not on the high ambient dimension but on the lower intrinsic dimension.
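
For readers unfamiliar with the error measure named in the abstract, the standard definition of an integral probability metric can be written out as follows. The notation here ($\mathcal{H}$, $\mu$, $\nu$, $\mathrm{Lip}_1$) is assumed for illustration and is not taken from the paper itself; the second line is the Kantorovich-Rubinstein duality, which is how the Wasserstein-1 distance arises as a special case when the function class is the 1-Lipschitz functions.

```latex
% Integral probability metric indexed by a function class \mathcal{H}
% (notation assumed for illustration, not quoted from the paper)
d_{\mathcal{H}}(\mu, \nu)
  = \sup_{h \in \mathcal{H}}
    \left| \mathbb{E}_{x \sim \mu}[h(x)] - \mathbb{E}_{y \sim \nu}[h(y)] \right|

% Special case: \mathcal{H} = \mathrm{Lip}_1, the 1-Lipschitz functions,
% recovers the Wasserstein-1 distance (Kantorovich-Rubinstein duality)
W_1(\mu, \nu)
  = \sup_{h \in \mathrm{Lip}_1}
    \left( \mathbb{E}_{x \sim \mu}[h(x)] - \mathbb{E}_{y \sim \nu}[h(y)] \right)
```

In the GAN setting, the discriminator network plays the role of the test function $h$, which is why approximating Hölder functions by ReLU networks controls the discriminator approximation error.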
