Learning Attribute Representation for Human Activity Recognition


Feb 02, 2018

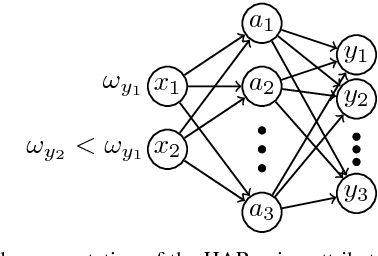

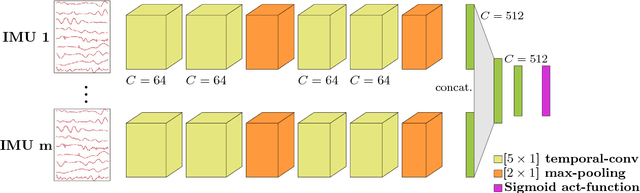

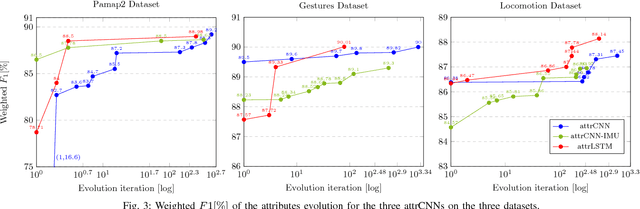

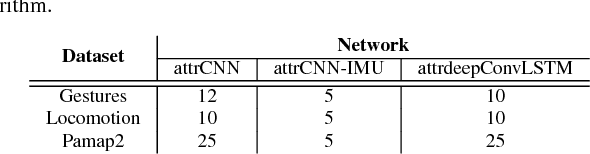

Attribute representations have become relevant in image recognition and word spotting, where they provide support in the presence of unbalanced and disjoint datasets. However, for human activity recognition using sequential data from on-body sensors, human-labeled attributes are lacking. This paper introduces a search for attributes that favorably represent signal segments for recognizing human activities. It presents three deep architectures for predicting attributes, including temporal convolutions and an IMU-centered design. An empirical evaluation of random and learned attribute representations, as well as of the networks, is carried out on two datasets, outperforming the state of the art.

* 6 pages, submitted to ICPR 2018
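To make the idea of an attribute representation concrete, here is a minimal sketch of one common way such a scheme can work. It assumes that each activity class is assigned a distinct random binary attribute vector, that a network outputs per-attribute probabilities (e.g. sigmoid outputs), and that a segment is classified by the nearest class vector under squared Euclidean distance. The helper names `random_attribute_representation` and `nearest_class` are hypothetical, and the decoding rule is an illustrative choice, not necessarily the one used in the paper.

```python
import random


def random_attribute_representation(num_classes, num_attributes, seed=0):
    """Hypothetical helper: give each class a distinct random binary attribute vector."""
    if num_classes > 2 ** num_attributes:
        raise ValueError("not enough attributes to give every class a unique vector")
    rng = random.Random(seed)  # seeded so the table is reproducible
    seen = set()
    table = []
    while len(table) < num_classes:
        vec = tuple(rng.randint(0, 1) for _ in range(num_attributes))
        if vec not in seen:  # reject duplicates so classes stay distinguishable
            seen.add(vec)
            table.append(vec)
    return table


def nearest_class(predicted, table):
    """Hypothetical helper: return the index of the class whose attribute
    vector has the smallest squared Euclidean distance to the prediction."""
    def squared_distance(code):
        return sum((p - c) ** 2 for p, c in zip(predicted, code))
    return min(range(len(table)), key=lambda k: squared_distance(table[k]))


if __name__ == "__main__":
    # A fixed, hand-written table of 3 classes x 4 attributes (illustrative only).
    table = [(1, 0, 1, 0), (0, 1, 1, 0), (0, 0, 0, 1)]
    # Assumed per-attribute probabilities predicted for one sensor segment.
    predicted = [0.9, 0.2, 0.7, 0.1]
    print(nearest_class(predicted, table))  # prints 0
```

The distance-based decoding lets a class be recognized from a noisy attribute prediction even when no single attribute is predicted perfectly; swapping in cosine similarity or a probabilistic score would be an equally reasonable assumption.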
