Lazy Transformation-Based Learning


Jun 03, 1998

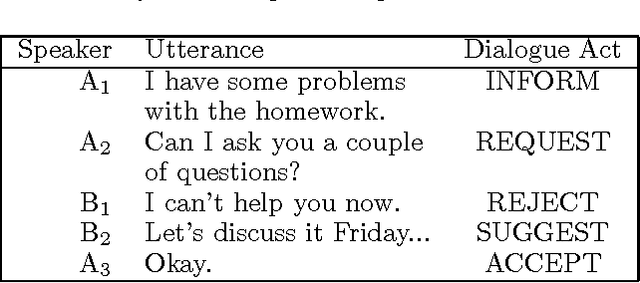

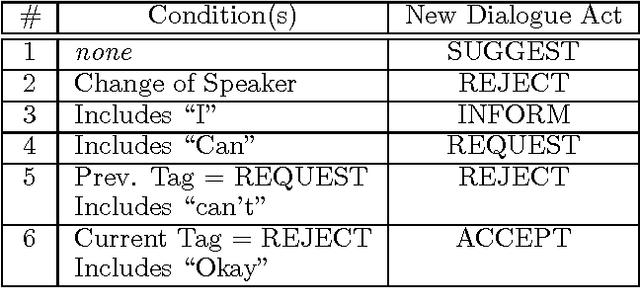

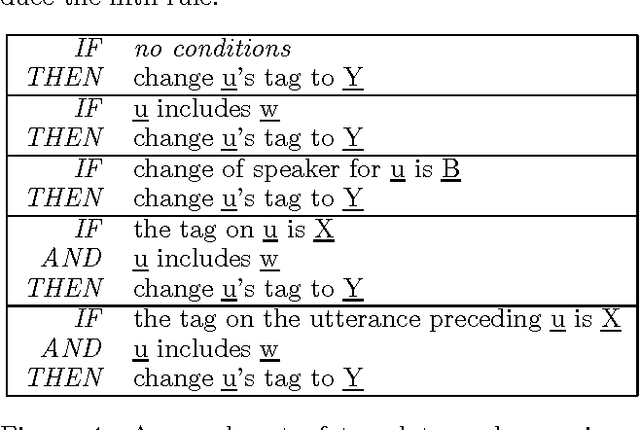

We introduce a significant improvement for a relatively new machine learning method called Transformation-Based Learning. By applying a Monte Carlo strategy to randomly sample from the space of rules, rather than exhaustively analyzing all possible rules, we drastically reduce the memory and time costs of the algorithm, without compromising accuracy on unseen data. This enables Transformation-Based Learning to apply to a wider range of domains, as it can effectively consider a larger number of different features and feature interactions in the data. In addition, the Monte Carlo improvement decreases the labor demands on the human developer, who no longer needs to develop a minimal set of rule templates to maintain tractability.
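The abstract does not spell out the sampling procedure, so the following is only a minimal illustrative sketch of the general idea, not the authors' implementation. It assumes a toy tagging task in which each item is a tuple of features, a rule has the hypothetical form `(feature_index, feature_value, from_label, to_label)`, and candidate rules are drawn by randomly picking a currently mislabeled item and a random feature of it instead of enumerating every possible rule. The names `lazy_tbl`, `score`, `apply_rule`, and `n_samples` are assumptions made for this example.

```python
import random


def apply_rule(rule, feats, labels):
    """Relabel every item whose current label and chosen feature match the rule."""
    f, v, src, dst = rule
    return [dst if (lab == src and x[f] == v) else lab
            for x, lab in zip(feats, labels)]


def score(rule, feats, labels, gold):
    """Net error reduction: labels fixed minus correct labels broken."""
    f, v, src, dst = rule
    gain = 0
    for x, lab, g in zip(feats, labels, gold):
        if lab == src and x[f] == v:
            gain += (dst == g) - (lab == g)
    return gain


def lazy_tbl(feats, gold, default, n_samples=20, max_rules=10, seed=0):
    """Greedy transformation learning over a random sample of candidate rules."""
    rng = random.Random(seed)
    labels = [default] * len(gold)
    learned = []
    for _ in range(max_rules):
        errors = [i for i, (lab, g) in enumerate(zip(labels, gold)) if lab != g]
        if not errors:
            break
        # Monte Carlo step: instantiate rules only at randomly sampled error sites.
        sampled = []
        for _ in range(n_samples):
            i = rng.choice(errors)
            f = rng.randrange(len(feats[i]))
            sampled.append((f, feats[i][f], labels[i], gold[i]))
        candidates = list(dict.fromkeys(sampled))  # dedupe, keep sampling order
        best = max(candidates, key=lambda r: score(r, feats, labels, gold))
        if score(best, feats, labels, gold) <= 0:
            break  # no sampled rule improves the training labels
        labels = apply_rule(best, feats, labels)
        learned.append(best)
    return learned, labels


# Toy data: features are (word, previous_word); "to" before a word signals a verb.
feats = [("run", "to"), ("run", "the"), ("walk", "to"),
         ("walk", "the"), ("dog", "the"), ("eat", "to")]
gold = ["VB", "NN", "VB", "NN", "NN", "VB"]
rules, final_labels = lazy_tbl(feats, gold, default="NN")
print(rules, final_labels)
```

In this sketch the cost of each iteration depends on `n_samples` rather than on the total number of rules the features could generate, which is the trade-off the abstract describes: adding more features enlarges the space that is sampled from, not the amount of work per iteration.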

* Proceedings of the 11th International Florida Artificial Intelligence Research Symposium Conference. 5 pages, 4 Postscript figures, uses aaai.sty and aaai.bst
